I am reading Robert Nishihara’s thesis on Ray [1] and have come across several concepts I am not familiar with, so this post collects my notes on them.

Ring AllReduce

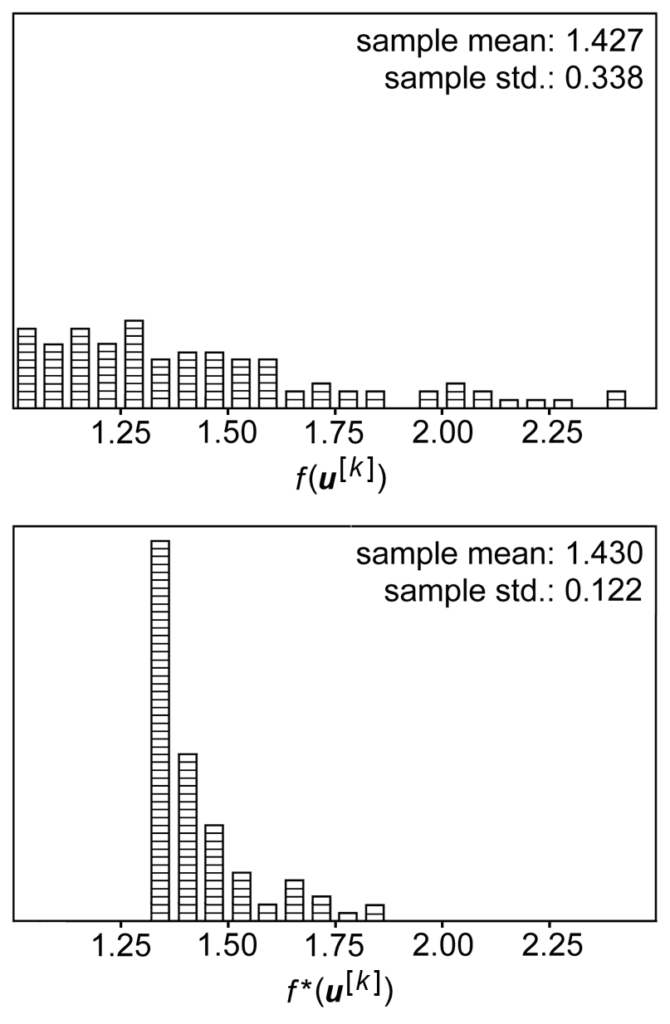

Ring AllReduce is a communication technique for aggregating results across multiple computation nodes, and it is a primitive in many distributed training systems. In the Ray thesis, the author analyzes how easily Ring AllReduce can be implemented with Ray’s API.

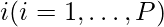

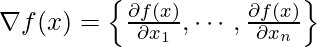

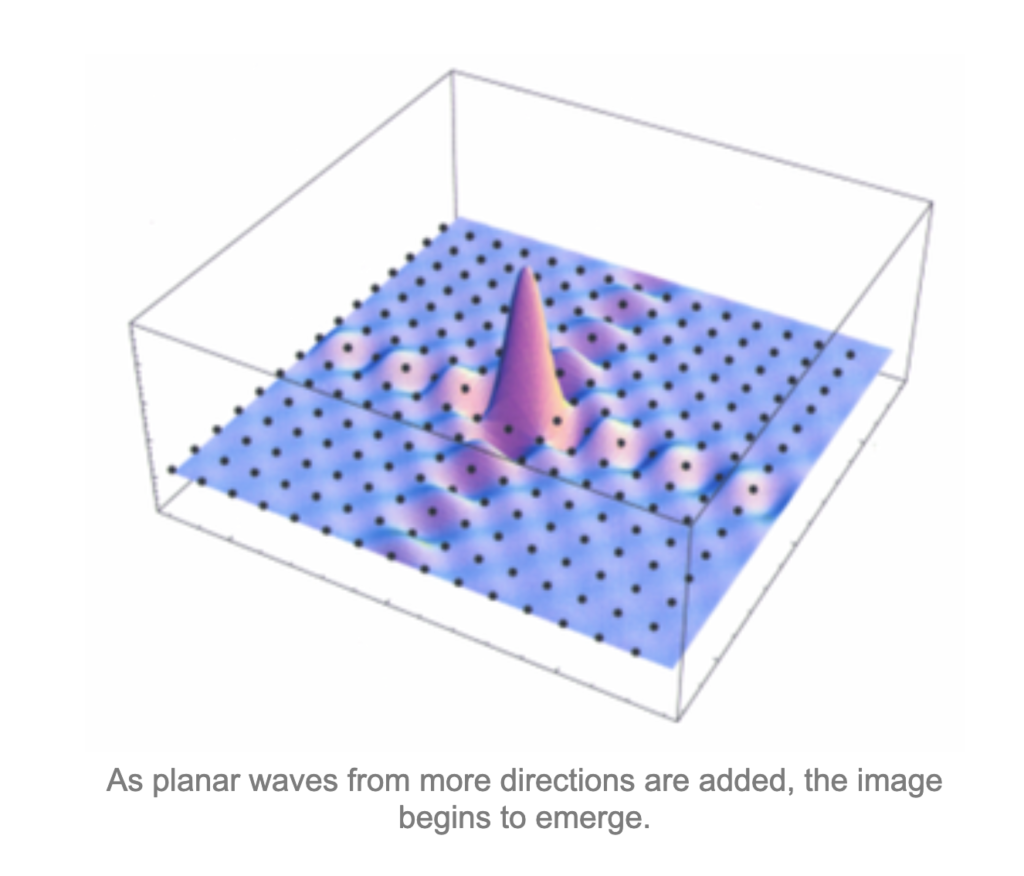

Two articles explain the idea really well [5, 6]. They point out that network communication becomes the bottleneck when a distributed training system aggregates gradients from worker nodes in a master-slave pattern. Suppose there are P-1 workers and each gradient vector has size N. Each worker sends its gradient vector to the master. The master aggregates the gradients and sends the updated model vector (the same size as a gradient vector) back to every worker for the next iteration. The master alone therefore transfers 2*(P-1)*N data through the network per iteration, which scales linearly with the number of workers.

Ring AllReduce runs multiple cycles of data transfer among all workers (P workers, since no node is needed as a master). Each cycle transfers only one chunk of size N/P per worker, and there are 2*(P-1) cycles in total, so each worker sends (and receives) 2*(P-1)*N/P data. This amount is almost independent of P. The traffic is spread evenly over all links instead of being concentrated on the master.
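Here is a quick back-of-the-envelope comparison of the two traffic formulas above, written as a minimal sketch. It only counts vector elements sent and received per node. Latency, bandwidth differences, and overlap of communication with computation are ignored, which is a simplifying assumption.

```python
def master_traffic(p: int, n: int) -> tuple:
    """(sent, received) elements at the master in one iteration with P-1 workers."""
    workers = p - 1
    sent = workers * n        # one updated model vector of size N to each worker
    received = workers * n    # one gradient vector of size N from each worker
    return sent, received


def ring_traffic_per_worker(p: int, n: int) -> tuple:
    """(sent, received) elements at each of the P workers in one Ring AllReduce."""
    cycles = 2 * (p - 1)      # P-1 aggregation cycles + P-1 sync cycles
    chunk = n / p             # every cycle moves exactly one chunk of size N/P
    return cycles * chunk, cycles * chunk


n = 1_000_000
for p in (2, 5, 17, 65):
    # master total (sent + received) vs. what a single ring worker sends
    print(p, sum(master_traffic(p, n)), ring_traffic_per_worker(p, n)[0])
```

The master's load grows linearly with P. In contrast, what each ring worker sends stays below 2N no matter how many workers join.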

The basic idea of Ring AllReduce is to divide the gradient vector on every worker into P chunks. There are first P-1 cycles of data aggregation and then P-1 cycles of data synchronization. In the first cycle, each worker $i$ sends its $i$-th chunk to the next worker (with index $(i+1) \bmod P$) and receives the $(i-1)$-th chunk from the previous worker. The received $(i-1)$-th chunk is aggregated locally with the worker’s own $(i-1)$-th chunk. In the second cycle, each worker sends its $(i-1)$-th chunk, which was aggregated in the last cycle, to the next worker. It also receives the $(i-2)$-th chunk from the previous worker. Similarly, each worker can now aggregate its own $(i-2)$-th chunk with the received chunk, which also has index $i-2$. The pattern continues, with all chunk indices taken modulo P: each worker sends its $(i-2)$-th, $(i-3)$-th, … chunk in each cycle until $P-1$ data aggregation cycles are done. At that point, each worker holds one chunk that has been fully aggregated over all workers (worker $i$ holds chunk $(i+1) \bmod P$). Then $P-1$ similar circular cycles make all workers sync on all the fully aggregated chunks. In these cycles, each worker forwards a fully aggregated chunk instead of adding to it.
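The following is a minimal single-process Python simulation of the two phases. It is only a sketch, and it makes several assumptions:

- The function name `ring_allreduce` is hypothetical and is not Ray's API.
- The aggregation is a plain sum.
- Workers are just lists in memory.
- N is divisible by P.

Within a cycle, every worker's outgoing chunk is copied first, which mimics all workers sending simultaneously.

```python
def ring_allreduce(vectors):
    """Simulate a sum-AllReduce over P workers arranged in a ring.

    vectors[w] is worker w's local gradient (a list of numbers). Returns the list of
    per-worker results; every worker ends with the element-wise sum of all vectors.
    """
    p = len(vectors)
    n = len(vectors[0])
    assert n % p == 0, "this sketch assumes N is divisible by P"
    size = n // p
    # chunks[w][c] is worker w's current copy of chunk c
    chunks = [[list(v[c * size:(c + 1) * size]) for c in range(p)] for v in vectors]

    # Phase 1 (aggregation, a.k.a. reduce-scatter): P-1 cycles.
    # In cycle k, worker w sends chunk (w - k) % p to worker (w + 1) % p, which adds it.
    for k in range(p - 1):
        outgoing = [(w, (w - k) % p, list(chunks[w][(w - k) % p])) for w in range(p)]
        for w, c, data in outgoing:
            dst = (w + 1) % p
            chunks[dst][c] = [a + b for a, b in zip(chunks[dst][c], data)]
    # Worker w now holds the fully aggregated chunk (w + 1) % p.

    # Phase 2 (sync, a.k.a. all-gather): P-1 cycles.
    # In cycle k, worker w forwards chunk (w + 1 - k) % p; the receiver overwrites its copy.
    for k in range(p - 1):
        outgoing = [(w, (w + 1 - k) % p, list(chunks[w][(w + 1 - k) % p])) for w in range(p)]
        for w, c, data in outgoing:
            chunks[(w + 1) % p][c] = data

    # Concatenate the chunks back into full vectors.
    return [[x for c in range(p) for x in chunks[w][c]] for w in range(p)]


grads = [[1, 2, 3, 4, 5, 6], [10, 20, 30, 40, 50, 60], [100, 200, 300, 400, 500, 600]]
print(ring_allreduce(grads))  # every worker gets [111, 222, 333, 444, 555, 666]
```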

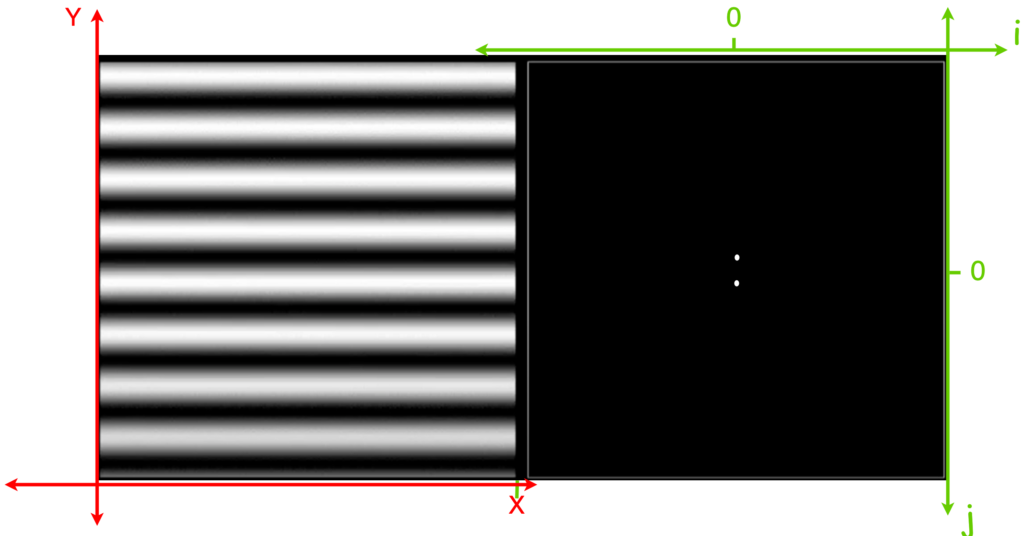

I drew a simple diagram to illustrate the idea:

ADMM (Alternating Direction Method of Multipliers)

Another interesting technique introduced in the thesis is ADMM. It is a good opportunity to revisit optimization from scratch. I mainly follow [4] and [7] for the basic concepts.

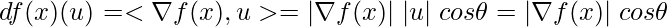

The definitions of the gradient and the directional derivative:

The gradient of $f(x)$, where $x = (x_1, \dots, x_n)$, is $\nabla f(x) = \left(\frac{\partial f}{\partial x_1}, \dots, \frac{\partial f}{\partial x_n}\right)$. The gradient is a vector that is fully known only once it is evaluated at a concrete value of $x$. The directional derivative is the gradient’s projection onto a unit vector $u$: $D_u f(x) = \langle \nabla f(x), u \rangle$, where $\langle \cdot, \cdot \rangle$ is the inner product. See some introductions in [9] and [10].
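Here is a small numerical check of $D_u f(x) = \langle \nabla f(x), u \rangle$, written as a sketch. The function $f(x_1, x_2) = x_1^2 + 3x_1x_2$, the point, and the direction are arbitrary choices for illustration. The directional derivative is approximated with a central finite difference along $u$.

```python
import math


def f(x1, x2):
    return x1 ** 2 + 3 * x1 * x2


def grad_f(x1, x2):
    # analytic gradient: (df/dx1, df/dx2)
    return (2 * x1 + 3 * x2, 3 * x1)


def directional_derivative_fd(func, x, u, h=1e-6):
    """Central finite difference of func at x along the unit vector u."""
    plus = func(x[0] + h * u[0], x[1] + h * u[1])
    minus = func(x[0] - h * u[0], x[1] - h * u[1])
    return (plus - minus) / (2 * h)


x = (1.0, 2.0)
u = (0.6, 0.8)  # unit vector: 0.6^2 + 0.8^2 = 1
assert math.isclose(math.hypot(*u), 1.0)

g = grad_f(*x)
projection = g[0] * u[0] + g[1] * u[1]  # <grad f(x), u>
numeric = directional_derivative_fd(f, x, u)
print(projection, numeric)  # both approximately 7.2
```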

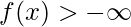

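The definition of a proper function simply means (Definition 51.5 [7]): a function $f: \mathbb{R}^n \to \mathbb{R} \cup \{-\infty, +\infty\}$ is proper if $f(x) > -\infty$ for all $x \in \mathbb{R}^n$ and $f(x) < +\infty$ for some $x \in \mathbb{R}^n$. In other words, it never takes the value $-\infty$ and is not identically $+\infty$.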

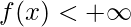

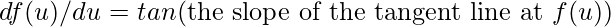

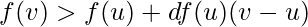

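Proposition 40.9 [7] gives a first-order characterization of convex and strictly convex functions. It applies to a function $f$ that is differentiable on an open set containing a convex set $U$:

The function $f$ is convex on $U$ iff $f(v) \geq f(u) + df_u(v - u)$ for all $u, v \in U$. Remember that $df_u(v - u)$ is the first-order change of $f$ caused by moving from $u$ in the direction $v - u$, and we have $df_u(v - u) = \langle \nabla f(u), v - u \rangle$. $f$ is strictly convex on $U$ iff $f(v) > f(u) + df_u(v - u)$ for all $u, v \in U$ with $u \neq v$.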

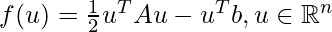

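This condition leads to a very common technique for checking whether a multivariate quadratic function is convex. Let $f(u) = \frac{1}{2}u^\top A u - u^\top b$ with $A$ symmetric. Then $f$ is convex as long as $A$ is positive semidefinite; see Example 40.1 [7]. The reason is that $\nabla f(u) = Au - b$, so $f(v) - f(u) - \langle \nabla f(u), v - u \rangle = \frac{1}{2}(v - u)^\top A (v - u)$. This is nonnegative for all $u, v$ exactly when $A$ is positive semidefinite.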
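The identity $f(v) - f(u) - \langle \nabla f(u), v - u \rangle = \frac{1}{2}(v - u)^\top A (v - u)$ for $f(u) = \frac{1}{2}u^\top A u - u^\top b$ can be checked numerically. The sketch below is limited to 2-D for brevity and uses two matrices I picked for illustration:

- A positive definite matrix, for which the first-order gap is never negative on random pairs.
- An indefinite matrix, for which a negative gap (hence non-convexity) shows up.

```python
import random


def quad(A, b, x):
    """f(x) = 0.5 * x^T A x - x^T b for 2-D x."""
    Ax = [A[0][0] * x[0] + A[0][1] * x[1], A[1][0] * x[0] + A[1][1] * x[1]]
    return 0.5 * (x[0] * Ax[0] + x[1] * Ax[1]) - (x[0] * b[0] + x[1] * b[1])


def grad_quad(A, b, x):
    """Gradient A x - b (valid because A is symmetric)."""
    return [A[0][0] * x[0] + A[0][1] * x[1] - b[0], A[1][0] * x[0] + A[1][1] * x[1] - b[1]]


def first_order_gap(A, b, u, v):
    """f(v) - f(u) - <grad f(u), v - u>; convexity requires this to be >= 0."""
    g = grad_quad(A, b, u)
    return quad(A, b, v) - quad(A, b, u) - (g[0] * (v[0] - u[0]) + g[1] * (v[1] - u[1]))


def is_psd_2x2(A):
    """A symmetric 2x2 matrix is PSD iff its diagonal entries and determinant are >= 0."""
    return A[0][0] >= 0 and A[1][1] >= 0 and A[0][0] * A[1][1] - A[0][1] * A[1][0] >= 0


b = [0.5, -1.0]
A_psd = [[2.0, 1.0], [1.0, 2.0]]    # eigenvalues 1 and 3
A_indef = [[1.0, 3.0], [3.0, 1.0]]  # eigenvalues 4 and -2

random.seed(0)
pairs = [([random.uniform(-5, 5) for _ in range(2)], [random.uniform(-5, 5) for _ in range(2)])
         for _ in range(1000)]
min_gap_psd = min(first_order_gap(A_psd, b, u, v) for u, v in pairs)
print(is_psd_2x2(A_psd), min_gap_psd >= -1e-9)  # True True
print(is_psd_2x2(A_indef), first_order_gap(A_indef, b, [0.0, 0.0], [1.0, -1.0]))  # False -2.0
```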

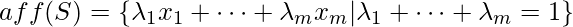

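The definition of the affine hull: given a set of vectors $S = \{a_1, \dots, a_m\}$ with $a_i \in \mathbb{R}^n$, $\text{aff}(S)$ is the set of all affine combinations of the vectors in $S$, i.e., $\text{aff}(S) = \{\lambda_1 a_1 + \dots + \lambda_m a_m \mid \lambda_i \in \mathbb{R},\ \lambda_1 + \dots + \lambda_m = 1\}$. For example, the affine hull of two distinct points is the entire line passing through them.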

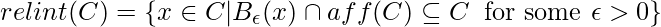

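The definition of relint (Definition 51.9 [7]):

Suppose $C$ is a subset of $\mathbb{R}^n$. The relative interior of $C$ is the set $\text{relint}(C) = \{x \in C \mid B_\epsilon(x) \cap \text{aff}(C) \subseteq C \text{ for some } \epsilon > 0\}$, where $B_\epsilon(x)$ is the open ball of radius $\epsilon$ centered at $x$. There is a good example in [8] that explains the difference between interior and relative interior. As a simple case, a line segment in $\mathbb{R}^2$ has an empty interior, but its relative interior is the segment without its two endpoints.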

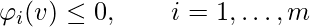

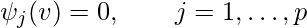

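The most important thing is to understand the high-level picture of the typical optimization procedure, which I also covered in [3]. Suppose we want to solve the primal problem:

minimize $f(x)$

subject to $g_i(x) \leq 0$ for $i = 1, \dots, m$, and $h_j(x) = 0$ for $j = 1, \dots, \ell$

with $x \in \mathcal{D}$, where $\mathcal{D} \subseteq \mathbb{R}^n$ is the domain of the problem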

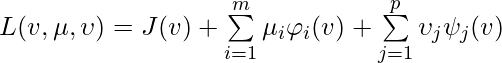

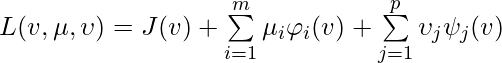

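The Lagrangian $L$ for the primal problem is defined as:

$L(x, \lambda, \nu) = f(x) + \sum_{i=1}^{m} \lambda_i g_i(x) + \sum_{j=1}^{\ell} \nu_j h_j(x)$,

where $\lambda \in \mathbb{R}^m_+$ (i.e., $\lambda_i \geq 0$ for all $i$) and $\nu \in \mathbb{R}^\ell$.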

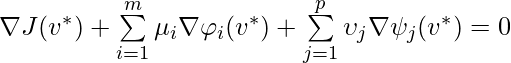

The KKT conditions are necessary conditions. Suppose $x^*$ is a local minimum of $f$ subject to the constraints above, and some regularity conditions (constraint qualifications) hold. Then there exist multipliers $\lambda^*$ and $\nu^*$ such that $x^*$ satisfies the following conditions [11]:

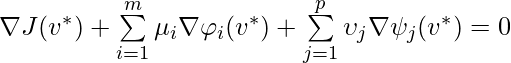

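1. stationarity: $\nabla f(x^*) + \sum_{i=1}^{m} \lambda_i^* \nabla g_i(x^*) + \sum_{j=1}^{\ell} \nu_j^* \nabla h_j(x^*) = 0$

2. primal feasibility: $g_i(x^*) \leq 0$ for $i = 1, \dots, m$, and $h_j(x^*) = 0$ for $j = 1, \dots, \ell$

3. dual feasibility: $\lambda_i^* \geq 0$ for $i = 1, \dots, m$

4. complementary slackness: $\lambda_i^* g_i(x^*) = 0$ for $i = 1, \dots, m$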
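As a sanity check, the four conditions can be verified numerically on a tiny problem of my own choosing (not taken from [7] or [11]). The problem is to minimize $f(x) = x_1^2 + x_2^2$ subject to $g(x) = 1 - x_1 - x_2 \leq 0$. Its solution is $x^* = (0.5, 0.5)$ with multiplier $\lambda^* = 1$. The sketch evaluates each condition with a small tolerance.

```python
def kkt_residuals(x, lam):
    """Violation of each KKT condition for
    minimize x1^2 + x2^2  subject to  g(x) = 1 - x1 - x2 <= 0 (no equality constraints)."""
    grad_f = (2 * x[0], 2 * x[1])
    grad_g = (-1.0, -1.0)
    g = 1 - x[0] - x[1]
    stationarity = max(abs(grad_f[k] + lam * grad_g[k]) for k in range(2))
    primal = max(g, 0.0)   # amount by which g(x) <= 0 is violated
    dual = max(-lam, 0.0)  # amount by which lambda >= 0 is violated
    slackness = abs(lam * g)
    return {"stationarity": stationarity, "primal": primal,
            "dual": dual, "slackness": slackness}


def satisfies_kkt(x, lam, tol=1e-9):
    return all(v <= tol for v in kkt_residuals(x, lam).values())


print(satisfies_kkt((0.5, 0.5), 1.0))  # True
print(satisfies_kkt((1.0, 0.0), 1.0))  # False: feasible, but stationarity fails
```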

In other words, if we already know $x^*$ is a local minimum, we know it must satisfy the KKT conditions. However, not all solutions that satisfy the KKT conditions are local minima of $f$. The KKT conditions become sufficient only when additional conditions are satisfied. One such condition is the “second-order sufficient conditions” [12], which I also talked about in [3]. They guarantee that a KKT point is a strict local minimum. Another is convexity: suppose the objective $f$, the inequality constraints $g_i$, and the domain $\mathcal{D}$ are convex, and the equality constraints $h_j$ are affine. Then the KKT conditions also imply that $x^*$ is a global minimum (Theorem 50.6 [7]).

Some problems have structure that lets us use the KKT conditions as sufficient conditions to find the global solution. Others are easier to solve or approximate with another method called dual ascent. By maximizing another function, called the dual function, we can get either the exact optimal value of the primal problem or a lower bound on it.

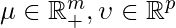

The dual function $G$ is defined as:

$G(\lambda, \nu) = \inf_{x \in \mathcal{D}} L(x, \lambda, \nu)$

The dual problem is defined as:

maximize $G(\lambda, \nu)$

subject to $\lambda \in \mathbb{R}^m_+$ (i.e., $\lambda_i \geq 0$ for all $i$)

From [7] page 1697, one of the main advantages of the dual problem over the primal problem is that it is a convex optimization problem. We maximize a concave objective function $G$ (i.e., minimize $-G$, a convex function), and the constraints $\lambda_i \geq 0$ are convex. $G$ is concave even when the primal problem is not convex, because it is the pointwise infimum of functions that are affine in $(\lambda, \nu)$. In a number of practical situations, the dual function $G$ can indeed be computed.

If the maximum of the dual problem is $d^*$ and the minimum of the primal problem is $p^*$, weak duality always holds: $d^* \leq p^*$. Strong duality, $d^* = p^*$ (i.e., a zero duality gap), holds only under certain conditions, for example Slater’s condition [14] for convex problems. Because of the constraints $\lambda_i \geq 0$, finding the dual maximizer $\lambda^*$ usually needs special treatment rather than plain gradient ascent. For example, the SVM dual problem can be solved by Sequential Minimal Optimization (SMO) [13]. SMO is a coordinate ascent algorithm that updates two multipliers at a time so that the dual constraints remain satisfied.
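A one-dimensional example of my own makes weak and strong duality concrete: minimize $f(x) = x^2$ subject to $g(x) = 1 - x \leq 0$, so $p^* = 1$ at $x^* = 1$.

1. Minimizing $L(x, \lambda) = x^2 + \lambda(1 - x)$ over $x$ gives $x = \lambda/2$.
2. Substituting back gives $G(\lambda) = \lambda - \lambda^2/4$.

The sketch checks $G(\lambda) \leq p^*$ on a grid of $\lambda \geq 0$. It then finds that the best dual value matches $p^*$; Slater's condition holds here, e.g., at $x = 2$.

```python
def dual_function(lam):
    # G(lambda) = inf_x [x^2 + lambda * (1 - x)], attained at x = lambda / 2
    x = lam / 2
    return x ** 2 + lam * (1 - x)


p_star = 1.0  # primal optimum, attained at x* = 1
grid = [k / 100 for k in range(0, 1001)]  # lambda in [0, 10]
values = [dual_function(lam) for lam in grid]

# weak duality: every dual value is a lower bound on p*
assert all(v <= p_star + 1e-12 for v in values)

d_star = max(values)
lam_star = grid[values.index(d_star)]
print(lam_star, d_star)  # 2.0 1.0 -> zero duality gap
```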

[7] describes two ways to solve the constrained optimization problem of the SVM (Sections 50.6 and 50.10): one uses the KKT conditions, and the other solves the dual problem.

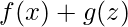

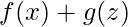

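The dual problem simplifies when the primal problem has only affine equality constraints (here $y$ plays the role of the multiplier $\nu$ above, following the notation in [4]):

Primal problem:

minimize $f(x)$

subject to $Ax = b$

Dual problem:

maximize $G(y) = \inf_x L(x, y) = \inf_x \left( f(x) + y^\top (Ax - b) \right)$

subject to no constraints ($y$ ranges over all of $\mathbb{R}^m$)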

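Since the dual problem is an unconstrained optimization problem, we can use dual ascent to solve it easily:

$x^{k+1} = \arg\min_x L(x, y^k)$,

$y^{k+1} = y^k + \alpha^k (Ax^{k+1} - b)$,

where $\alpha^k > 0$ is a step size. The $y$-update is gradient ascent on $G$, because $Ax^{k+1} - b$ is the gradient of $G$ at $y^k$ (when the minimizer $x^{k+1}$ is unique).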
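Here is a minimal dual ascent sketch on a toy problem I picked so that the $x$-update has a closed form. The problem is to minimize $f(x) = \frac{1}{2}(p_1 x_1^2 + p_2 x_2^2)$ with $p_i > 0$, subject to the single affine constraint $a^\top x = b$. Setting $\nabla_x L = 0$ gives $x_i = -y a_i / p_i$. The step size $\alpha = 0.5$ is an arbitrary choice that happens to be small enough for this instance.

```python
def dual_ascent(p, a, b, alpha=0.5, iters=100):
    """Dual ascent for: minimize 0.5 * sum(p_i * x_i^2)  s.t.  sum(a_i * x_i) = b."""
    y = 0.0
    x = [0.0] * len(p)
    for _ in range(iters):
        # x-update: argmin_x L(x, y) = 0.5 * sum(p_i x_i^2) + y * (a^T x - b)
        x = [-y * ai / pi for ai, pi in zip(a, p)]
        # y-update: gradient ascent step on the dual, using the residual a^T x - b
        residual = sum(ai * xi for ai, xi in zip(a, x)) - b
        y = y + alpha * residual
    return x, y


x, y = dual_ascent(p=[1.0, 2.0], a=[1.0, 1.0], b=3.0)
print(x, y)  # approaches x = [2, 1], y = -2
```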

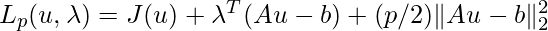

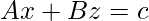

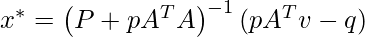

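The main flaw of dual ascent is that it converges only under fairly strong assumptions, for example that $f$ is strictly convex and finite. Otherwise the $x$-update can be unbounded and the method can fail [4]. The augmented Lagrangian method augments the Lagrangian with a quadratic penalty term:

$L_\rho(x, y) = f(x) + y^\top (Ax - b) + \frac{\rho}{2}\|Ax - b\|_2^2$,

where $\rho > 0$ is the penalty parameter. The penalty term is zero for any feasible $x$, so it does not change the solution. According to [4], its benefit is that the corresponding dual function can be shown to be differentiable under rather mild conditions on the original problem.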

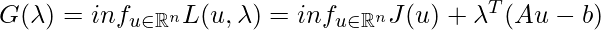

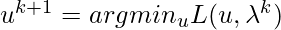

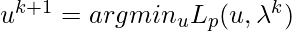

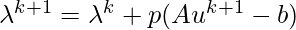

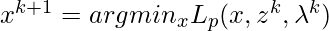

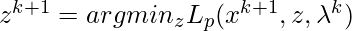

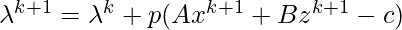

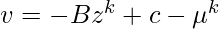

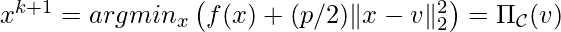

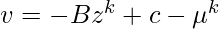

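The method of multipliers is dual ascent applied to the augmented Lagrangian, using the penalty parameter $\rho$ as the step size:

$x^{k+1} = \arg\min_x L_\rho(x, y^k)$,

$y^{k+1} = y^k + \rho (Ax^{k+1} - b)$.

According to [4], it converges under far more general conditions than dual ascent, including cases where $f$ takes the value $+\infty$ or is not strictly convex.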
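Here is a tiny illustration, written as a sketch under simple assumptions: take the linear objective $f(x) = c\,x$ (not strictly convex) with the constraint $x = b$. Plain dual ascent breaks here, because $\min_x\, c\,x + y(x - b)$ is unbounded unless $y = -c$. With the penalty term, the $x$-update has the closed form $x = b - (c + y)/\rho$, and the method of multipliers recovers $x^* = b$, $y^* = -c$. The values of $c$, $b$, and $\rho$ are arbitrary.

```python
def method_of_multipliers(c, b, rho=1.0, iters=10):
    """Method of multipliers for: minimize c * x  subject to  x = b (scalar)."""
    y = 0.0
    x = 0.0
    for _ in range(iters):
        # x-update: argmin_x  c*x + y*(x - b) + (rho/2)*(x - b)^2,
        # obtained by setting the derivative c + y + rho*(x - b) to zero
        x = b - (c + y) / rho
        # dual update with step size rho
        y = y + rho * (x - b)
    return x, y


print(method_of_multipliers(c=3.0, b=2.0, rho=2.0))  # (2.0, -3.0)
```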

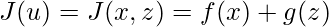

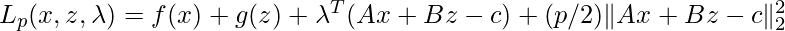

Suppose the variable can be split into two parts $x$ and $z$ such that the objective is separable, $f(x) + g(z)$. Then we can update $x$, $z$, and $y$ separately in an alternating fashion. Such a method is called the Alternating Direction Method of Multipliers (ADMM):

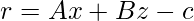

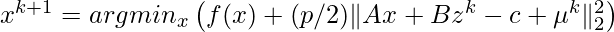

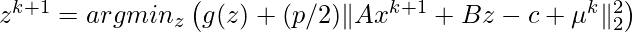

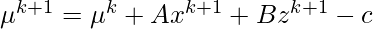

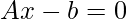

Primal problem:

minimize $f(x) + g(z)$

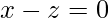

subject to $Ax + Bz = c$

The augmented Lagrangian:

$L_\rho(x, z, y) = f(x) + g(z) + y^\top (Ax + Bz - c) + \frac{\rho}{2}\|Ax + Bz - c\|_2^2$

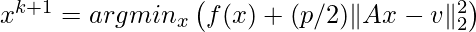

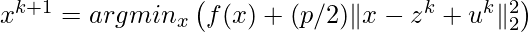

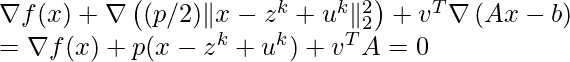

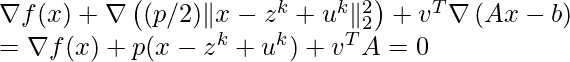

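Updates (note that we do not minimize jointly, $(x^{k+1}, z^{k+1}) = \arg\min_{x, z} L_\rho(x, z, y^k)$, as in the method of multipliers; instead, $x$ and $z$ are updated one after the other):

$x^{k+1} = \arg\min_x L_\rho(x, z^k, y^k)$

$z^{k+1} = \arg\min_z L_\rho(x^{k+1}, z, y^k)$

$y^{k+1} = y^k + \rho (Ax^{k+1} + Bz^{k+1} - c)$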

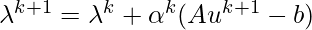

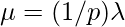

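Define the residual $r = Ax + Bz - c$ and the scaled dual variable $u = \frac{1}{\rho} y$. Since $y^\top r + \frac{\rho}{2}\|r\|_2^2 = \frac{\rho}{2}\|r + u\|_2^2 - \frac{\rho}{2}\|u\|_2^2$, and the last term depends on neither $x$ nor $z$, the updates can be simplified to the scaled form:

$x^{k+1} = \arg\min_x \left( f(x) + \frac{\rho}{2}\|Ax + Bz^k - c + u^k\|_2^2 \right)$

$z^{k+1} = \arg\min_z \left( g(z) + \frac{\rho}{2}\|Ax^{k+1} + Bz - c + u^k\|_2^2 \right)$

$u^{k+1} = u^k + Ax^{k+1} + Bz^{k+1} - c$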

Structure in $f$, $g$, $A$, and $B$ can often be exploited to carry out the $x$-minimization and the $z$-minimization more efficiently. We now look at several examples.

Example 1: if $A$ is an identity matrix, $v = -Bz^k + c - u^k$, and $f$ is the indicator function of a closed nonempty convex set $\mathcal{C}$ (i.e., $f(x) = 0$ for $x \in \mathcal{C}$ and $+\infty$ otherwise), then $x^{k+1} = \arg\min_x \left( f(x) + \frac{\rho}{2}\|x - v\|_2^2 \right) = \Pi_{\mathcal{C}}(v)$, which means $x^{k+1}$ is the projection of $v$ onto $\mathcal{C}$.
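Here is a sketch of Example 1 in action, on a toy problem I chose because its answer is known in closed form: minimize $\frac{1}{2}\|z - a\|_2^2$ subject to $x \in [\text{lo}, \text{hi}]^n$ and $x - z = 0$. In ADMM terms:

- $f$ is the indicator of a box, so the projection is element-wise clipping.
- $g(z) = \frac{1}{2}\|z - a\|_2^2$.
- $A = I$, $B = -I$, and $c = 0$, hence $v = z^k - u^k$.

Setting the gradient of the $z$-objective to zero gives $z = (a + \rho(x + u))/(1 + \rho)$. The solution should be $a$ clipped to the box. The choices $\rho = 1$ and the iteration count are arbitrary.

```python
def project_box(v, lo, hi):
    # Euclidean projection onto the box [lo, hi]^n is element-wise clipping
    return [min(max(vi, lo), hi) for vi in v]


def admm_box(a, lo, hi, rho=1.0, iters=500):
    """Scaled-form ADMM for: minimize 0.5*||z - a||^2  s.t.  x in [lo, hi]^n,  x - z = 0."""
    n = len(a)
    x, z, u = [0.0] * n, [0.0] * n, [0.0] * n
    for _ in range(iters):
        # x-update (Example 1): project v = z - u onto the box
        x = project_box([zi - ui for zi, ui in zip(z, u)], lo, hi)
        # z-update: closed-form minimizer of 0.5*||z - a||^2 + (rho/2)*||x - z + u||^2
        z = [(ai + rho * (xi + ui)) / (1 + rho) for ai, xi, ui in zip(a, x, u)]
        # scaled dual update: u += x - z
        u = [ui + xi - zi for ui, xi, zi in zip(u, x, z)]
    return x, z


x, z = admm_box([-2.0, 0.3, 5.0], lo=0.0, hi=1.0)
print(x, z)  # both approach [0.0, 0.3, 1.0]
```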

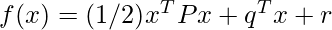

Example 2: if  and

and  , then

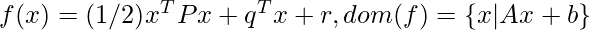

, then  becomes an unconstrained quadratic programming with the analytic solution at

becomes an unconstrained quadratic programming with the analytic solution at  .

.
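To check the closed form of Example 2, here is a 2-D sketch with arbitrary illustrative values for $P$, $q$, $A$, $v$, and $\rho$. It computes $x = (P + \rho A^\top A)^{-1}(\rho A^\top v - q)$ with Cramer's rule, then confirms that the gradient $Px + q + \rho A^\top (Ax - v)$ vanishes there. A real implementation would use a linear-algebra library and cache a factorization across iterations; that is beyond this sketch.

```python
def solve2(M, r):
    """Solve the 2x2 linear system M x = r by Cramer's rule."""
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [(r[0] * M[1][1] - M[0][1] * r[1]) / det,
            (M[0][0] * r[1] - r[0] * M[1][0]) / det]


def x_update_quadratic(P, q, A, v, rho):
    """x = (P + rho A^T A)^{-1} (rho A^T v - q) for 2x2 P, A and 2-vectors q, v."""
    AtA = [[sum(A[k][i] * A[k][j] for k in range(2)) for j in range(2)] for i in range(2)]
    Atv = [sum(A[k][i] * v[k] for k in range(2)) for i in range(2)]
    M = [[P[i][j] + rho * AtA[i][j] for j in range(2)] for i in range(2)]
    rhs = [rho * Atv[i] - q[i] for i in range(2)]
    return solve2(M, rhs)


def grad_objective(P, q, A, v, rho, x):
    """Gradient of f(x) + (rho/2)||Ax - v||^2, i.e. Px + q + rho A^T (Ax - v)."""
    Ax_minus_v = [sum(A[i][j] * x[j] for j in range(2)) - v[i] for i in range(2)]
    return [sum(P[i][j] * x[j] for j in range(2)) + q[i]
            + rho * sum(A[k][i] * Ax_minus_v[k] for k in range(2)) for i in range(2)]


P = [[2.0, 0.0], [0.0, 1.0]]
q = [1.0, -1.0]
A = [[1.0, 1.0], [0.0, 1.0]]
v = [1.0, 2.0]
rho = 1.0
x_plus = x_update_quadratic(P, q, A, v, rho)
print(x_plus)                                   # [-0.5, 1.5]
print(grad_objective(P, q, A, v, rho, x_plus))  # both entries are (numerically) zero
```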

Example 3: if $f(x) = \frac{1}{2}x^\top P x + q^\top x$ and $x$ must satisfy both $Ax = b$ and $x \geq 0$ (the quadratic program in Section 5.2 [4]), then the ADMM primal problem becomes:

minimize $f(x) + g(z)$ (where $\text{dom} f = \{x \mid Ax = b\}$ and $g$ is the indicator function of the nonnegative orthant $\mathbb{R}^n_+$)

subject to $x - z = 0$

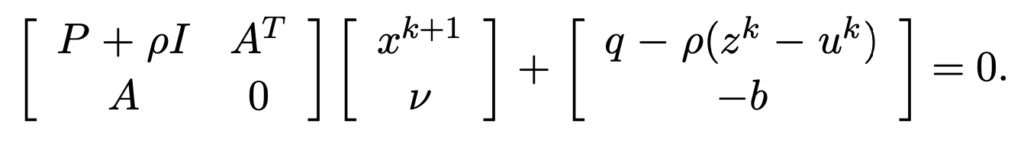

Then the $z$-update is simply the projection $z^{k+1} = (x^{k+1} + u^k)_+$ onto the nonnegative orthant. The $x$-update is $x^{k+1} = \arg\min_{x:\, Ax = b} \left( \frac{1}{2}x^\top P x + q^\top x + \frac{\rho}{2}\|x - z^k + u^k\|_2^2 \right)$. This is an equality-constrained quadratic program, so we solve it via its KKT conditions. Suppose the Lagrange multiplier is $\nu$; then the KKT conditions state that:

$Px^{k+1} + q + \rho(x^{k+1} - z^k + u^k) + A^\top \nu = 0$,

$Ax^{k+1} = b$,

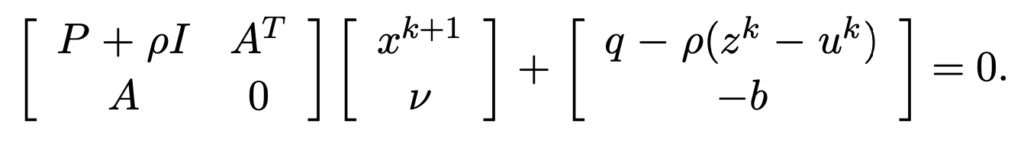

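which is exactly the linear system in matrix form given in Section 5.2 [4]:

$$\begin{bmatrix} P + \rho I & A^\top \\ A & 0 \end{bmatrix} \begin{bmatrix} x^{k+1} \\ \nu \end{bmatrix} + \begin{bmatrix} q - \rho(z^k - u^k) \\ -b \end{bmatrix} = 0$$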

(As a side note, an equality-constrained quadratic program is usually solved through its KKT conditions, which can be written as a linear system in matrix form: https://www.math.uh.edu/~rohop/fall_06/Chapter3.pdf)

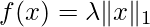

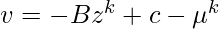

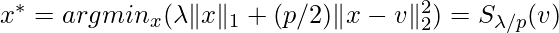
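Putting Example 3 together, here is a small pure-Python sketch of ADMM for this quadratic program. It makes three assumptions:

- The problem data is a toy instance of my own: minimize $\frac{1}{2}\|x\|_2^2 + 2x_1$ subject to $x_1 + x_2 = 1$ and $x \geq 0$. Along the line $x_1 + x_2 = 1$, the objective has derivative $2x_1 + 1 > 0$ with respect to $x_1$ for $x_1 \geq 0$, so the solution is $x^* = (0, 1)$.
- The KKT system is solved by naive Gaussian elimination in every iteration instead of reusing a cached factorization.
- $\rho = 1$ is arbitrary.

```python
def solve_linear(M, r):
    """Solve M w = r by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(M)
    aug = [list(row) + [ri] for row, ri in zip(M, r)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(aug[i][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for i in range(col + 1, n):
            factor = aug[i][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[i][j] -= factor * aug[col][j]
    w = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * w[j] for j in range(i + 1, n))
        w[i] = (aug[i][n] - s) / aug[i][i]
    return w


def admm_qp(P, q, A, b, rho=1.0, iters=500):
    """Scaled ADMM for: minimize 0.5 x^T P x + q^T x  s.t.  A x = b,  x >= 0."""
    n, m = len(q), len(b)
    # KKT matrix [[P + rho I, A^T], [A, 0]] is the same in every iteration
    K = [[P[i][j] + (rho if i == j else 0.0) for j in range(n)] + [A[k][i] for k in range(m)]
         for i in range(n)]
    K += [list(A[k]) + [0.0] * m for k in range(m)]
    x, z, u = [0.0] * n, [0.0] * n, [0.0] * n
    for _ in range(iters):
        # x-update: solve K [x; nu] = [rho (z - u) - q; b] and keep the x part
        rhs = [rho * (z[i] - u[i]) - q[i] for i in range(n)] + list(b)
        x = solve_linear(K, rhs)[:n]
        # z-update: projection of x + u onto the nonnegative orthant
        z = [max(x[i] + u[i], 0.0) for i in range(n)]
        # scaled dual update
        u = [u[i] + x[i] - z[i] for i in range(n)]
    return x, z


x, z = admm_qp(P=[[1.0, 0.0], [0.0, 1.0]], q=[2.0, 0.0], A=[[1.0, 1.0]], b=[1.0])
print(x, z)  # both approach [0.0, 1.0]
```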

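Example 4 (Section 4.4.3 in [4]): if $f(x) = \lambda \|x\|_1$, $A = I$, and $v = -Bz^k + c - u^k$, then $x^{k+1} = S_{\lambda/\rho}(v)$, where $S_\kappa$ is the soft thresholding operator applied element-wise: $S_\kappa(a) = a - \kappa$ if $a > \kappa$, $0$ if $|a| \leq \kappa$, and $a + \kappa$ if $a < -\kappa$.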
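Here is a sketch of soft thresholding inside ADMM, on a toy problem I picked because its solution is known. The problem is to minimize $\frac{1}{2}\|x - a\|_2^2 + \lambda\|z\|_1$ subject to $x - z = 0$, a lasso whose design matrix is the identity. Here the $\ell_1$ term sits in $g$, so the soft thresholding appears in the $z$-update. This is the same computation as Example 4 with the roles of $x$ and $z$ swapped. The exact solution is $S_\lambda(a)$ applied element-wise, so the ADMM iterates can be compared against it. The choice $\rho = 1$ and the data are arbitrary.

```python
def soft_threshold(a, kappa):
    """S_kappa(a): shrink a toward zero by kappa, returning 0 inside [-kappa, kappa]."""
    if a > kappa:
        return a - kappa
    if a < -kappa:
        return a + kappa
    return 0.0


def admm_l1(a, lam, rho=1.0, iters=500):
    """Scaled ADMM for: minimize 0.5*||x - a||^2 + lam*||z||_1  s.t.  x - z = 0."""
    n = len(a)
    x, z, u = [0.0] * n, [0.0] * n, [0.0] * n
    for _ in range(iters):
        # x-update: minimizer of 0.5*||x - a||^2 + (rho/2)*||x - z + u||^2
        x = [(ai + rho * (zi - ui)) / (1 + rho) for ai, zi, ui in zip(a, z, u)]
        # z-update: element-wise soft thresholding with threshold lam / rho
        z = [soft_threshold(xi + ui, lam / rho) for xi, ui in zip(x, u)]
        # scaled dual update
        u = [ui + xi - zi for ui, xi, zi in zip(u, x, z)]
    return z


a = [3.0, -0.5, -2.0]
print(admm_l1(a, lam=1.0))                    # approaches [2.0, 0.0, -1.0]
print([soft_threshold(ai, 1.0) for ai in a])  # [2.0, 0.0, -1.0]
```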

References

[1] On Systems and Algorithms for Distributed Machine Learning: https://www2.eecs.berkeley.edu/Pubs/TechRpts/2019/EECS-2019-30.pdf

[2] Compare between parameter servers and ring allreduce: https://harvest.usask.ca/bitstream/handle/10388/12390/CHOWDHURY-THESIS-2019.pdf?sequence=1&isAllowed=y

[3] Overview of optimization: https://czxttkl.com/2016/02/22/optimization-overview/

[4] ADMM tutorial: https://web.stanford.edu/~boyd/papers/pdf/admm_distr_stats.pdf

[5] https://tech.preferred.jp/en/blog/technologies-behind-distributed-deep-learning-allreduce/

[6] https://towardsdatascience.com/visual-intuition-on-ring-allreduce-for-distributed-deep-learning-d1f34b4911da

[7] Algebra, Topology, Differential Calculus, and Optimization Theory For Computer Science and Machine Learning https://www.cis.upenn.edu/~jean/math-deep.pdf

[8] https://math.stackexchange.com/a/2774285/235140

[9] http://sites.science.oregonstate.edu/math/home/programs/undergrad/CalculusQuestStudyGuides/vcalc/grad/grad.html

[10] https://math.stackexchange.com/a/661220/235140

[11] https://en.wikipedia.org/wiki/Karush%E2%80%93Kuhn%E2%80%93Tucker_conditions#Necessary_conditions

[12] https://en.wikipedia.org/wiki/Karush%E2%80%93Kuhn%E2%80%93Tucker_conditions#Sufficient_conditions

[13] http://cs229.stanford.edu/notes/cs229-notes3.pdf

[14] https://en.wikipedia.org/wiki/Slater%27s_condition

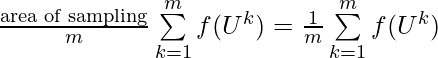

![]() by Monte Carlo samples, we can instead evaluate

by Monte Carlo samples, we can instead evaluate ![]() with

with ![]() in order to reduce variance. The requirement for control variate to work is that

in order to reduce variance. The requirement for control variate to work is that ![]() is correlated with

is correlated with ![]() and the mean of

and the mean of ![]() is known.

is known. ![]() is picked as the Taylor expansion of

is picked as the Taylor expansion of ![]() . We know that

. We know that ![]() ‘s Taylor expansion can be expressed as

‘s Taylor expansion can be expressed as ![]() . Therefore, if we pick the second order of Taylor expansion for

. Therefore, if we pick the second order of Taylor expansion for ![]() and set

and set ![]() , we get

, we get ![]() .

. ![]() and we want to evaluate the integral

and we want to evaluate the integral ![]() . If we want to use Monte Carlo estimator to estimate

. If we want to use Monte Carlo estimator to estimate ![]() using

using ![]() samples, we have:

samples, we have:

![]() is

is ![]() (we use multi-variable Taylor expansion here [7]). We first compute its mean:

(we use multi-variable Taylor expansion here [7]). We first compute its mean: ![]() . Thus, our control variate Monto Carlo estimator is:

. Thus, our control variate Monto Carlo estimator is:![Rendered by QuickLaTeX.com \frac{1}{m}\sum\limits_{k=1}^{m} z(u_1, u_2)\newline=\frac{1}{m}\sum\limits_{k=1}^{m} \left[f(u_1, u_2) -h(u_1, u_2) + \theta\right]\newline=\frac{1}{m}\sum\limits_{k=1}^{m} \left[exp[(u_1^2 + u_2^2)/2] - 1 - (u_1^2 + u_2^2)/2 + 4/3 \right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-cefe400b6090655af28e4c28735815f6_l3.png) .

.![]() is smaller than

is smaller than ![]() .

.

![]()

![]() to approximate

to approximate ![]() , which causes variance. If we have a control variate

, which causes variance. If we have a control variate ![]() and

and ![]() can be chosen at any value but a sensible choice being

can be chosen at any value but a sensible choice being ![]() , a deterministic version of

, a deterministic version of ![]() , then the control variate version of policy gradient can be written as (Eqn. 7 in [2]):

, then the control variate version of policy gradient can be written as (Eqn. 7 in [2]):![Rendered by QuickLaTeX.com \nabla_\theta log \pi_\theta(a_t | s_t) R_t - h(s_t, a_t) + \mathbb{E}_{s\sim \rho_\pi, a \sim \pi}\left[ h(s_t, a_t)\right] \newline=\nabla_\theta log \pi_\theta(a_t | s_t) R_t - h(s_t, a_t) + \mathbb{E}_{s \sim \rho_\pi}\left[ \nabla_a Q_w(s_t, a)|_{a=\mu_\theta(s_t)} \nabla_\theta \mu_\theta(s_t)\right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-9629b8ff4fc66089624196ff0d65190c_l3.png)

![]() is some critic with known analytic expression, and actually does not depend on

is some critic with known analytic expression, and actually does not depend on ![]() .

. ![]() , variance of actions

, variance of actions ![]() , and variance of trajectories

, and variance of trajectories ![]() . What [3] highlights is that the magnitude of

. What [3] highlights is that the magnitude of ![]() is usually greatly smaller than the rest two so the benefit of using control variate-based policy gradient is very limited.

is usually greatly smaller than the rest two so the benefit of using control variate-based policy gradient is very limited.

, where

, where

![Rendered by QuickLaTeX.com \[K(\mathbf{x}, \mathbf{y})=exp\left(-\frac{\|\mathbf{x}-\mathbf{y}\|^2}{2\sigma^2}\right),\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-b79b831a52476a21c48ca2567248e86f_l3.png)

![Rendered by QuickLaTeX.com \[f(t)=(g*h)(t)=\int^\infty_{-\infty}g(\tau)h(t-\tau)d\tau\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-237cc2257376e03764228a073433bafd_l3.png)

![Rendered by QuickLaTeX.com \[\hat{f}(\xi)=\int^{\infty}_{-\infty}f(t)e^{-2\pi i t \xi} dt\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-bdcf4fbf82c13fb6b7e8c2a58a4ae12d_l3.png)

![Rendered by QuickLaTeX.com \[f(t)=\int^\infty_{-\infty}\hat{f}(\xi)e^{2\pi i t \xi} d\xi\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-1c2eeeb000305a965a637e37ca7f52a0_l3.png)

![Rendered by QuickLaTeX.com (g*h)(t)=\int^\infty_{-\infty}g(\tau)h(t-\tau)d\tau \newline=\int^\infty_{-\infty} g(\tau)\left[\int^\infty_{-\infty} \hat{h}(\xi) 2^{\pi i (t-\tau) \xi}d\xi\right]d\tau \newline=\int^\infty_{-\infty} \hat{h}(\xi) \left[\int^\infty_{-\infty} g(\tau) 2^{\pi i (t-\tau) \xi}d\xi\right]d\tau \quad \quad \text{swap } g(\tau)\text{ and }\hat{h}(\xi)\newline=\int^\infty_{-\infty} \hat{h}(\xi) \left[\int^\infty_{-\infty} g(\tau) 2^{-\pi i \tau \xi} d\tau\right] 2^{\pi i t \xi} d\xi\newline=\int^\infty_{-\infty} \hat{h}(\xi) \hat{g}(\xi) 2^{\pi i t \xi} d\xi \newline = IFT\left[\hat{h}(\xi) \hat{g}(\xi)\right] \quad\quad Q.E.D.](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-1771258be13b140b77c42f636647fb99_l3.png)

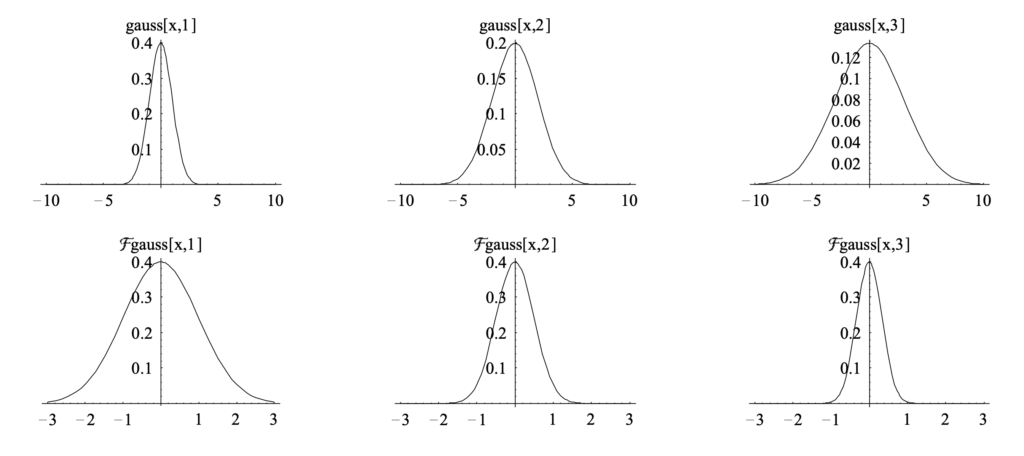

We know that its fourier transform is (

We know that its fourier transform is (

![Rendered by QuickLaTeX.com \theta^* = argmin_\theta\; KL\left(q_\theta(z|x) || p(z|x) \right ) \newline= argmin_\theta \; \mathbb{E}_{q_\theta} [log\;q_\theta(z|x)] - \mathbb{E}_{q_\theta} [log\;p(z,x)]+log\;p(x)](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-1acb87f9932eec25d3692cac471a68aa_l3.png)

![Rendered by QuickLaTeX.com ELBO(\theta) \newline= \mathbb{E}_{q_\theta} [log\;p(z,x)] - \mathbb{E}_{q_\theta} [log\;q_\theta(z|x)]\newline=\mathbb{E}_{q_\theta}[log\;p(x|z)] + \mathbb{E}_{q_\theta}[log\;p(z)] - \mathbb{E}_{q_\theta}[log\;q_\theta(z|x)] \quad\quad p(z) \text{ is the prior of } z \newline= \mathbb{E}_{q_\theta}[log\;p(x|z)] - \mathbb{E}_{q_\theta} [log \frac{q_{\theta}(z|x)}{p(z)}]\newline=\mathbb{E}_{q_\theta}[log\;p(x|z)] - KL\left(q_\theta(z|x) || p(z)\right)](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-8e4bcee52335d51120c9eb549e33fe95_l3.png)

![Rendered by QuickLaTeX.com \begin{align*}&\nabla_\theta \left\{\mathbb{E}_{q_\theta}[log\;p_\phi(x|z)] - KL\left(q_\theta(z|x) || p(z)\right)\right\}\\=&\nabla_\theta \left\{\mathbb{E}_{q_\theta}\left[log\;p_\phi(x, z) - \log q_\theta(z|x)\right] \right\} \quad\quad \text{ rewrite KL divergence} \\ =&\nabla_\theta \; \int q_\theta(z|x) \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right]dz \\=& \int \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right]\nabla_\theta q_\theta(z|x) dz + \int q_\theta(z|x) \nabla_\theta \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right] dz \\=& \mathbb{E}_{q_\theta}\left[ \left(log\;p_\phi(x, z) - \log q_\theta(z|x) \right) \nabla_\theta \log q_\theta(z|x) \right] + \mathbb{E}_{q_\theta}\left[\nabla_\theta \log p_\phi(x, z)\right] + \mathbb{E}_{q_\theta}\left[ \nabla_\theta \log q_\theta(z|x) \right] \\&\text{--- The second term is zero because no }\theta \text{ in } \log p_\phi(x,z) \\&\text{--- The third term being zero is a common trick. See Eqn. 5 in [1]} \\=& \mathbb{E}_{q_\theta}\left[ \left(log\;p_\phi(x, z) - \log q_\theta(z|x) \right) \nabla_\theta \log q_\theta(z|x) \right]\end{align*}](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-ab36fc8bea2fc218505d19ee6e54e94c_l3.png)

![Rendered by QuickLaTeX.com \begin{align*}&\nabla_\theta \left\{\mathbb{E}_{q_\theta}[log\;p_\phi(x|z)] - KL\left(q_\theta(z|x) || p(z)\right)\right\}\\=&\nabla_\theta \left\{\mathbb{E}_{q_\theta}\left[log\;p_\phi(x, z) - \log q_\theta(z|x)\right] \right\} \quad\quad \text{ rewrite KL divergence} \\=&\nabla_\theta \; \int q_\theta(z|x) \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right]dz \\=&\nabla_\theta \; \int p(\epsilon) \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right]d\epsilon \quad\quad \\&\text{--- Above uses the property of changing variables in probability density functions.} \\&\text{--- See discussion in [10, 11]} \\=& \int p(\epsilon) \nabla_\theta \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right]d\epsilon \\=& \int p(\epsilon) \nabla_z \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right] \nabla_\theta z d\epsilon \\=& \mathbb{E}_{p(\epsilon)} \left[ \nabla_z \left[log\;p_\phi(x, z) - \log q_\theta(z|x) \right] \nabla_\theta f_\theta(x) \right]\end{align*}](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-4fafc4bcaae654c035173052feaa8981_l3.png)

![Rendered by QuickLaTeX.com \mathbb{E}_{p(x)}[f(x)]\approx \frac{1}{N}\sum\limits_{i=1}^Nf(x_i)](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-6cea83b1e26813296576c469c343b9b0_l3.png)

![Rendered by QuickLaTeX.com \mathbb{E}_{p(x)}[z(x)]\newline=\mathbb{E}_{p(x)}[f(x)]-\mathbb{E}_{p(x)}[h(x)] + \theta\newline=\mathbb{E}_{p(x)}[f(x)]-\theta + \theta\newline=\mathbb{E}_{p(x)}[f(x)]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-119a2e3969f356586cea4c664bcffc85_l3.png)

![Rendered by QuickLaTeX.com Var_{p(x)}[z(x)] \newline = Var_{p(x)}\left[f(x) - h(x)+\theta\right] \newline = Var_{p(x)}\left[f(x)-h(x)\right] \quad \quad \text{constant doesn't contribute to variance}\newline=\mathbb{E}_{p(x)}\left[\left(f(x)-h(x)-\mathbb{E}_{p(x)}\left[f(x)-h(x)\right] \right)^2\right] \quad\quad Var(x)=\mathbb{E}[(x-\mathbb{E}(x))^2] \newline=\mathbb{E}_{p(x)}\left[\left( f(x)-\mathbb{E}_{p(x)}[f(x)] - \left(h(x)-\mathbb{E}_{p(x)}[h(x)]\right) \right)^2\right]\newline=\mathbb{E}_{p(x)}\left[\left(f(x)-\mathbb{E}_{p(x)}[f(x)]\right)^2\right] + \mathbb{E}_{p(x)}\left[\left(h(x)-\mathbb{E}_{p(x)}[h(x)]\right)^2\right] \newline - 2 * \mathbb{E}_{p(x)}\left[f(x)-\mathbb{E}_{p(x)}[f(x)]\right] * \mathbb{E}_{p(x)}\left[h(x)-\mathbb{E}_{p(x)}[h(x)]\right]\newline=Var_{p(x)}\left[f(x)\right]+Var_{p(x)}\left[h(x)\right] - 2 * Cov_{p(x)}\left[f(x), h(x)\right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-0ccdbafc7e844065190f08449216de2b_l3.png)

![Rendered by QuickLaTeX.com Var_{p(x)}[z(x)] \newline=Var_{p(x)}\left[f(x)\right]+c^2 \cdot Var_{p(x)}\left[h(x)\right] + 2c * Cov_{p(x)}\left[f(x), h(x)\right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-17bc7bdff9ac5e1ae313fbe74320b19d_l3.png) ,

,![Rendered by QuickLaTeX.com Var^*_{p(x)}[z(x)] \newline=Var_{p(x)}\left[f(x)\right] - \frac{\left(Cov_{p(x)}\left[f(x), h(x)\right] \right)^2}{Var_{p(x)}[h(x)]}\newline=\left(1-\left(Corr_{p(x)}\left[f(x),h(x)\right]\right)^2\right)Var_{p(x)}\left[f(x)\right] \quad\quad Corr(x,y)=Cov(x,y)/stdev(x)stdev(y)](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-397f1651dac767c2187126a6a9a18978_l3.png)

![Rendered by QuickLaTeX.com Var_{p(x)}[z(x)] \newline =Var_{p(x)}\left[f(x)\right]+Var_{p(x)}\left[h(x)\right] - 2 * Cov_{p(x)}\left[f(x), h(x)\right]\newline =\frac{8000}{9} + \frac{25}{3}-\frac{2\cdot 250}{3}\newline=\frac{6575}{9}<Var_{p(x)}[f(x)]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-386cf1266b42bd8a666f8421d6b2e6c5_l3.png) .

.![Rendered by QuickLaTeX.com Var_{p(x)}[\bar{z}(x)]=Var_{p(x)}\left[\frac{\sum\limits_{i=1}^N z(x_i)}{N}\right]=N \cdot Var_{p(x)}\left[\frac{z(x)}{N}\right]=\frac{Var_{p(x)}\left[z(x)\right]}{N}](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-8689d39a37054b16c04ad196608819f2_l3.png) . This matches with our intuition that the more samples you average over, the less variance you have. However, the relative ratio of variance you can save by introducing

. This matches with our intuition that the more samples you average over, the less variance you have. However, the relative ratio of variance you can save by introducing

![Rendered by QuickLaTeX.com J(\theta)\newline=\mathbb{E}_{s,a \sim \pi_\theta} [Q^{\pi_\theta}(s,a)] \newline=\mathbb{E}_{s \sim \pi_\theta}[ \mathbb{E}_{a \sim \pi_\theta} [Q^{\pi_\theta}(s,a)]]\newline=\mathbb{E}_{s\sim\pi_\theta} [\int \pi_\theta(a|s) Q^{\pi_\theta}(s,a) da]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-f1469552882502e8a85fcd638aa96963_l3.png)

![Rendered by QuickLaTeX.com \nabla_{\theta} J(\theta) \newline= \mathbb{E}_{s\sim\pi_\theta} [\int \nabla \pi_\theta(a|s) Q^{\pi_\theta}(s,a) da] \quad\quad \text{Treat } Q^{\pi_\theta}(s,a) \text{ as non-differentiable} \newline= \mathbb{E}_{s\sim\pi_\theta} [\int \pi_\theta(a|s) \frac{\nabla \pi_\theta(a|s)}{\pi_\theta(a|s)} Q^{\pi_\theta}(s,a) da] \newline= \mathbb{E}_{s, a \sim \pi_\theta} [Q^{\pi_\theta}(s,a) \nabla_\theta log \pi_\theta(a|s)] \newline \approx \frac{1}{N} [G_t \nabla_\theta log \pi_\theta(a_t|s_t)] \quad \quad s_t, a_t \sim \pi_\theta](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-f73caef052359dd2ab0ff34a060164b9_l3.png)

![Rendered by QuickLaTeX.com J(\theta)\newline=\mathbb{E}_{s\sim\pi_b}\left[\mathbb{E}_{a \sim \pi_\theta} [Q^{\pi_\theta}(s,a)] \right] \newline=\mathbb{E}_{s,a \sim \pi_b} [\frac{\pi_\theta(a|s)}{\pi_b(a|s)}Q^{\pi_b}(s,a)]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-99250c6e14614db890a3fe8e8487be7e_l3.png)

![Rendered by QuickLaTeX.com \nabla_{\theta} J(\theta) \newline=\mathbb{E}_{s,a \sim \pi_b} [\frac{\nabla_\theta \pi_\theta(a|s)}{\pi_b(a|s)} Q^{\pi_b}(s,a)] \quad\quad \text{Again, treat } Q^{\pi_b}(s,a) \text{ as non-differentiable}\newline=\mathbb{E}_{s,a \sim \pi_b} [\frac{\pi_\theta(a|s)}{\pi_b(a|s)} Q^{\pi_b}(s,a) \nabla_\theta log \pi_\theta(a|s)] \newline \approx \frac{1}{N}[\frac{\pi_\theta(a_t|s_t)}{\pi_b(a_t|s_t)} G_t \nabla_\theta log \pi_\theta(a_t|s_t)] \quad\quad s_t, a_t \sim \pi_b](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-6861dae8d1e302c9bcfc3d06cac970b0_l3.png)

![Rendered by QuickLaTeX.com \mathbb{E}_{s,a \sim \pi_b} \left[\frac{\pi_\theta(a|s)}{\pi_b(a|s)} b(s) \nabla_\theta log \pi_\theta(a|s)\right]\newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \cdot \mathbb{E}_{a \sim \pi_b}\left[\frac{\pi_\theta(a|s)}{\pi_b(a|s)} \nabla_\theta log \pi_\theta(a|s)\right]\right] \newline = \mathbb{E}_{s \sim \pi_b}\left[b(s) \int \pi_b(a|s) \nabla_\theta \frac{\pi_\theta(a|s)}{\pi_b(a|s)} da\right]\newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \int \nabla_\theta \pi_\theta(a|s) da\right]\newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \nabla_\theta \int \pi_\theta(a|s) da\right] \newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \nabla_\theta 1 \right] \newline=0](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-ccc9f1f74889590d36787146035b678d_l3.png)

![Rendered by QuickLaTeX.com \nabla_\theta \mathbb{E}_{\pi \sim p_\theta(\cdot|x)} \left[ \mathcal{L}_\pi(\theta)\right] \newline= \nabla_\theta \sum\limits_\pi p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\newline=\sum\limits_\pi \left[ \nabla_\theta p_\theta(\pi|x) \cdot \mathcal{L}_\pi(\theta)+p_\theta(\pi|x) \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=p_b(\pi|x) \cdot \sum\limits_\pi \left[ \frac{\nabla_\theta p_\theta(\pi|x)}{p_b(\pi|x)} \cdot \mathcal{L}_\pi(\theta)+\frac{p_\theta(\pi|x)}{p_b(\pi|x)} \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=\mathbb{E}_{\pi\sim p_b(\cdot|x)}\left[\frac{1}{p_b(\pi|x)}\nabla_\theta\left(p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\right)\right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-227f028e4d3bc7f11df8cb44f48f3e17_l3.png)

![Rendered by QuickLaTeX.com \nabla_\theta \mathbb{E}_{\pi \sim p_\theta(\cdot|x)} \left[ \mathcal{L}_\pi(\theta)\right] \newline= \nabla_\theta \sum\limits_\pi p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\newline=\sum\limits_\pi \left[ \nabla_\theta p_\theta(\pi|x) \cdot \mathcal{L}_\pi(\theta)+p_\theta(\pi|x) \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=p_\theta(\pi|x) \cdot \sum\limits_\pi \left[ \frac{\nabla_\theta p_\theta(\pi|x)}{p_\theta(\pi|x)} \cdot \mathcal{L}_\pi(\theta)+\frac{p_\theta(\pi|x)}{p_\theta(\pi|x)} \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=\mathbb{E}_{\pi\sim p_\theta(\cdot|x)}\left[\frac{1}{p_\theta(\pi|x)}\nabla_\theta\left(p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\right)\right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-907143278650354a5e362762565099f1_l3.png)

would have a monotonic trend:

would have a monotonic trend: