There are several terms often used in the Ads ML domain. Let’s dive into them.

total bid = ecpm bid + quality bid

ecpm bid = paced bid * ecvr

- ecvr is “expected conversion rate”. Different ads can have different definitions of conversion [8] – e.g., link click, app install, or offsite conversion. ecvr = p(conversion | impression) * position_discount

- paced bid = bid by advertiser * pacer, where pacer controls how fast the advertiser’s budget is spent

advertiser value is the same thing as ecpm bid [15]

position_discount is a function of position, page type, and the ad’s conversion type. Ads in the same request have the same page type. Note that position_discount may not decrease monotonically as the slot index increases.

ecpm price = 2nd ecpm bid + 2nd quality bid – 1st quality bid (we charge the runner-up’s ecpm bid plus the difference between quality bids). My interpretation is that ecpm price is what the platform (e.g., Meta) actually charges, whereas ecpm bid is what advertisers bid.

Price-to-value ratio (PVR) = ecpm price / ecpm bid
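To make the bid and price definitions above concrete, here is a minimal Python sketch. The `Bid` class, its field names, and the numbers are hypothetical and only mirror the formulas in this post; they are not the production implementation.

```python
from dataclasses import dataclass


@dataclass
class Bid:
    """Hypothetical container mirroring the terms defined above."""
    advertiser_bid: float      # bid set by the advertiser
    pacer: float               # pacing multiplier controlling budget spend speed
    p_conversion: float        # p(conversion | impression)
    position_discount: float   # depends on position, page type, conversion type
    quality_bid: float         # a.k.a. organic bid

    @property
    def paced_bid(self) -> float:
        return self.advertiser_bid * self.pacer

    @property
    def ecvr(self) -> float:
        return self.p_conversion * self.position_discount

    @property
    def ecpm_bid(self) -> float:
        return self.paced_bid * self.ecvr

    @property
    def total_bid(self) -> float:
        return self.ecpm_bid + self.quality_bid


def ecpm_price(winner: Bid, runner_up: Bid) -> float:
    # 2nd ecpm bid + 2nd quality bid - 1st quality bid
    return runner_up.ecpm_bid + runner_up.quality_bid - winner.quality_bid


def pvr(winner: Bid, runner_up: Bid) -> float:
    # price-to-value ratio = ecpm price / ecpm bid
    return ecpm_price(winner, runner_up) / winner.ecpm_bid


# Made-up numbers for illustration only.
winner = Bid(advertiser_bid=10.0, pacer=0.8, p_conversion=0.05,
             position_discount=1.0, quality_bid=0.1)
runner_up = Bid(advertiser_bid=5.0, pacer=1.0, p_conversion=0.06,
                position_discount=1.0, quality_bid=0.05)

print(winner.total_bid, runner_up.total_bid)
print(ecpm_price(winner, runner_up), pvr(winner, runner_up))
```

Because the winner’s total bid is at least the runner-up’s total bid, the ecpm price in this sketch never exceeds the winner’s ecpm bid, so PVR is at most 1.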

Billing Based Revenue BBR = sum over all impressions (ecpm price)

Note: the summation is over all impressions because most of the advertisers (> 95%) are billed by impression.

Event Based Revenue EBR

- EBR = sum over all conversions (paced bid * PVR)

- EBR = sum over all conversions (paced bid * ecpm price / ecpm bid)

- EBR = sum over all conversions (ecpm price / ecvr), since ecpm bid = paced bid * ecvr

- Difference between EBR and BBR [4]: BBR sums over all impressions, while EBR sums over all conversions. Since most advertisers are billed by impression, BBR is closer to real revenue (see “which is close to our actual business operation in practice” in [4]), while EBR is closer to what should be charged in expectation. As [4] shows, EBR and BBR are the same when ecvr is well calibrated.
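The relationship between BBR and EBR can be checked on a toy log. The sketch below assumes each impression is a dict with hypothetical keys `ecpm_price`, `ecvr`, and `converted`; the data is made up so that the realized conversion rate equals ecvr exactly.

```python
def bbr(impressions):
    """Billing Based Revenue: sum of ecpm price over all impressions."""
    return sum(imp["ecpm_price"] for imp in impressions)


def ebr(impressions):
    """Event Based Revenue: sum of ecpm price / ecvr over converted impressions."""
    return sum(imp["ecpm_price"] / imp["ecvr"]
               for imp in impressions if imp["converted"])


def make_log(ecvr, num_impressions=10, num_conversions=2, price=0.1):
    # Hypothetical log: the first `num_conversions` impressions convert.
    return [
        {"ecpm_price": price, "ecvr": ecvr, "converted": i < num_conversions}
        for i in range(num_impressions)
    ]


# Realized conversion rate is 2 / 10 = 0.2.
calibrated = make_log(ecvr=0.2)      # prediction matches reality
over_predicted = make_log(ecvr=0.4)  # prediction is twice the realized rate

print(bbr(calibrated), ebr(calibrated))          # both approximately 1.0
print(bbr(over_predicted), ebr(over_predicted))  # EBR drops to about 0.5
```

In the over-predicted log, BBR is unchanged because the same prices are billed per impression, while EBR is halved because each conversion is credited with price / ecvr and ecvr is too large.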

Estimated Ads Value = sum over all impressions (ecpm bid)

Realized Ads Value = sum over all conversions (paced bid)

Ads Score = Realized Ads Value + Quality Score

User Dollar Value (UDV) = P70(Aggregate of top eCPM bids of user’s last week’s requests) [2]

Quality bid is also known as organic bid. It contains several components like Report Bid, Xout Bid, Repetition Penalty bid, etc. [3]. [14] has a more detailed walkthrough of how each component is computed in quality bid. Let’s use one example, XOUT-Repetitive Bid.

xout_repetitive_bid = -udv * xout_normalization_factor * xout_repetitive_prediction, where xout_normalization_factor = 1 / avg(user's estimated xout rate on ads impressions)

When we report Ads Score, we need to compute the quality score. The xout-repetitive quality score differs from xout_repetitive_bid only in the last term: the bid uses the predicted value, while the quality score uses the realized value.

Xout-repetitive quality score = -udv * xout_normalization_factor * 1(user hides the ad with reason repetition)
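The two formulas above differ only in the last factor, which the following sketch makes explicit. Function names, argument names, and the numbers are hypothetical; only the formulas come from the definitions above.

```python
def xout_normalization_factor(estimated_xout_rates):
    """1 / avg(user's estimated xout rate on ads impressions)."""
    avg_rate = sum(estimated_xout_rates) / len(estimated_xout_rates)
    return 1.0 / avg_rate


def xout_repetitive_bid(udv, norm_factor, xout_repetitive_prediction):
    # Used in the auction: the last term is a model prediction.
    return -udv * norm_factor * xout_repetitive_prediction


def xout_repetitive_quality_score(udv, norm_factor, user_hid_for_repetition):
    # Used in Ads Score reporting: the last term is the realized 0/1 outcome.
    indicator = 1.0 if user_hid_for_repetition else 0.0
    return -udv * norm_factor * indicator


# Made-up numbers for illustration only.
factor = xout_normalization_factor([0.01, 0.03])  # average rate 0.02
bid = xout_repetitive_bid(udv=0.2, norm_factor=factor,
                          xout_repetitive_prediction=0.02)
score_hidden = xout_repetitive_quality_score(0.2, factor, True)
score_not_hidden = xout_repetitive_quality_score(0.2, factor, False)
print(factor, bid, score_hidden, score_not_hidden)
```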

Auction process

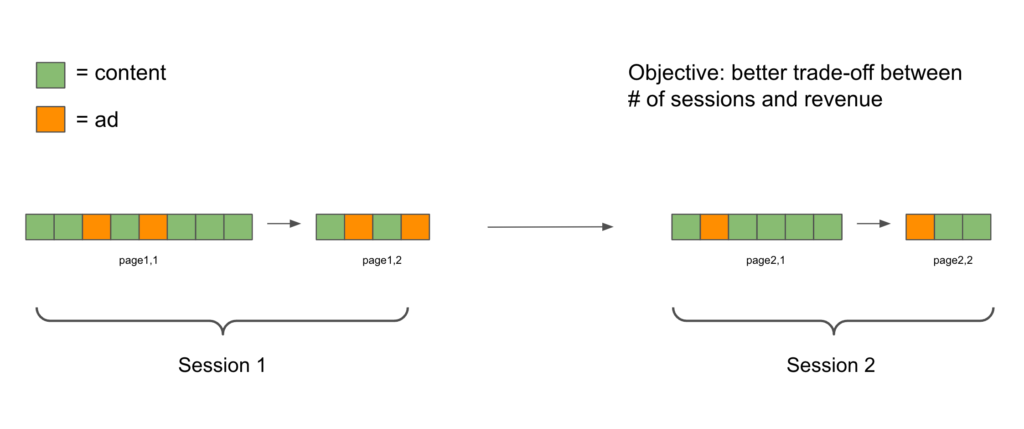

When a page request comes, the backend system needs to assemble a number of organic contents and ads. For ads, each page request only involves one set of ads (or, one set of advertisers, assuming each ad comes from a distinct advertiser). There are two ways to fill each slot with one of the ads:

- slot first greedy. Traverse each slot. For each slot, pick the ad with the highest total bid. Note that in the total bid, ecvr = p(conversion | impression) * position_discount

- bidder-slot pair greedy. For the same set of ads, compute their total bids on all possible slots. Because position_discount may not decrease monotonically with the slot, the highest total bid may belong to a slot other than the unfilled slot with the smallest index. Whenever we fill a slot, we remove the associated advertiser’s total bids on all slots and the other advertisers’ total bids on that slot. We then repeat with the remaining bids until all slots are filled.
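The two filling strategies above can be sketched as follows. This is a simplified illustration, assuming `total_bids[ad][slot]` is already computed and that each ad fills at most one slot; function names and numbers are hypothetical.

```python
def slot_first_greedy(total_bids):
    """Traverse slots in order; give each slot the best remaining ad."""
    num_slots = len(next(iter(total_bids.values())))
    remaining = list(total_bids)  # ads not yet placed, in insertion order
    assignment = {}
    for slot in range(num_slots):
        if not remaining:
            break
        best = max(remaining, key=lambda ad: total_bids[ad][slot])
        assignment[slot] = best
        remaining.remove(best)
    return assignment


def bidder_slot_pair_greedy(total_bids):
    """Repeatedly take the highest (ad, slot) bid among unused ads and slots."""
    num_slots = len(next(iter(total_bids.values())))
    pairs = sorted(
        ((bid, ad, slot)
         for ad, bids in total_bids.items()
         for slot, bid in enumerate(bids)),
        key=lambda t: t[0],
        reverse=True,
    )
    assignment, used_ads = {}, set()
    for bid, ad, slot in pairs:
        # Skipping is equivalent to removing the winner's other bids
        # and the other advertisers' bids on the filled slot.
        if ad in used_ads or slot in assignment:
            continue
        assignment[slot] = ad
        used_ads.add(ad)
        if len(assignment) == num_slots:
            break
    return assignment


# Made-up total bids where slot 1 is worth more than slot 0
# (position_discount is not monotonically decreasing).
bids = {"A": [0.8, 1.0], "B": [0.7, 0.9]}
print(slot_first_greedy(bids))        # {0: 'A', 1: 'B'}
print(bidder_slot_pair_greedy(bids))  # {1: 'A', 0: 'B'}
```

In this example the two strategies disagree: slot-first greedy places A in slot 0 because it visits slot 0 first, while bidder-slot pair greedy places A in slot 1, where its total bid is the highest overall.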

Inline vs. non-inline conversion

Inline: specific actions performed on ad units

non-inline: actions performed outside ad units

https://www.internalfb.com/intern/wiki/Ad-event-types/overview/

there is also a good answer here.

You can check real-time ads traffic of different ads types here.

l0, l1, l2, l3, l4, l5 meaning

l1 = ads, l2 = adset, l3 = campaign, l4 = ad account, l5 = business. See [10, 11]

What is dilution / backtest / default v0 / explicit v0?

My colleague explained to me how dilution arises in the context of ads but I don’t have an answer for default/explicit v0:

At any given time, many QRT universes are running for different testing purposes, so one user can belong to multiple QRT universes at once. Several universes may test changes to the same feature / model type. The question is then which universe’s treatment a user receives if he or she belongs to multiple universes that test the same feature / model type. In practice, there is always a deterministic priority ordering among QRT universes. So even if a user belongs to universe A, he or she may always receive the treatment of universe B. In this case, universe B dilutes the results of universe A, and readings of universe A should be amplified by dividing by (1 – dilute factor). In practice, a dilute factor above 20% is considered too high, and the readings are treated as invalid.
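As a rough sketch of the adjustment described above: the function below assumes that “amplifying” a reading means dividing the observed value by (1 - dilute factor), and that readings with a dilute factor above 20% are discarded. The function name and the `max_dilute_factor` parameter are hypothetical.

```python
def dilution_adjusted_reading(observed, dilute_factor, max_dilute_factor=0.2):
    """Return the amplified reading, or None if dilution is too high.

    Assumption: a fraction `dilute_factor` of the universe's users actually
    received another universe's treatment, so the observed effect is scaled
    down by (1 - dilute_factor).
    """
    if not 0.0 <= dilute_factor < 1.0:
        raise ValueError("dilute_factor must be in [0, 1)")
    if dilute_factor > max_dilute_factor:
        return None  # reading considered invalid
    return observed / (1.0 - dilute_factor)


print(dilution_adjusted_reading(0.9, 0.1))   # roughly 1.0
print(dilution_adjusted_reading(0.9, 0.25))  # None: dilution above 20%
```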

I have also seen dilution defined outside the ads context [12]. In that case, suppose X users are allocated to the test group but only Z% of them actually see the test feature. Any metrics read from the test group then reflect only X * Z% users.

Ads optimization goal vs. conv type vs. AdsEventTrait

From [17]: AdOptimizationGoal is customer facing – when our advertiser creates an AdSet (L2), they pick an optimization method. ConversionType is an internal field, which is used to determine the correct models to use. Non-UOF systems use ConversionType directly. Those already migrated to UOF use AdEventTraits (AET), which is computed from ConversionType, AdOptimizationGoal and other things. In a nutshell, AET is a newer concept similar to ConversionType, but it captures a lot more information like the attribution information. In the old system, Ads can only be optimized using a single event (ConversionType). With UOF, Ads can be optimized using multiple events (AETs). Impressions and clicks are the actual events.

Unified Optimization Framework [16]

Unified Optimization Framework (UOF) provides a consistent and principled development flow for ad product / quality bid. It covers most parts of the current end-to-end development process. If you want to know anything about UOF, [16] is the right place to start.

Generate ad model baselines

At large companies, ad models are iterated through a structured pipeline. In each iteration, a strong baseline is generated as the reference point for new technical proposals. Baselines must be built rigorously, because other proposals are judged against them. How do we build baselines? We divide the process into three steps [18]:

- B1 [Pure Refresh]: SmartClone the v0 job but only remove hard-deprecated features; it’s the closest trainable baseline to v0. Because v0 uses different training dates / settings, we use this job as a representation of v0 to compare training metrics against.

- B2 [Launchable Refresh]: SmartClone the v0 job and remove/replace all features that block the launch; it’s the launchable baseline that we can directly use for production. This job usually is the fallback and lower bound of our baseline jobs; only when other jobs are better than this B2 job will they be picked as final baseline job.

- B3 [Feature Refresh]: AMR Clone the v0 job and replace the features with new feature importance results using AMR; it’s the baseline that contains new features. We use this job to harvest the gain of new features / feature importance techniques.

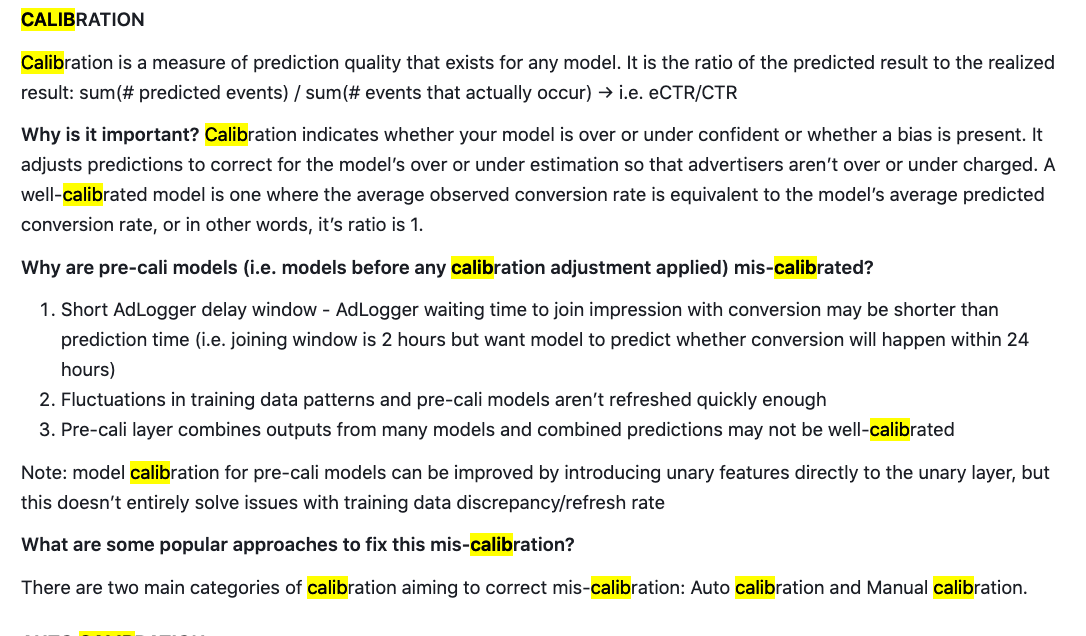

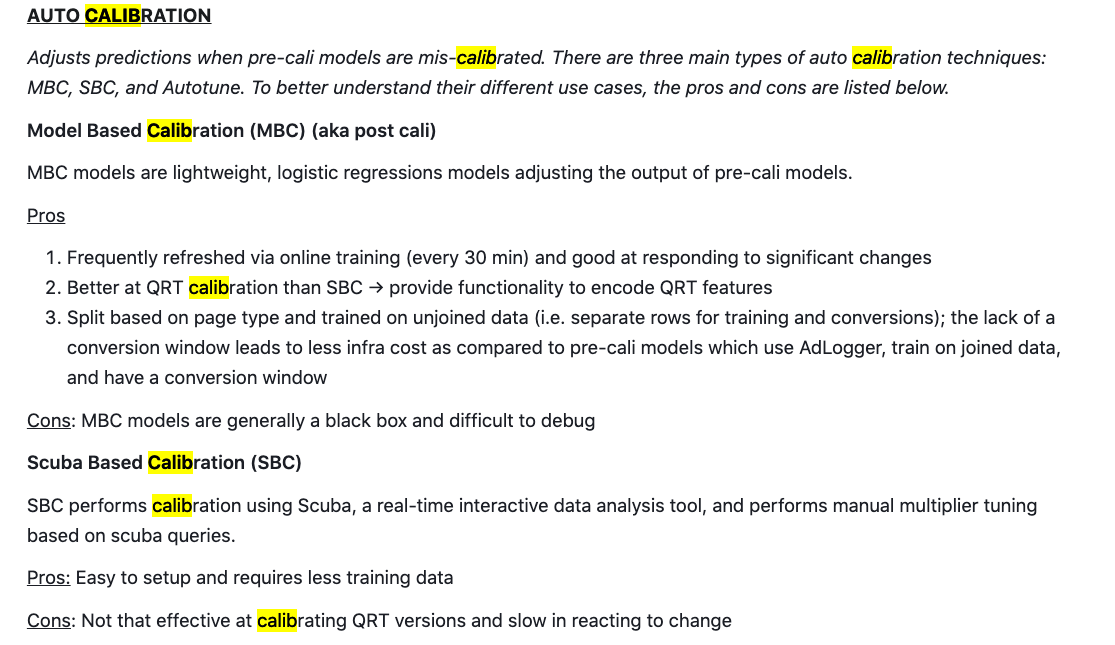

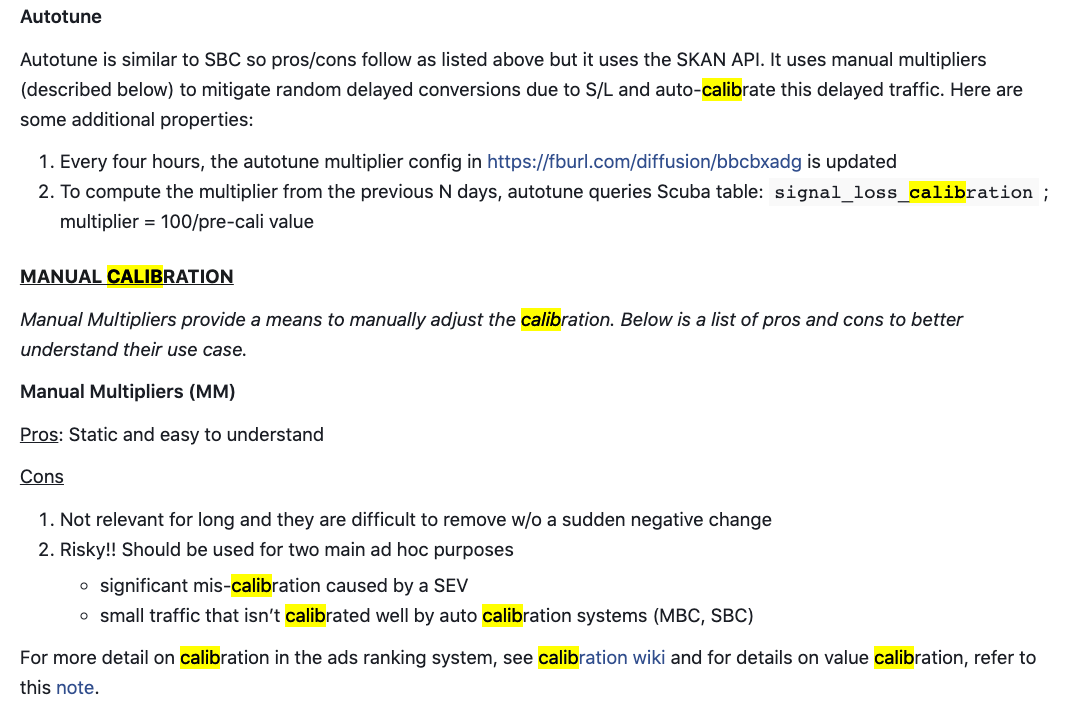

Calibration [19]

Other variations of NE are also used, such as Coarse NE (an S/L-resilient offline metric) and cali-free NE (a metric that is resilient to the frequent high variance in eval NE caused by miscalibration). See [19] for a fuller introduction.
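For readers unfamiliar with the base metrics, here is a minimal sketch. It assumes NE means normalized entropy as commonly defined in the public literature (average log loss divided by the entropy of the empirical base rate) and that calibration is the ratio of summed predictions to summed labels. It does not implement Coarse NE or cali-free NE, whose definitions are in [19].

```python
import math


def normalized_entropy(labels, predictions):
    """Average log loss normalized by the entropy of the base rate."""
    n = len(labels)
    log_loss = -sum(
        y * math.log(p) + (1 - y) * math.log(1 - p)
        for y, p in zip(labels, predictions)
    ) / n
    base_rate = sum(labels) / n
    base_entropy = -(base_rate * math.log(base_rate)
                     + (1 - base_rate) * math.log(1 - base_rate))
    return log_loss / base_entropy


def calibration(labels, predictions):
    """Ratio of expected to realized positives; 1.0 means well calibrated."""
    return sum(predictions) / sum(labels)


# Made-up labels and predictions for illustration only.
labels = [1, 0, 0, 0]
constant = [0.25, 0.25, 0.25, 0.25]  # always predicts the base rate
informed = [0.7, 0.1, 0.1, 0.1]      # ranks the positive example higher

print(normalized_entropy(labels, constant))  # approximately 1.0
print(normalized_entropy(labels, informed))  # below 1.0
print(calibration(labels, informed))         # approximately 1.0
```

A model that always predicts the base rate has NE of about 1, so lower values indicate predictions that are more informative than the base rate.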

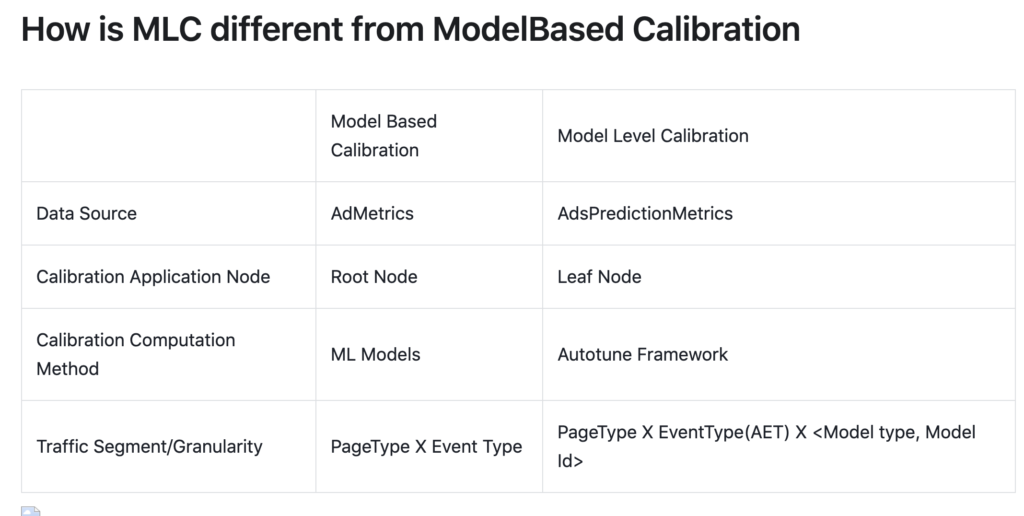

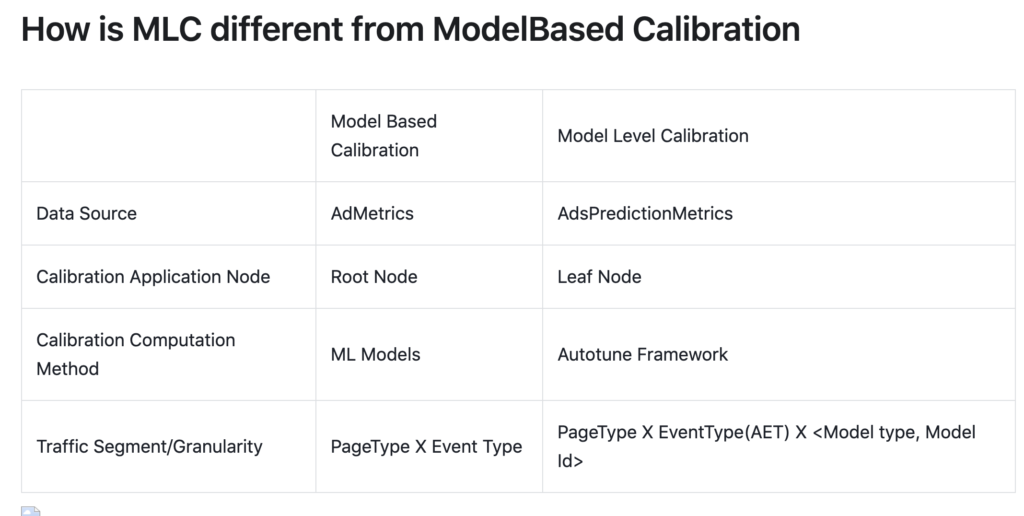

Currently, we adopt model-level calibration (MLC) instead of ads-event-level calibration [20]. [20] also mentions that MBC will be deprecated completely once MLC is fully online. The difference between MLC and MBC is listed below [21]:

References

[1] Ads metrics min delivery camp: https://docs.google.com/presentation/d/19spfxiPCQ-MG1yn1ufhNdC4EOWgb7DpAKxICvMXL1cA/edit?usp=sharing

[2] UDV Wiki: https://fburl.com/wiki/ryaxbcet

[3] Organic bid wiki: https://www.internalfb.com/intern/wiki/FAM_Quality/Concepts/Organic_bids/

[4] BBR vs. EBR: https://www.internalfb.com/intern/wiki/BCR_Delivery/How_is_BBR_and_EBR_different/

[5] Ads Score Primer: https://www.internalfb.com/intern/wiki/Ads/Delivery/AdsRanking/Ads_Core_ML_Ranking_Overview/Ad_Score_Explained_Primer/

[6] Auction / bidding: https://docs.google.com/presentation/d/1B843o2SE0ERXBWmo7HLtodBCanHRY4OEJFYhvRl0Prc/edit#slide=id.g9fd629c270_1_192

[7] Bidder Slot Pair Greedy Example: https://docs.google.com/spreadsheets/d/1FbIT1I3buy3x36S7CDR8zlTo85hlSdaTz-MnMn4bPUE/edit#gid=0

[8] Ad event types: https://www.internalfb.com/intern/wiki/Ad-event-types/

[9] Ads Score wiki: https://www.internalfb.com/intern/wiki/Ads/Delivery/AdsRanking/Ranking/Metrics/AdsScore/

![Rendered by QuickLaTeX.com \[p(\theta|x)=\frac{p(\theta) p(x|\theta)}{p(x)}=\frac{p(\theta) p(x|\theta)}{\int_\theta p(\theta) p(x|\theta) d\theta}\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-2529e21a8f21d8b383b095d0962a740d_l3.png)

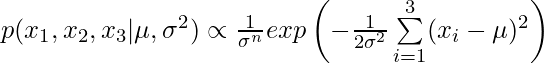

. In this simple example, there is an analytical expression for the posterior distribution

. In this simple example, there is an analytical expression for the posterior distribution ![Rendered by QuickLaTeX.com \[\alpha(\theta^{new}, \theta^{old})=min \left(1, \frac{p(\theta^{old}|x)g(\theta^{new}|\theta^{old})}{p(\theta^{new}|x) g(\theta^{old}|\theta^{new})}\right)\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-75a1b765204a57aa4e742b7c38f731ef_l3.png)

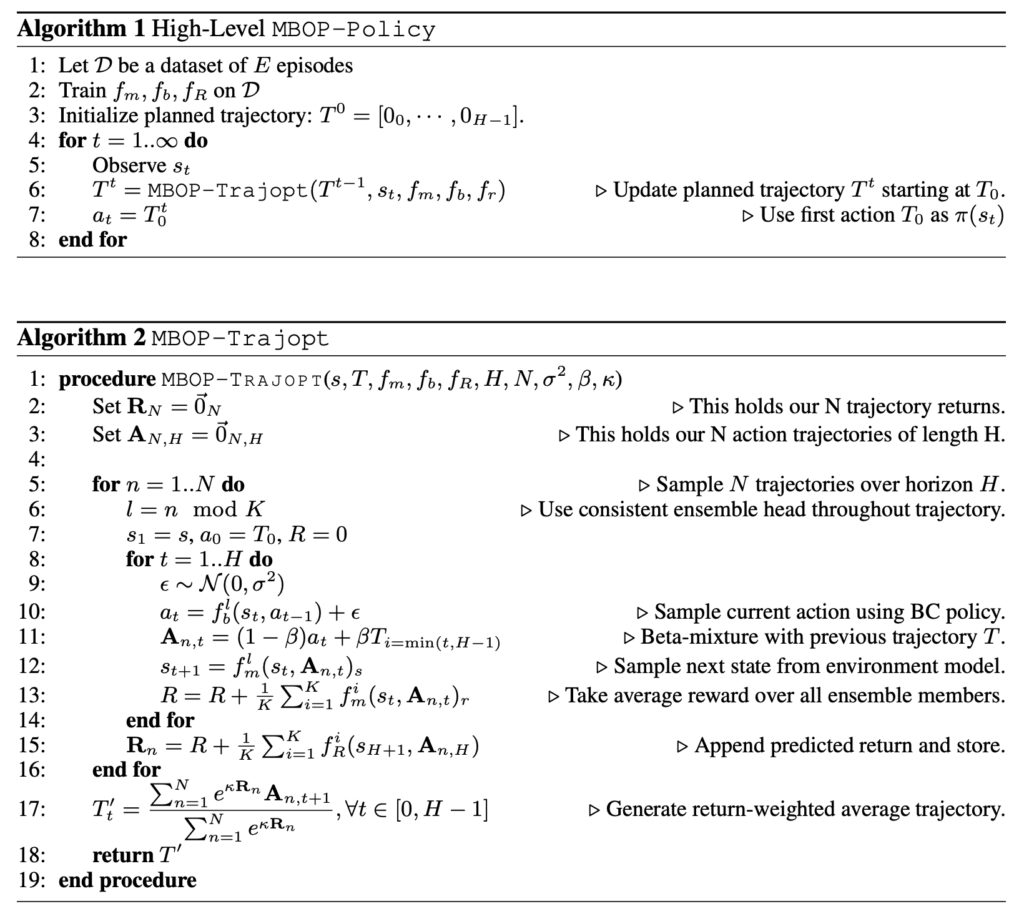

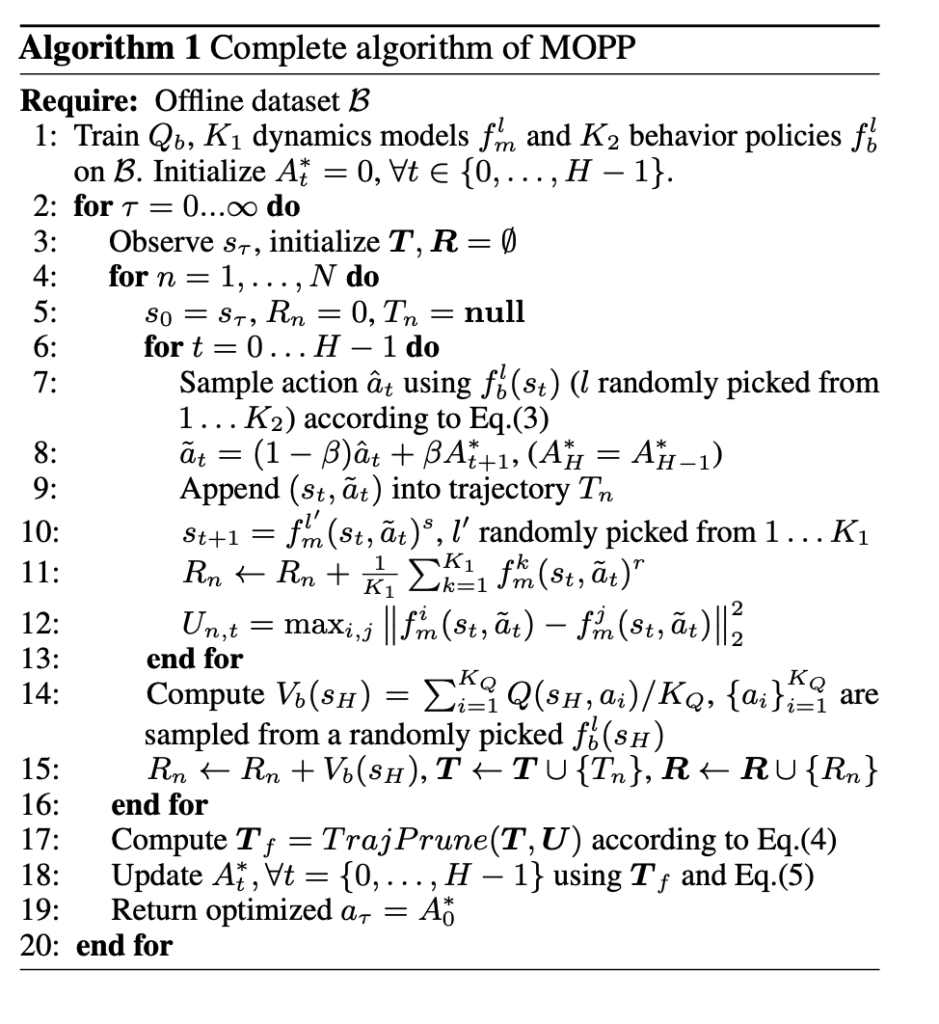

Note one detail at line 14: if we simulate

Note one detail at line 14: if we simulate