We have briefly touched some concepts of causal inference in [1, 2]. This post introduces some more specific works which apply causal inference in recommendation systems. Some works need to know the background of backdoor and frontdoor adjustments. So we will introduce them first.

Backdoor and frontdoor adjustment

Suppose we have a causal graph like below and we want to know the causal effect of X on Y. This can be translated to computing ![]() .

.

![]() can be seen as the post-intervention distribution on a modified causal graph, in which we want to intervene on

can be seen as the post-intervention distribution on a modified causal graph, in which we want to intervene on ![]() by setting a specific value

by setting a specific value ![]() on

on ![]() . Therefore, the parents of

. Therefore, the parents of ![]() do not affect its value anymore and this corresponds to removing all the parents of

do not affect its value anymore and this corresponds to removing all the parents of ![]() in the original causal graph:

in the original causal graph:

When backdoor or frontdoor criteria are satisfied, we can apply backdoor and frontdoor adjustments to express ![]() only using the observational distribution on the original causal graph:

only using the observational distribution on the original causal graph:

Backdoor:

![Rendered by QuickLaTeX.com \[P(y|do(X=x)) = \sum\limits_u P(y|x,u)P(u)\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-69af674e11974d3fa7af148e3c9421ea_l3.png)

Frontdoor:

![Rendered by QuickLaTeX.com \[P(y|do(X=x)) = \sum\limits_z P(z|x) \sum\limits_{x'} P(y|x', z)P(x')\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-7339016f5308bd6488b430e19faf43f1_l3.png)

The difference between backdoor and frontdoor adjustment is that the backdoor adjustment needs to assume that all confounder factors are observed (note ![]() is used in the backdoor adjustment formula but not in the frontdoor adjustment formula).

is used in the backdoor adjustment formula but not in the frontdoor adjustment formula).

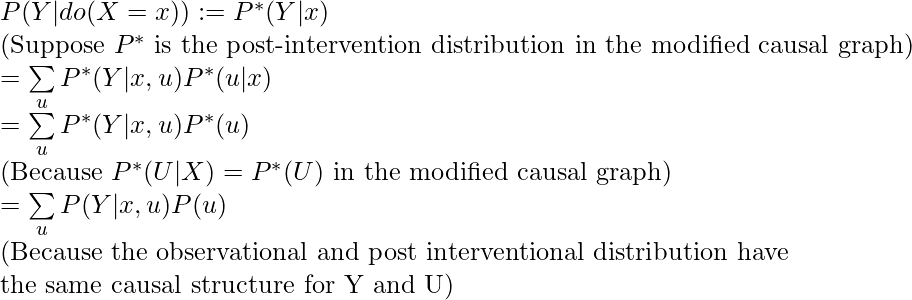

I find two places which shed lights on how backdoor and frontdoor adjustment formulas are derived. Let’s first look at how the backdoor adjustment formula is derived, from [3]:

Note that the backdoor adjustment assumes we can observe the confounder. That’s why you can see the summation over ![]() in

in ![]() . The frontdoor adjustment assumes we cannot observer

. The frontdoor adjustment assumes we cannot observer ![]() . Therefore, we have to use other observational distributions to decompose

. Therefore, we have to use other observational distributions to decompose ![]() . While [3] also explains how to derive the frontdoor adjustment for

. While [3] also explains how to derive the frontdoor adjustment for ![]() , I feel [4] did a better job in explanation.

, I feel [4] did a better job in explanation.

![Rendered by QuickLaTeX.com P(Y|do(X=x)) \newline= \sum\limits_z P(Y|z, do(X=x))P(z|do(X=x)) \newline =\sum\limits_z P(Y|z, do(X=x))P(z|x) \newline (P(z|do(X=x))=P(z|x) \text{ because there is no confounder between X and Z}) \newline (\text{However, we can't change }P(Y|z, do(X=x)) \text{ to }P(Y|z, x) \text{ because} \newline \text{there is an (unobserved) confounder between X and Y} )\newline=\sum\limits_z P(Y|do(Z=z), do(X=x))P(z|x) \newline \text{A trick to change from } P(Y|z, do(X=x))\text{ to } P(Y|do(Z=z), do(X=x)) \newline = \sum\limits_z P(Y|do(Z=z))P(z|x) \newline do(X=x)\text{'s effect is completely blocked by }do(Z=z)\newline\text{hence }P(Y|do(Z=z), do(X=x))=P(Y|do(Z=z)) \newline=\sum\limits_z P(z|x) \left[\sum\limits_{x'}P(Y|z, x')P(x')\right] \newline \text{Applying backdoor adjustment between do(Z=z) and Y}](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-5eaf80a6bd203c2cdbb7a63dd1d3ee75_l3.png)

Note that in the last equation, ![]() cannot be further simplified to

cannot be further simplified to ![]() because of the presence of

because of the presence of ![]() which can affect both

which can affect both ![]() and

and ![]() [5].

[5].

Backdoor adjustment in real world papers

After introducing backdoor / front adjustment, let’s examine how they are used in practice. I came across two papers [9, 10], both of which applied backdoor adjustment.

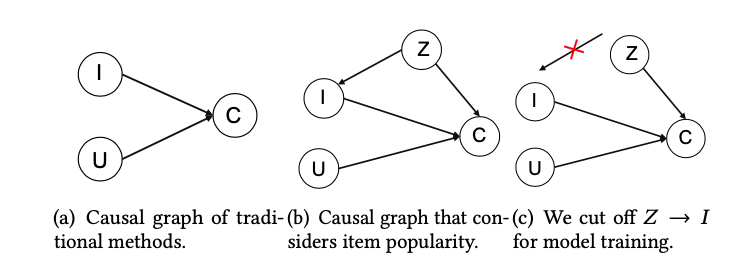

[9] tries to account for popularity bias explicitly, for which many other models fail to take into account. In the diagram below, I represents items, U represents users, C represents user interaction on specific items, and Z represents popularity. Popularity is a confounder for items because more popular items tend to get exposed more often and create a feedback loop to continue biasing the model. Popularity is also a confounder for user interaction because users tend to have “herd mentality” thus tend to follow the majority to consume popular items.

To really know the intrinsic value of an item to a user, we have to estimate ![]() . By applying the backdoor adjustment, we have

. By applying the backdoor adjustment, we have ![]() .

. ![]() can be estimated by any deep learning model which takes as input user-side features, item-side features, as well as popularity. But the paper has a nice trick to decompose

can be estimated by any deep learning model which takes as input user-side features, item-side features, as well as popularity. But the paper has a nice trick to decompose ![]() , where

, where ![]() is model parameters. Then they can use

is model parameters. Then they can use ![]() to rank items based on real intrinsic value.

to rank items based on real intrinsic value.

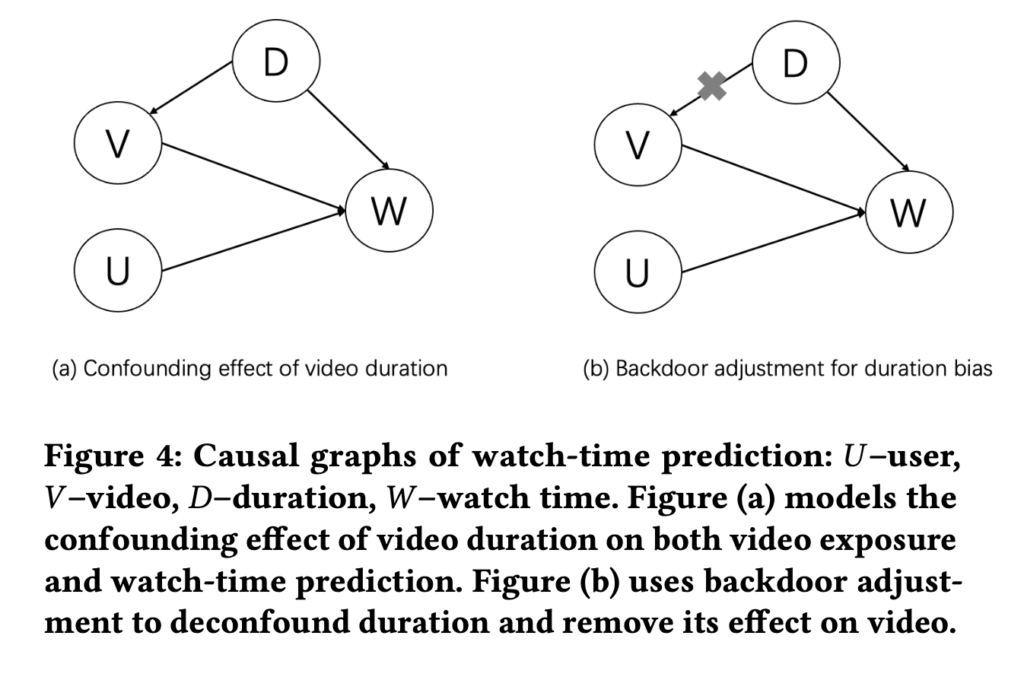

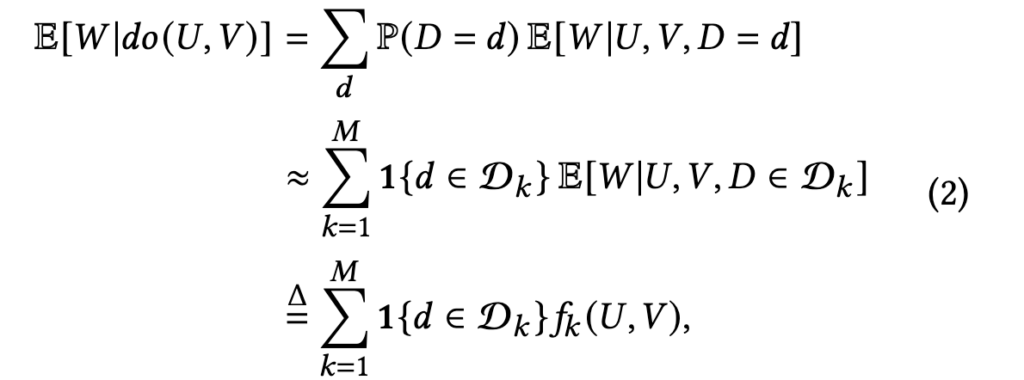

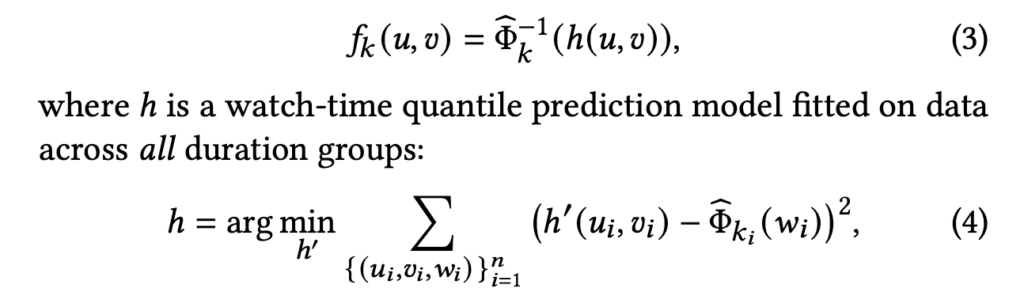

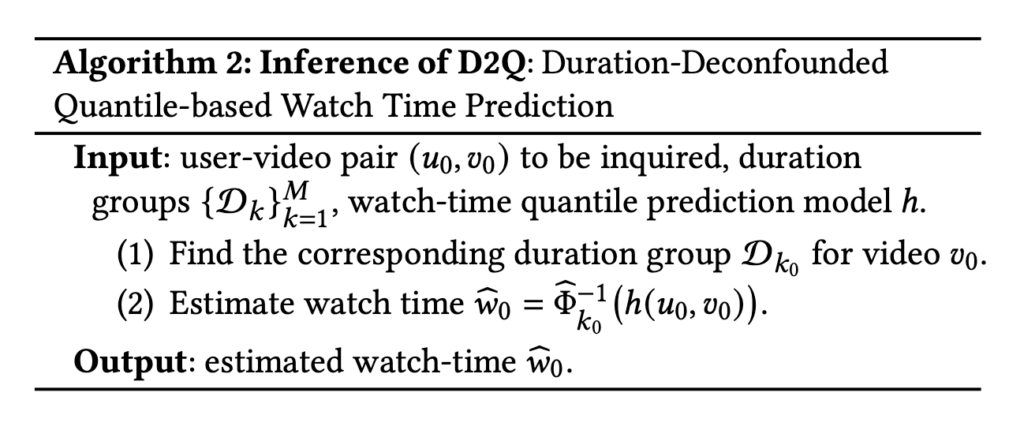

[10] provides another idea to do backdoor adjustment. They are tackling the bias of video length which is commonly seen in modern video recommendation systems. The bias exists because video watch time is often a topline metric video recommendation systems try to optimize. As a result, video with long duration tends to be overexposured and tend to amplify the video length bias feedback loop. The causal graph the authors model is as below, where U, V, D, and W represents user, video, duration, and watch time, respectively:

The backdoor adjustment to estimate the real intrinsic value ![]() is

is

Basically, the formula says that we will group videos into different duration buckets. The real intrinsic value ![]() is an estimator to estimate the watch time percentile within that duration bucket.

is an estimator to estimate the watch time percentile within that duration bucket. ![]() can be a shared model that predicts the watch time percentile in different buckets.

can be a shared model that predicts the watch time percentile in different buckets.

It is interesting to see that during the inference, they will still rank based on predicted watch time, not the intrinsic value ![]() :

:

A question could arise naturally that why we could not use a deep learning model to take as input user and video features as well as the confounder duration? This goes back to the motivation of the paper itself [10]. Such a deep learning model tends to predict high watch time for long videos simply because they are over-exposed (i.e., many of them are put in top positions so naturally long videos attract more watch time). However, with bucketing durations and changing labels to percentiles within each bucket, the model prediction will be less biased.

Causal Discovery

The paper [7] introduces a cool way to trim user history in user sequence modeling. The context is that in sequential recommendation problems, the objective function can be formulated as following:

![Rendered by QuickLaTeX.com \[min -\sum\limits_{k=1}^N\sum\limits_{j=1}^{l_k} log f(\vec{v}_k^j | \mathbf{H}_k^j),\]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-d00f0f3b82e978c3d797a9cc35588e4f_l3.png)

where ![]() is user

is user ![]() ‘s

‘s ![]() -th interacted item in history represented as a one-hot vector, and

-th interacted item in history represented as a one-hot vector, and ![]() is the user interacted item history before the

is the user interacted item history before the ![]() -th item.

-th item. ![]() can be a softmax function so that minimizing the function will encourage the model to accurately predict which item the user will most likely interact with next given the history.

can be a softmax function so that minimizing the function will encourage the model to accurately predict which item the user will most likely interact with next given the history.

However, user history may contain many irrelevant items which makes prediction hard. [7] proposes using causal discovery to discover causally related items so that we only use purified history to predict next items users may interact with. The sequential model will use a more focused history for prediction and will be potentially more accurate in prediction.

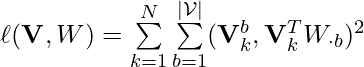

Denote all users’ history as ![]() , the objective then becomes:

, the objective then becomes:

![Rendered by QuickLaTeX.com min - \ell(\mathbf{V}, W) + \lambda ||W||_1 \newline = min -\sum\limits_{k=1}^N\sum\limits_{j=1}^{l_k}\sum\limits_{b=1}^{|\mathcal{V}|} log f(\left[\vec{v}_k^j\right]_b | \mathbf{\tilde{H}}_{kb}^j(W)) + \lambda ||W||_1 \newline s.t. \quad trace(e^{W \odot W})=\vert\mathcal{V}\vert,](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-3f1576d5f29113b67a56952f36dd4680_l3.png)

where ![]() is the discovered causal graph between items, and

is the discovered causal graph between items, and ![]() is the purified history for each potential item

is the purified history for each potential item ![]() at the time of prediction.

at the time of prediction. ![]() is a real-valued matrix which approximates the actual binarized causal DAG, i.e.,

is a real-valued matrix which approximates the actual binarized causal DAG, i.e., ![]() means that the change of item

means that the change of item ![]() will cause the change of item

will cause the change of item ![]() .

.

Note that the form of ![]() is from NOTEARS [8], the work introducing differentiable causal discovery. Originally in [8],

is from NOTEARS [8], the work introducing differentiable causal discovery. Originally in [8], ![]() , which can be thought as a matrix expressing all interacted items for each user without temporal order.

, which can be thought as a matrix expressing all interacted items for each user without temporal order.  is a least-square error, which intuitively means that each observed interacted item should be explained by all other observed interacted items and the causal graph

is a least-square error, which intuitively means that each observed interacted item should be explained by all other observed interacted items and the causal graph ![]() . [8] shows that, if

. [8] shows that, if ![]() is the binarized version of

is the binarized version of ![]() (the actual causal directed acyclic graph), then

(the actual causal directed acyclic graph), then ![]() .

. ![]() as a relaxed version of

as a relaxed version of ![]() can also be constrained in similar way but we need to slightly modify the constraint as

can also be constrained in similar way but we need to slightly modify the constraint as ![]() .

.

The novelty of [7] is that it puts the causal discovery framework proposed in [8] in a sequential recommendation context such that temporal information is taken into account and ![]() is no longer just a matrix of all interacted items for each user. Instead,

is no longer just a matrix of all interacted items for each user. Instead, ![]() .

.

On a side note, the paper [7] also introduces a differentiable clustering method which is also pretty cool.

References

[1] Causal Inference https://czxttkl.com/2020/10/13/causal-inference/

[2] Tools needed to build an RL debugging tool: https://czxttkl.com/2020/09/08/tools-needed-to-build-an-rl-debugging-tool/

[7] Sequential Recommendation with Causal Behavior Discovery: https://arxiv.org/abs/2204.00216

[8] DAGs with NO TEARS Continuous Optimization for Structure Learning: https://arxiv.org/abs/1803.01422

[9] Causal Intervention for Leveraging Popularity Bias in Recommendation: https://arxiv.org/pdf/2102.11107.pdf

[10] Deconfounding Duration Bias in Watch-time Prediction for Video Recommendation: https://arxiv.org/abs/2206.06003