I am curious whether generative models such as GANs and VAEs can fit multimodal data. [1] gives an overview of different generative models and notes that VAEs have a clear probabilistic objective function and are more efficient.

[2] shows that diffusion models (score-based generative models) fit multimodal distributions better than VAEs and GANs. [4] notes that VAEs do not suffer from mode collapse the way GANs do, but they have a related problem: the decoder may ignore the latent representation and generate samples arbitrarily. InfoVAE [5] can mitigate this. [6] also suggests that beta-VAE and adversarial autoencoders (AAE) [7] perform better than a vanilla VAE.
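For reference, the objective that these variants modify can be written as below. This is the standard evidence lower bound with a weight $\beta$ on the KL term; the notation ($q_\phi$ for the encoder, $p_\theta$ for the decoder, $p(z)$ for the prior) is my own and is not taken from the cited posts.

$$
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\left[\log p_\theta(x \mid z)\right] - \beta \, D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right)
$$

Setting $\beta = 1$ gives the vanilla VAE objective, and beta-VAE changes $\beta$ (typically to a value above 1). If the KL term is driven to zero for every $x$, then $q_\phi(z \mid x) = p(z)$ and $z$ carries no information about $x$, which is the "decoder ignores the latent" failure described in [4].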

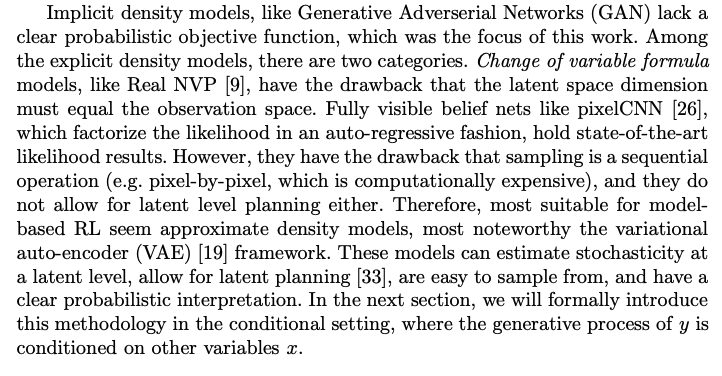

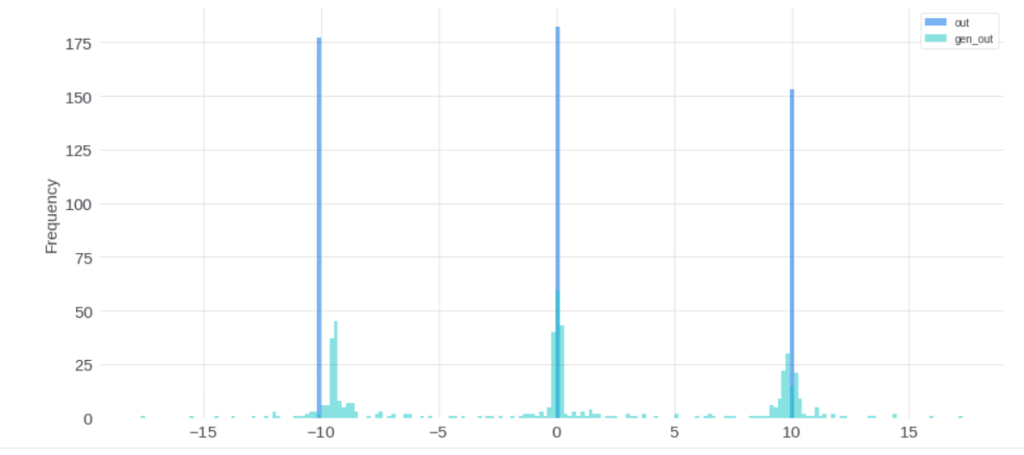

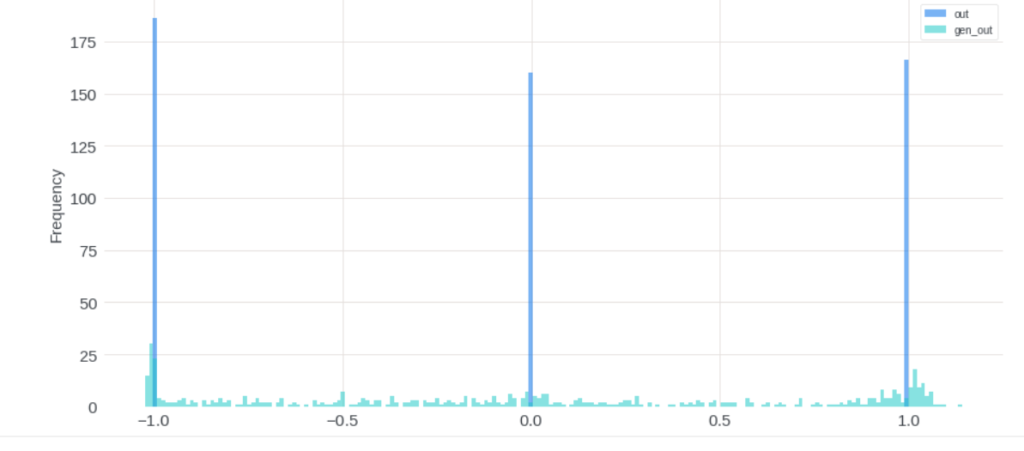

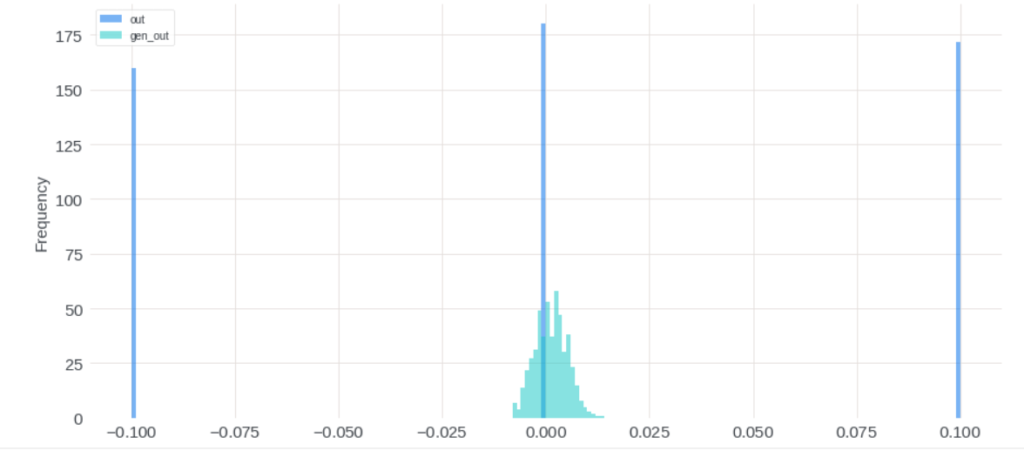

I ran a hands-on toy experiment in which I generated three-mode distributions and fit a vanilla VAE to them. The results are not very satisfying, given how simple the problem is, which suggests that we may need the more advanced VAE variants described above.
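A minimal sketch of how such three-mode training data could be generated, using only the standard library. This is not the code from `train_VAE_simple.py`; the function name `sample_three_modes` and the `noise_std` and `seed` parameters are hypothetical, and the default of zero noise (every point sits exactly on a mode) is an assumption about how the data were drawn.

```python
import random


def sample_three_modes(n, centers=(-10.0, 0.0, 10.0),
                       weights=(1 / 3, 1 / 3, 1 / 3),
                       noise_std=0.0, seed=0):
    """Draw n scalar samples from a mixture with one component per center.

    weights sets the share of data per mode (balanced by default).
    noise_std is an assumed Gaussian spread around each center; 0.0 places
    every sample exactly on its mode.
    """
    rng = random.Random(seed)
    # Pick a mode index for each sample according to the mixture weights.
    labels = rng.choices(range(len(centers)), weights=weights, k=n)
    # Place each sample at its mode, plus optional Gaussian noise.
    return [centers[i] + rng.gauss(0.0, noise_std) for i in labels]


balanced = sample_three_modes(3000)
close_modes = sample_three_modes(3000, centers=(-0.1, 0.0, 0.1))
imbalanced = sample_three_modes(3000, weights=(0.8, 0.1, 0.1))
```

Changing `centers` covers the well-separated and closely spaced settings, and changing `weights` covers the imbalanced one. The 80/10/10 split is only an illustrative choice.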

First, when the three modes are well separated and well balanced (I generated 33% of the data at -10, 33% at 0, and the remaining 33% at 10):

However, when the three modes are at -1, 0, and 1, the generated data are not well separated:

When the three modes are at -0.1, 0, and 0.1, the generated data concentrate on only one mode (mode collapse!):

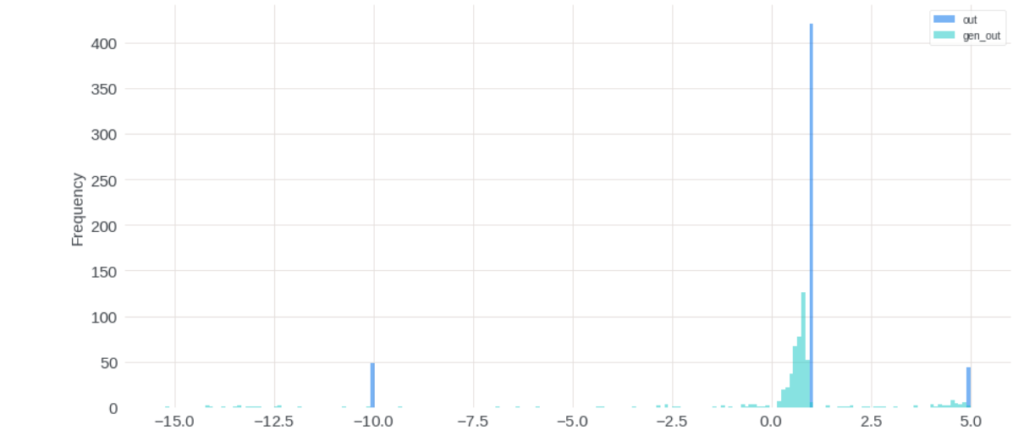
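Besides inspecting the plots, one rough way to quantify how generated samples spread across the modes is to assign each sample to its nearest mode and report the share per mode. The sketch below is my own illustration, not part of the original script; `mode_coverage` is a hypothetical helper, and nearest-center assignment is a crude diagnostic that ignores how far a sample actually is from any mode.

```python
from collections import Counter


def mode_coverage(samples, centers):
    """Return the fraction of samples whose nearest center is each center."""
    if not samples:
        raise ValueError("samples must not be empty")
    # Index of the closest center for every sample.
    nearest = (
        min(range(len(centers)), key=lambda i: abs(x - centers[i]))
        for x in samples
    )
    counts = Counter(nearest)
    return [counts[i] / len(samples) for i in range(len(centers))]


# Hand-written stand-in for generated samples, for illustration only.
generated = [-0.09, 0.0, 0.01, 0.02]
print(mode_coverage(generated, centers=(-0.1, 0.0, 0.1)))  # [0.25, 0.75, 0.0]
```

A generator that has collapsed onto a single mode would show a fraction close to 1.0 in one slot and close to 0.0 in the others, while a well-fit balanced model would show roughly equal fractions.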

I also tried giving the three modes imbalanced amounts of data, and the VAE did not perform well either:

The toy experiment’s code can be found in `train_VAE_simple.py` in https://www.dropbox.com/sh/n8lbdzvrdvahme7/AADFgs_JLz8BhHzOaUCcJ1MZa?dl=0.

Finally, [3] seems to be a good introductory tutorial on diffusion models.

References

[1] Learning Multimodal Transition Dynamics for Model-Based Reinforcement Learning: https://arxiv.org/pdf/1705.00470.pdf

[2] Can Push-forward Generative Models Fit Multimodal Distributions?: https://openreview.net/pdf?id=Tsy9WCO_fK1

[3] https://www.youtube.com/watch?v=cS6JQpEY9cs

[4] https://ai.stackexchange.com/questions/8879/why-doesnt-vae-suffer-mode-collapse

[5] https://ermongroup.github.io/blog/a-tutorial-on-mmd-variational-autoencoders/

[6] https://ameroyer.github.io/reading-notes/representation%20learning/2019/05/02/infovae.html

[7] https://medium.com/vitrox-publication/adversarial-auto-encoder-aae-a3fc86f71758