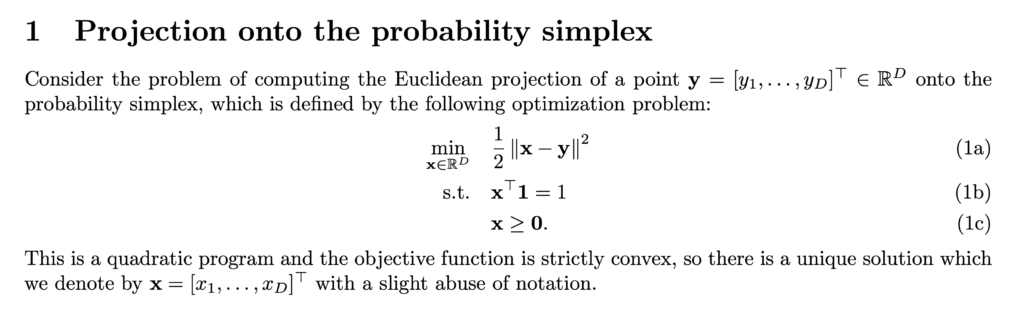

I am reading “Determinantal point processes for machine learning”, in which it uses projected gradient descent in Eqn. 212. More broadly, such problems have this general form:

where we want to map from ![]() to

to ![]() on the simplex. Since we often encounter problems of the sum-to-1 constraint, I think it is worth listing the solution in this post.

on the simplex. Since we often encounter problems of the sum-to-1 constraint, I think it is worth listing the solution in this post.

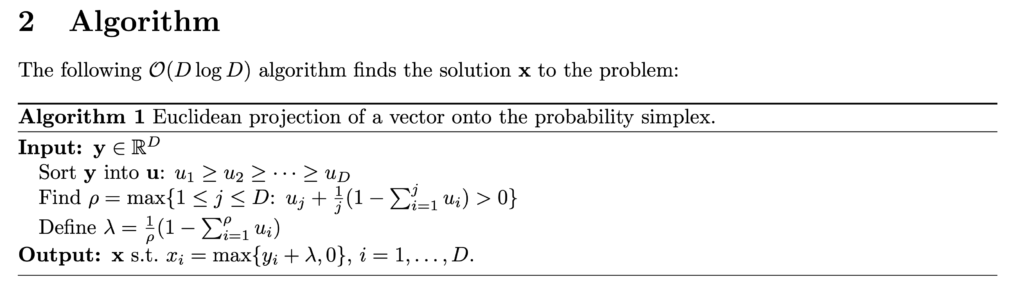

The algorithm is simple as detailed in https://eng.ucmerced.edu/people/wwang5/papers/SimplexProj.pdf:

We omit the proof but I do appreciate the elegancy of this algorithm.