In this post, I am going to explain the code (as much as I can) from theano LSTM tutorial: http://deeplearning.net/tutorial/lstm.html

You need to first understand LSTM. Here is an online recommended material: http://colah.github.io/posts/2015-08-Understanding-LSTMs/, in which many beautiful figures are provided to illustrate LSTM step by step.

The tutorial aims to predict positive/negative sentiment based on movie reviews in texts. Essentially, it uses RNN with LSTM units to accomplish a supervised learning task. The basic idea is as follows:

- Use vectors to represent words and convert each review into a `num_words` $latex \times$ `word_emb_dim` matrix. Representing words as vectors is a technique called word embedding. You can control your model complexity by flexibly setting the value of `word_emb_dim`. In the theano tutorial, the default `word_emb_dim` is set to 128.

- It uses mini-batch for learning. Therefore, you need to assemble multiple review matrices from step 1 into a 3-dimension tensor, with the shape `num_samples` $latex \times$ `max_num_words` $latex \times$ `word_emb_dim`. `max_num_words` is the max number of words among samples in mini-batch.

- It feeds the assembled mini-batch tensor into the RNN model. In RNN, the number of hidden units is by default set to 128, the same number of the default `word_emb_dim`. I am not sure whether it is a recommended practice to set the number of hidden units and the dimension of word embedding equal.

- After the tensor passes through the RNN, the model generates two tensors, one is stacked hidden unit vectors of the shape `num_samples` $latex \times$ `max_num_words` $latex \times$ `num_hidden_units`, the other is stacked memory cell output of the same shape. To go to the next step, we only need to keep the stacked hidden unit vectors.

- It applies mean pooling on the hidden unit outputs along the axis of words. Specifically, it calculates the mean of every component in the hidden unit vectors emitted by all the words in one review. Mean pooling results in a matrix of shape `num_samples` $latex \times$ `num_hidden_units`. It has one dimension less than the stacked hidden unit vectors from step 4, resulting from the mean pooling.

- Finally, the matrix from step 5 is fed into a logistic regression model. The number of LR weight aligns with `num_hidden_units`. The cost (objective function) is ultimately defined as a log likelihood function as usual logistic regression models do.

Now, let’s look at the real code from the theano tutorial. In most cases I don’t modify the default parameters as provided.

The main function as entrance:

if __name__ == '__main__':

# See function train for all possible parameter and there definition.

train_lstm(

max_epochs=100,

test_size=500,

)

The core part of training `train_lstm`:

def train_lstm(

dim_proj=128, # word embeding dimension and LSTM number of hidden units.

patience=10, # Number of epoch to wait before early stop if no progress

max_epochs=5000, # The maximum number of epoch to run

dispFreq=10, # Display to stdout the training progress every N updates

decay_c=0., # Weight decay for the classifier applied to the U weights.

lrate=0.0001, # Learning rate for sgd (not used for adadelta and rmsprop)

n_words=10000, # Vocabulary size

optimizer=adadelta, # sgd, adadelta and rmsprop available, sgd very hard to use, not recommanded (probably need momentum and decaying learning rate).

encoder='lstm', # TODO: can be removed must be lstm.

saveto='lstm_model.npz', # The best model will be saved there

validFreq=370, # Compute the validation error after this number of update.

saveFreq=1110, # Save the parameters after every saveFreq updates

maxlen=100, # Sequence longer then this get ignored

batch_size=16, # The batch size during training.

valid_batch_size=64, # The batch size used for validation/test set.

dataset='imdb',

# Parameter for extra option

noise_std=0.,

use_dropout=True, # if False slightly faster, but worst test error

# This frequently need a bigger model.

reload_model=None, # Path to a saved model we want to start from.

test_size=-1, # If >0, we keep only this number of test example.

):

# Model options

model_options = locals().copy()

print("model options", model_options)

load_data, prepare_data = get_dataset(dataset)

print('Loading data')

train, valid, test = load_data(n_words=n_words, valid_portion=0.05,

maxlen=maxlen)

if test_size > 0:

# The test set is sorted by size, but we want to keep random

# size example. So we must select a random selection of the

# examples.

idx = numpy.arange(len(test[0]))

numpy.random.shuffle(idx)

idx = idx[:test_size]

test = ([test[0][n] for n in idx], [test[1][n] for n in idx])

ydim = numpy.max(train[1]) + 1

model_options['ydim'] = ydim

print('Building model')

# This create the initial parameters as numpy ndarrays.

# Dict name (string) -> numpy ndarray

params = init_params(model_options)

if reload_model:

load_params('lstm_model.npz', params)

# This create Theano Shared Variable from the parameters.

# Dict name (string) -> Theano Tensor Shared Variable

# params and tparams have different copy of the weights.

tparams = init_tparams(params)

# use_noise is for dropout

(use_noise, x, mask,

y, f_pred_prob, f_pred, cost) = build_model(tparams, model_options)

if decay_c > 0.:

decay_c = theano.shared(numpy_floatX(decay_c), name='decay_c')

weight_decay = 0.

weight_decay += (tparams['U'] ** 2).sum()

weight_decay *= decay_c

cost += weight_decay

f_cost = theano.function([x, mask, y], cost, name='f_cost')

grads = tensor.grad(cost, wrt=list(tparams.values()))

f_grad = theano.function([x, mask, y], grads, name='f_grad')

lr = tensor.scalar(name='lr')

f_grad_shared, f_update = optimizer(lr, tparams, grads,

x, mask, y, cost)

print('Optimization')

kf_valid = get_minibatches_idx(len(valid[0]), valid_batch_size)

kf_test = get_minibatches_idx(len(test[0]), valid_batch_size)

print("%d train examples" % len(train[0]))

print("%d valid examples" % len(valid[0]))

print("%d test examples" % len(test[0]))

history_errs = []

best_p = None

bad_count = 0

if validFreq == -1:

validFreq = len(train[0]) // batch_size

if saveFreq == -1:

saveFreq = len(train[0]) // batch_size

uidx = 0 # the number of update done

estop = False # early stop

start_time = time.time()

try:

for eidx in range(max_epochs):

n_samples = 0

# Get new shuffled index for the training set.

kf = get_minibatches_idx(len(train[0]), batch_size, shuffle=True)

for _, train_index in kf:

uidx += 1

use_noise.set_value(1.)

# Select the random examples for this minibatch

y = [train[1][t] for t in train_index]

x = [train[0][t]for t in train_index]

# Get the data in numpy.ndarray format

# This swap the axis!

# Return something of shape (minibatch maxlen, n samples)

x, mask, y = prepare_data(x, y)

n_samples += x.shape[1]

cost = f_grad_shared(x, mask, y)

f_update(lrate)

if numpy.isnan(cost) or numpy.isinf(cost):

print('bad cost detected: ', cost)

return 1., 1., 1.

if numpy.mod(uidx, dispFreq) == 0:

print('Epoch ', eidx, 'Update ', uidx, 'Cost ', cost)

if saveto and numpy.mod(uidx, saveFreq) == 0:

print('Saving...')

if best_p is not None:

params = best_p

else:

params = unzip(tparams)

numpy.savez(saveto, history_errs=history_errs, **params)

pickle.dump(model_options, open('%s.pkl' % saveto, 'wb'), -1)

print('Done')

if numpy.mod(uidx, validFreq) == 0:

use_noise.set_value(0.)

train_err = pred_error(f_pred, prepare_data, train, kf)

valid_err = pred_error(f_pred, prepare_data, valid,

kf_valid)

test_err = pred_error(f_pred, prepare_data, test, kf_test)

history_errs.append([valid_err, test_err])

if (best_p is None or

valid_err <= numpy.array(history_errs)[:,

0].min()):

best_p = unzip(tparams)

bad_counter = 0

print( ('Train ', train_err, 'Valid ', valid_err,

'Test ', test_err) )

if (len(history_errs) > patience and

valid_err >= numpy.array(history_errs)[:-patience,

0].min()):

bad_counter += 1

if bad_counter > patience:

print('Early Stop!')

estop = True

break

print('Seen %d samples' % n_samples)

if estop:

break

except KeyboardInterrupt:

print("Training interupted")

end_time = time.time()

if best_p is not None:

zipp(best_p, tparams)

else:

best_p = unzip(tparams)

use_noise.set_value(0.)

kf_train_sorted = get_minibatches_idx(len(train[0]), batch_size)

train_err = pred_error(f_pred, prepare_data, train, kf_train_sorted)

valid_err = pred_error(f_pred, prepare_data, valid, kf_valid)

test_err = pred_error(f_pred, prepare_data, test, kf_test)

print( 'Train ', train_err, 'Valid ', valid_err, 'Test ', test_err )

if saveto:

numpy.savez(saveto, train_err=train_err,

valid_err=valid_err, test_err=test_err,

history_errs=history_errs, **best_p)

print('The code run for %d epochs, with %f sec/epochs' % (

(eidx + 1), (end_time - start_time) / (1. * (eidx + 1))))

print( ('Training took %.1fs' %

(end_time - start_time)), file=sys.stderr)

return train_err, valid_err, test_err

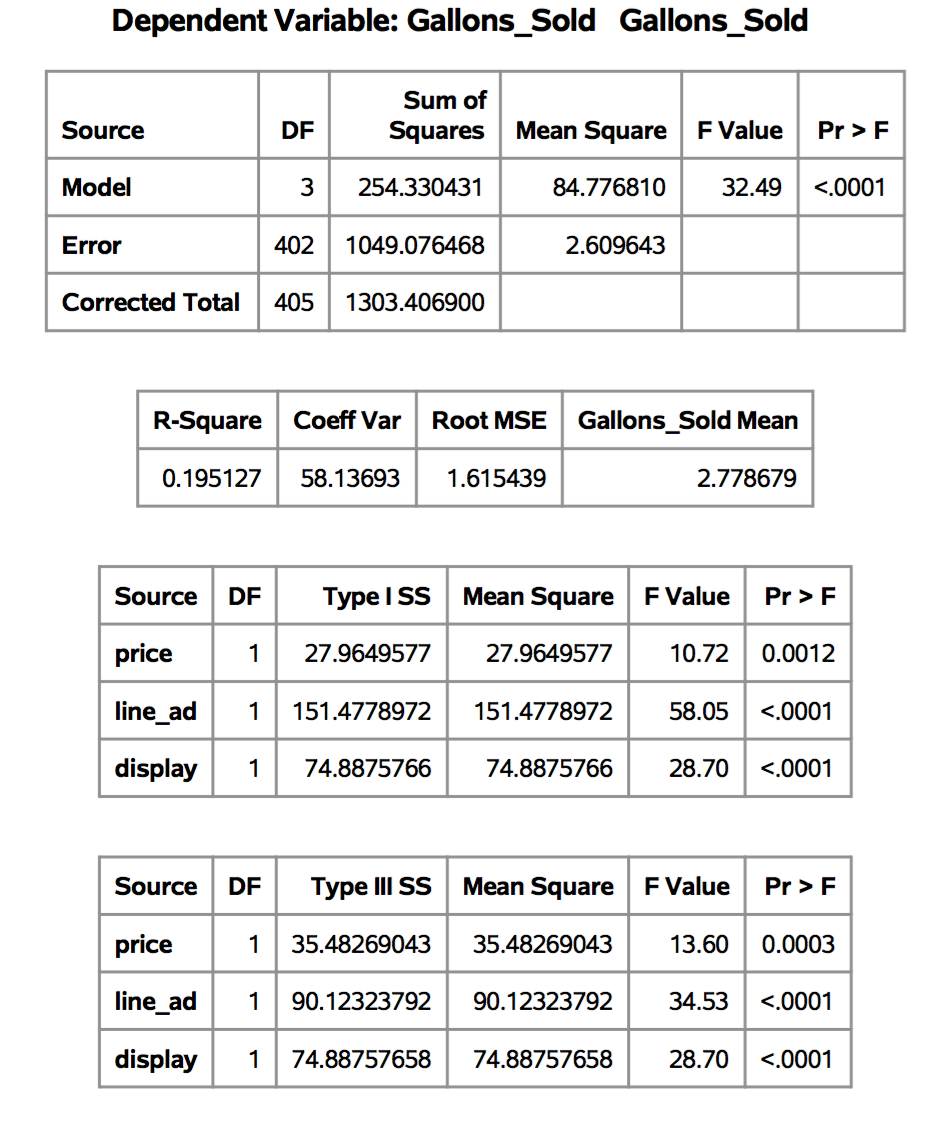

line 61: initialize all the parameters to be learned as theano shared variable. I print out the shape information of each parameters (under the default setting):

| Parameter name |

Note |

Shape |

| Wemb |

Word embedding. Every word is represented by a vector as a row |

(10000, 128) |

| lstm_W |

stacked weights involved in LSTM which connect input $latex X$: $latex W_i, W_f, W_o, W_c$. Stacking them for efficient learning as suggested in the tutorial. Each weight is a 128×128 square matrix. |

|

| lstm_U |

stacked weights involved in LSTM which connect previous hidden units: $latex U_i, U_f, U_o, U_c$. Each weight is a 128×128 square matrix. |

(128, 512) |

| lstm_b |

the biases inside LSTM unit |

(512,) |

| U |

The weights in the logistic regression. It contains two sets of weights, one for predicting positive and the other for negative |

(128,2) |

| b |

The biases in the logistic regression |

(2,) |

line 65: `build_model` is the crux part for building LSTM using theano.

def build_model(tparams, options):

trng = RandomStreams(SEED)

# Used for dropout.

use_noise = theano.shared(numpy_floatX(0.))

x = tensor.matrix('x', dtype='int64')

mask = tensor.matrix('mask', dtype=config.floatX)

y = tensor.vector('y', dtype='int64')

n_timesteps = x.shape[0]

n_samples = x.shape[1]

emb = tparams['Wemb'][x.flatten()].reshape([n_timesteps,

n_samples,

options['dim_proj']])

# proj = get_layer(options['encoder'])[1](tparams, emb, options,

proj = lstm_layer(tparams, emb, options, mask=mask)

# if options['encoder'] == 'lstm':

proj = (proj * mask[:, :, None]).sum(axis=0)

proj = proj / mask.sum(axis=0)[:, None]

if options['use_dropout']:

proj = dropout_layer(proj, use_noise, trng)

pred = tensor.nnet.softmax(tensor.dot(proj, tparams['U']) + tparams['b'])

f_pred_prob = theano.function([x, mask], pred, name='f_pred_prob')

f_pred = theano.function([x, mask], pred.argmax(axis=1), name='f_pred')

off = 1e-8

if pred.dtype == 'float16':

off = 1e-6

cost = -tensor.log(pred[tensor.arange(n_samples), y] + off).mean()

return use_noise, x, mask, y, f_pred_prob, f_pred, cost

line 14-16: assemble word embedding tensor in the shape `n_timesteps` $latex \times$ `n_samples` $latex \times$ `dim_proj`. `n_timesteps` corresponds to `max_num_words` in step 2 and `dim_proj` corresponds to `word_emb_dim`/`num_hidden_units`. Such name mapping persists onwards.

line 17-21: I commented out some lines from original code and modified them into more succinct expressions.

line 18: `proj` is the stacked hidden unit vectors in the shape of `n_timesteps` $latex \times$ `n_samples` $latex \times$ `dim_proj`. (The order of dimensions is different than we introduce in step 4.)

line 20-21: mean pooling from step 5. `mask` is a binary matrix of size `n_timesteps` $latex \times$ `n_samples`, of which 1 denotes a word exists.

Now let’s look at line 18 proj = lstm_layer(tparams, emb, options, mask=mask) :

def lstm_layer(tparams, state_below, options, mask=None):

nsteps = state_below.shape[0]

dim_proj = options['dim_proj']

n_samples = state_below.shape[1]

# if state_below.ndim == 3:

# else:

# n_samples = 1

assert mask is not None

def _slice(_x, n, dim):

# if _x.ndim == 3:

# return _x[:, :, n * dim:(n + 1) * dim]

return _x[:, n * dim:(n + 1) * dim]

def _step(m_, x_, h_, c_):

preact = tensor.dot(h_, tparams['lstm_U'])

preact += x_

i = tensor.nnet.sigmoid(_slice(preact, 0, dim_proj))

f = tensor.nnet.sigmoid(_slice(preact, 1, dim_proj))

o = tensor.nnet.sigmoid(_slice(preact, 2, dim_proj))

c = tensor.tanh(_slice(preact, 3, dim_proj))

c = f * c_ + i * c

c = m_[:, None] * c + (1. - m_)[:, None] * c_

h = o * tensor.tanh(c)

h = m_[:, None] * h + (1. - m_)[:, None] * h_

return h, c

state_below = (tensor.dot(state_below, tparams['lstm_W']) +

tparams['lstm_b'])

rval, updates = theano.scan(_step,

sequences=[mask, state_below],

outputs_info=[tensor.alloc(numpy_floatX(0.),

n_samples,

dim_proj),

tensor.alloc(numpy_floatX(0.),

n_samples,

dim_proj)],

name='lstm_layers',

n_steps=nsteps)

return rval[0] # hidden units outputs h: n_timesteps * n_samples * dim

lstm_layer uses `theano.scan` to loop through the input. It only returns `rval[0]` because the upper level logistic regression only needs hidden units’ outputs.