I’d like to talk more about policy gradient [1], which I touched upon in 2017. Common online tutorials spend a lot of space on the policy gradient theorem, proving that the gradient of the policy $\pi_\theta$ in the direction that improves accumulated returns is:

![Rendered by QuickLaTeX.com J(\theta)\newline=\mathbb{E}_{s,a \sim \pi_\theta} [Q^{\pi_\theta}(s,a)] \newline=\mathbb{E}_{s \sim \pi_\theta}[ \mathbb{E}_{a \sim \pi_\theta} [Q^{\pi_\theta}(s,a)]]\newline=\mathbb{E}_{s\sim\pi_\theta} [\int \pi_\theta(a|s) Q^{\pi_\theta}(s,a) da]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-f1469552882502e8a85fcd638aa96963_l3.png)

![Rendered by QuickLaTeX.com \nabla_{\theta} J(\theta) \newline= \mathbb{E}_{s\sim\pi_\theta} [\int \nabla \pi_\theta(a|s) Q^{\pi_\theta}(s,a) da] \quad\quad \text{Treat } Q^{\pi_\theta}(s,a) \text{ as non-differentiable} \newline= \mathbb{E}_{s\sim\pi_\theta} [\int \pi_\theta(a|s) \frac{\nabla \pi_\theta(a|s)}{\pi_\theta(a|s)} Q^{\pi_\theta}(s,a) da] \newline= \mathbb{E}_{s, a \sim \pi_\theta} [Q^{\pi_\theta}(s,a) \nabla_\theta log \pi_\theta(a|s)] \newline \approx \frac{1}{N} [G_t \nabla_\theta log \pi_\theta(a_t|s_t)] \quad \quad s_t, a_t \sim \pi_\theta](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-f73caef052359dd2ab0ff34a060164b9_l3.png)

where $G_t$ is the accumulated return starting from step $t$, computed from real samples.
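As a sanity check on the estimator above, here is a minimal sketch that assumes a one-state problem (a bandit) with a softmax policy over three actions. The helper names (`softmax`, `exact_gradient`, `score_function_estimate`), the Q-values, and the noise level are all made up for illustration. In this toy setting the exact gradient is available in closed form, so the sampled estimate can be compared against it.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def exact_gradient(logits, q_values):
    """Closed-form gradient of J = sum_a pi(a) Q(a) w.r.t. the logits."""
    pi = softmax(logits)
    return pi * (q_values - pi @ q_values)

def score_function_estimate(logits, q_values, n_samples, rng, noise_std=0.1):
    """Monte Carlo estimate (1/N) sum_t G_t * grad log pi(a_t), a_t ~ pi_theta."""
    pi = softmax(logits)
    actions = rng.choice(len(pi), size=n_samples, p=pi)
    # G_t: a noisy sampled return whose mean is Q(a_t) (toy assumption).
    returns = q_values[actions] + rng.normal(0.0, noise_std, size=n_samples)
    # For a softmax policy, grad_theta log pi(a) = onehot(a) - pi.
    scores = np.eye(len(pi))[actions] - pi
    return (returns[:, None] * scores).mean(axis=0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = np.array([0.1, -0.3, 0.5])
    q_values = np.array([1.0, 2.0, 0.5])
    print("exact   :", exact_gradient(logits, q_values))
    print("estimate:", score_function_estimate(logits, q_values, 200_000, rng))
```

With enough samples the two vectors should agree up to Monte Carlo noise.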

Note that the above gradient is only valid for on-policy policy gradient, because $s_t, a_t \sim \pi_\theta$. How can we derive the gradient for off-policy policy gradient, i.e., $s_t, a_t \sim \pi_b$ where $\pi_b$ is a different behavior policy? I think the simplest way to derive it is to connect importance sampling with policy gradient.

A quick introduction to importance sampling [2]: estimating a quantity under one distribution is equivalent to estimating it under a different distribution with an importance sampling weight, i.e., $\mathbb{E}_{x \sim p(x)}[f(x)] = \mathbb{E}_{x \sim q(x)}\left[\frac{p(x)}{q(x)} f(x)\right]$. This comes in handy when you can’t collect data using $p(x)$ but you can still compute $p(x)$ when $x$ is sampled from $q(x)$.
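The identity above can be checked numerically. The sketch below is only an illustration with assumed distributions: the target $p$ is a normal with mean 1 and standard deviation 1, the sampling distribution $q$ is a normal with mean 0 and standard deviation 2, and $f(x) = x^2$, so the true value under $p$ is $1^2 + 1 = 2$. The function names are hypothetical.

```python
import numpy as np

def normal_pdf(x, mean, std):
    """Density of a univariate normal distribution."""
    return np.exp(-0.5 * ((x - mean) / std) ** 2) / (std * np.sqrt(2.0 * np.pi))

def importance_sampling_estimate(f, p_pdf, q_pdf, q_samples):
    """Estimate E_{x~p}[f(x)] from samples drawn from q, weighted by p(x)/q(x)."""
    weights = p_pdf(q_samples) / q_pdf(q_samples)
    return float(np.mean(weights * f(q_samples)))

def demo(n_samples=200_000, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.normal(0.0, 2.0, size=n_samples)      # samples come from q only
    return importance_sampling_estimate(
        f=lambda v: v ** 2,
        p_pdf=lambda v: normal_pdf(v, 1.0, 1.0),  # target p = N(1, 1)
        q_pdf=lambda v: normal_pdf(v, 0.0, 2.0),  # proposal q = N(0, 2)
        q_samples=x,
    )

if __name__ == "__main__":
    print("importance sampling estimate of E_p[x^2]:", demo())
```

Choosing a $q$ that is wider than $p$ keeps the weights bounded here; a narrow $q$ would make the estimate much noisier.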

In the case of off-policy policy gradient, $J(\theta)$ becomes “the value function of the target policy, averaged over the state distribution of the behavior policy” (from the DPG paper [6]):

![Rendered by QuickLaTeX.com J(\theta)\newline=\mathbb{E}_{s\sim\pi_b}\left[\mathbb{E}_{a \sim \pi_\theta} [Q^{\pi_\theta}(s,a)] \right] \newline=\mathbb{E}_{s,a \sim \pi_b} [\frac{\pi_\theta(a|s)}{\pi_b(a|s)}Q^{\pi_b}(s,a)]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-99250c6e14614db890a3fe8e8487be7e_l3.png)

![Rendered by QuickLaTeX.com \nabla_{\theta} J(\theta) \newline=\mathbb{E}_{s,a \sim \pi_b} [\frac{\nabla_\theta \pi_\theta(a|s)}{\pi_b(a|s)} Q^{\pi_b}(s,a)] \quad\quad \text{Again, treat } Q^{\pi_b}(s,a) \text{ as non-differentiable}\newline=\mathbb{E}_{s,a \sim \pi_b} [\frac{\pi_\theta(a|s)}{\pi_b(a|s)} Q^{\pi_b}(s,a) \nabla_\theta log \pi_\theta(a|s)] \newline \approx \frac{1}{N}[\frac{\pi_\theta(a_t|s_t)}{\pi_b(a_t|s_t)} G_t \nabla_\theta log \pi_\theta(a_t|s_t)] \quad\quad s_t, a_t \sim \pi_b](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-6861dae8d1e302c9bcfc3d06cac970b0_l3.png)
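To see the importance-weighted estimator in action, the following sketch again assumes a one-state bandit with a softmax target policy, so the return of an action does not depend on which policy picked it. Actions are drawn from a fixed behavior policy `behavior_probs`; every name and number here is an assumption for illustration, not something from the references.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def exact_gradient(logits, q_values):
    """Closed-form gradient of sum_a pi_theta(a) Q(a) w.r.t. the logits."""
    pi = softmax(logits)
    return pi * (q_values - pi @ q_values)

def off_policy_estimate(logits, behavior_probs, q_values, n_samples, rng,
                        noise_std=0.1):
    """(1/N) sum_t [pi_theta(a_t)/pi_b(a_t)] * G_t * grad log pi_theta(a_t)."""
    pi = softmax(logits)
    # Data is collected by the behavior policy, not by pi_theta.
    actions = rng.choice(len(pi), size=n_samples, p=behavior_probs)
    returns = q_values[actions] + rng.normal(0.0, noise_std, size=n_samples)
    weights = pi[actions] / behavior_probs[actions]   # importance weights
    scores = np.eye(len(pi))[actions] - pi            # grad log pi_theta(a_t)
    return ((weights * returns)[:, None] * scores).mean(axis=0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = np.array([0.1, -0.3, 0.5])
    behavior_probs = np.array([0.5, 0.3, 0.2])
    q_values = np.array([1.0, 2.0, 0.5])
    print("exact   :", exact_gradient(logits, q_values))
    print("estimate:", off_policy_estimate(logits, behavior_probs, q_values,
                                           200_000, rng))
```

The estimate should match the on-policy gradient up to sampling noise, even though no action was sampled from $\pi_\theta$.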

As we know, adding a state-dependent baseline to on-policy policy gradient reduces the variance of the gradient [4] without introducing bias. By the derivation below, adding a state-dependent baseline to off-policy policy gradient does not introduce bias either:

![Rendered by QuickLaTeX.com \mathbb{E}_{s,a \sim \pi_b} \left[\frac{\pi_\theta(a|s)}{\pi_b(a|s)} b(s) \nabla_\theta log \pi_\theta(a|s)\right]\newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \cdot \mathbb{E}_{a \sim \pi_b}\left[\frac{\pi_\theta(a|s)}{\pi_b(a|s)} \nabla_\theta log \pi_\theta(a|s)\right]\right] \newline = \mathbb{E}_{s \sim \pi_b}\left[b(s) \int \pi_b(a|s) \nabla_\theta \frac{\pi_\theta(a|s)}{\pi_b(a|s)} da\right]\newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \int \nabla_\theta \pi_\theta(a|s) da\right]\newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \nabla_\theta \int \pi_\theta(a|s) da\right] \newline=\mathbb{E}_{s \sim \pi_b}\left[b(s) \nabla_\theta 1 \right] \newline=0](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-ccc9f1f74889590d36787146035b678d_l3.png)
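The derivation above can also be verified exactly for a single state with discrete actions, by summing over all actions instead of sampling. This is a small sketch assuming a softmax target policy; `off_policy_baseline_term` and the numbers used are hypothetical.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over a 1-D array of logits."""
    shifted = logits - np.max(logits)
    exp = np.exp(shifted)
    return exp / exp.sum()

def off_policy_baseline_term(logits, behavior_probs, baseline):
    """Exact E_{a~pi_b}[(pi_theta/pi_b) * b(s) * grad log pi_theta(a|s)] for one state."""
    pi = softmax(logits)
    weights = pi / behavior_probs
    scores = np.eye(len(pi)) - pi   # row a holds grad log pi_theta(a) w.r.t. logits
    # Expectation under pi_b: sum_a pi_b(a) * weight(a) * b * score(a).
    return baseline * (behavior_probs * weights) @ scores

if __name__ == "__main__":
    logits = np.array([0.1, -0.3, 0.5])
    behavior_probs = np.array([0.5, 0.3, 0.2])
    print(off_policy_baseline_term(logits, behavior_probs, baseline=3.7))
```

Up to floating-point error the result is the zero vector for any baseline value and any behavior policy with full support.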

You have probably noticed that during the derivation of on-policy policy gradient, we treat $Q^{\pi_\theta}(s,a)$ as non-differentiable with regard to $\theta$. However, $Q^{\pi_\theta}(s,a)$ is indeed affected by $\theta$ because it is the return of the choices made by $\pi_\theta$. I am not sure if treating $Q^{\pi_\theta}(s,a)$ as differentiable would help improve performance, but there are definitely papers doing so in specific applications. One such example is seq2slate [5] (where, in their notation, $\pi$ refers to actions, $x$ refers to states, and $\mathcal{L}_\pi(\theta)$ is the same as our $Q^{\pi_\theta}(s,a)$):

If we adapt Eqn. 8 to the off-policy setting, we have (the first three lines would be the same):

![Rendered by QuickLaTeX.com \nabla_\theta \mathbb{E}_{\pi \sim p_\theta(\cdot|x)} \left[ \mathcal{L}_\pi(\theta)\right] \newline= \nabla_\theta \sum\limits_\pi p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\newline=\sum\limits_\pi \left[ \nabla_\theta p_\theta(\pi|x) \cdot \mathcal{L}_\pi(\theta)+p_\theta(\pi|x) \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=p_b(\pi|x) \cdot \sum\limits_\pi \left[ \frac{\nabla_\theta p_\theta(\pi|x)}{p_b(\pi|x)} \cdot \mathcal{L}_\pi(\theta)+\frac{p_\theta(\pi|x)}{p_b(\pi|x)} \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=\mathbb{E}_{\pi\sim p_b(\cdot|x)}\left[\frac{1}{p_b(\pi|x)}\nabla_\theta\left(p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\right)\right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-227f028e4d3bc7f11df8cb44f48f3e17_l3.png)

If we apply the same rewriting trick to Eqn. 8, we get another form of the gradient in the on-policy setting:

![Rendered by QuickLaTeX.com \nabla_\theta \mathbb{E}_{\pi \sim p_\theta(\cdot|x)} \left[ \mathcal{L}_\pi(\theta)\right] \newline= \nabla_\theta \sum\limits_\pi p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\newline=\sum\limits_\pi \left[ \nabla_\theta p_\theta(\pi|x) \cdot \mathcal{L}_\pi(\theta)+p_\theta(\pi|x) \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=p_\theta(\pi|x) \cdot \sum\limits_\pi \left[ \frac{\nabla_\theta p_\theta(\pi|x)}{p_\theta(\pi|x)} \cdot \mathcal{L}_\pi(\theta)+\frac{p_\theta(\pi|x)}{p_\theta(\pi|x)} \cdot \nabla_\theta \mathcal{L}_\pi(\theta) \right]\newline=\mathbb{E}_{\pi\sim p_\theta(\cdot|x)}\left[\frac{1}{p_\theta(\pi|x)}\nabla_\theta\left(p_\theta(\pi|x)\mathcal{L}_\pi(\theta)\right)\right]](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-907143278650354a5e362762565099f1_l3.png)

where, in the last few equations, any $p_\theta(\pi|x)$ not acted on by $\nabla_\theta$ is treated as non-differentiable.
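To make the difference between the two choices concrete, here is a toy sketch with a categorical $p_\theta$ over three outcomes and a made-up differentiable return $\mathcal{L}_k(\theta) = -(\theta_k - c_k)^2$. Both the return and the `targets` vector $c$ are assumptions for illustration only. The code compares the off-policy form above (summed exactly over outcomes) with a finite-difference gradient, and with the plain policy gradient that ignores $\nabla_\theta \mathcal{L}$.

```python
import numpy as np

def softmax(theta):
    shifted = theta - np.max(theta)
    exp = np.exp(shifted)
    return exp / exp.sum()

def returns_per_outcome(theta, targets):
    """Hypothetical differentiable return L_k(theta) = -(theta_k - targets_k)^2."""
    return -(theta - targets) ** 2

def objective(theta, targets):
    """E_{pi ~ p_theta}[L_pi(theta)] computed as an exact sum."""
    return softmax(theta) @ returns_per_outcome(theta, targets)

def off_policy_full_gradient(theta, targets, p_b):
    """E_{pi ~ p_b}[(1/p_b(pi)) * grad_theta(p_theta(pi) * L_pi(theta))]."""
    p = softmax(theta)
    L = returns_per_outcome(theta, targets)
    dp = np.diag(p) - np.outer(p, p)            # dp[k, j] = d p_k / d theta_j
    dL = np.diag(-2.0 * (theta - targets))      # dL[k, j] = d L_k / d theta_j
    per_outcome = (dp * L[:, None] + p[:, None] * dL) / p_b[:, None]
    return p_b @ per_outcome                    # expectation under p_b

def policy_only_gradient(theta, targets):
    """Plain policy gradient: L is treated as non-differentiable."""
    p = softmax(theta)
    dp = np.diag(p) - np.outer(p, p)
    return returns_per_outcome(theta, targets) @ dp

def finite_difference(fn, theta, eps=1e-6):
    grad = np.zeros_like(theta)
    for j in range(len(theta)):
        step = np.zeros_like(theta)
        step[j] = eps
        grad[j] = (fn(theta + step) - fn(theta - step)) / (2.0 * eps)
    return grad

if __name__ == "__main__":
    theta = np.array([0.2, -0.1, 0.4])
    targets = np.array([1.0, 0.0, -1.0])
    p_b = np.array([0.5, 0.3, 0.2])
    print("full       :", off_policy_full_gradient(theta, targets, p_b))
    print("numerical  :", finite_difference(lambda t: objective(t, targets), theta))
    print("policy only:", policy_only_gradient(theta, targets))
```

The full form matches the numerical gradient of the expected return, while the policy-only form differs by the term $p_\theta(\pi|x) \nabla_\theta \mathcal{L}_\pi(\theta)$. Which of the two works better in practice is exactly the open question raised here.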

Again, I’d like to disclaim that I don’t really know whether we should just make the policy differentiable (policy gradient) or make both the policy and return differentiable (seq2slate Eqn. 8). I’ll report back if I have any empirical findings.

————————— Update 2020.7 ——————-

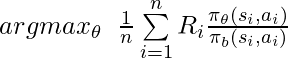

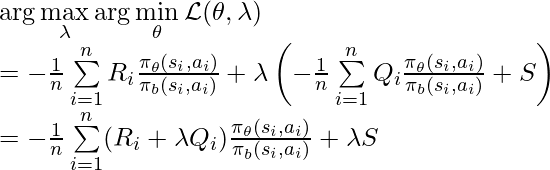

Another topic to consider is constrained optimization in off-policy policy gradient. I am using notation closer to my real-world usage. Assume we are optimizing a policy’s parameters, using data collected by another policy, to maximize some reward function ![]() (i.e., one-step optimization, ![]()), with the constraint that the policy should also achieve at least some amount of ![]() in another reward type ![]():

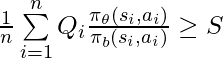

subject to

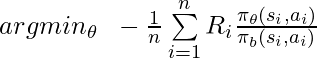

Writing it in a standard optimization format, we have:

subject to

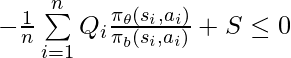

Now we can solve this optimization problem using a Lagrange multiplier. Suppose there is a non-negative multiplier $\lambda$. The augmented objective function becomes the original objective plus an additional penalty, which makes infeasible solutions sub-optimal:

In simpler problems we can just find the saddle point where ![]() and ![](). However, our problem could be so large in scale that finding the saddle point directly is not feasible. Note that one cannot use stochastic gradient descent to find the saddle point because saddle points are not local minima [8].

Following the idea in [7], what we can show is that if we optimize ![]() under different $\lambda$’s, then ![]() has a monotonic trend:

Suppose

For any two $\lambda$’s, ![](), we have:

![]()

![]()

Therefore,

![]()

![]()

Adding both sides preserves the inequality:

![]()

![]()

![]()

![]() (because ![]() the inequality changes direction)
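Since the equations of this argument are not reproduced here, the following is a sketch of how such a monotonicity argument usually goes, under assumed notation that may differ from the original: $f(\theta)$ is the reward being maximized, $g(\theta)$ is the constrained reward with threshold $S$, the augmented objective is $\mathcal{L}(\theta, \lambda) = f(\theta) + \lambda (g(\theta) - S)$, and $\theta_\lambda$ is a maximizer of $\mathcal{L}(\cdot, \lambda)$.

```latex
\begin{aligned}
&\text{Take } \lambda_a < \lambda_b \text{ with maximizers } \theta_{\lambda_a}, \theta_{\lambda_b}. \text{ By optimality of each:} \\
&f(\theta_{\lambda_a}) + \lambda_a\, g(\theta_{\lambda_a}) \;\ge\; f(\theta_{\lambda_b}) + \lambda_a\, g(\theta_{\lambda_b}) \\
&f(\theta_{\lambda_b}) + \lambda_b\, g(\theta_{\lambda_b}) \;\ge\; f(\theta_{\lambda_a}) + \lambda_b\, g(\theta_{\lambda_a}) \\
&\text{Adding both inequalities, the } f \text{ terms cancel:} \\
&\lambda_a\, g(\theta_{\lambda_a}) + \lambda_b\, g(\theta_{\lambda_b}) \;\ge\; \lambda_a\, g(\theta_{\lambda_b}) + \lambda_b\, g(\theta_{\lambda_a}) \\
&(\lambda_a - \lambda_b)\,\bigl(g(\theta_{\lambda_a}) - g(\theta_{\lambda_b})\bigr) \;\ge\; 0 \\
&g(\theta_{\lambda_a}) \;\le\; g(\theta_{\lambda_b}) \qquad \text{(dividing by } \lambda_a - \lambda_b < 0 \text{ flips the inequality)}
\end{aligned}
```

Under these assumptions, the constrained reward attained at the optimum never decreases as $\lambda$ grows. The constant $-\lambda S$ appears on both sides of each inequality and is therefore omitted.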

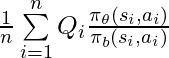

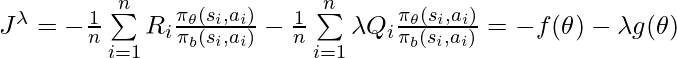

This theorem immediately implies that if we optimize ![]() using normal off-policy policy gradient with a reward of the form ![](), we are essentially doing constrained optimization. The larger the $\lambda$ we use, the more rigid the constraint is. While using a combined reward shape like ![]() looks like a naive way to balance trade-offs, I never thought it could be linked to constrained optimization in this way!
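A quick numerical illustration of this trade-off, under assumptions of my own: there is a finite set of candidate policies, each with a primary reward `f_values[i]` and a constrained reward `g_values[i]`, and the combined reward is `f + lam * g`. The names and the random data are hypothetical. Sweeping `lam` upward, the constrained reward of the best candidate should never decrease.

```python
import numpy as np

def constrained_reward_at_optimum(f_values, g_values, lam):
    """Return g for the candidate that maximizes the combined reward f + lam * g."""
    best = np.argmax(f_values + lam * g_values)
    return g_values[best]

def sweep(f_values, g_values, lambdas):
    """Constrained reward of the optimum for each multiplier in `lambdas`."""
    return np.array([constrained_reward_at_optimum(f_values, g_values, lam)
                     for lam in lambdas])

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    f_values = rng.normal(size=50)   # primary reward of each candidate policy
    g_values = rng.normal(size=50)   # constrained reward of each candidate policy
    lambdas = np.linspace(0.0, 5.0, 21)
    print(sweep(f_values, g_values, lambdas))
```

In practice one could raise the multiplier until the achieved constrained reward clears the required threshold.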

The Lagrange multiplier optimization procedure above follows [9].

————————— Update 2020.7 part (2) ——————-

“Note that one cannot use stochastic gradient descent to find the saddle point because saddle points are not local minima [8].”

This sentence is actually controversial. First, from [10] Section 3, we can see that we can still use stochastic gradient updates to find the saddle point: for ![](), we use gradient ascent; for ![](), we use gradient descent. Second, we can convert ![]() into yet another problem: minimizing the magnitude of the gradient of both ![]() and ![]() [11]. The intuition is that the closer we are to the saddle point, the smaller the magnitude of the gradient of ![]() and ![](). Therefore, we can perform stochastic gradient descent on the magnitude of the gradient.
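Both ideas can be tried on a tiny problem. The sketch below uses a toy of my own choosing, not anything from [10] or [11]: maximize $-x^2$ subject to $x \ge 1$, with Lagrangian $L(x, \lambda) = -x^2 + \lambda (x - 1)$, whose saddle point is $x = 1$, $\lambda = 2$. The first function does gradient ascent on $x$ and projected gradient descent on $\lambda$; the second does plain gradient descent on the squared gradient magnitude. Step sizes and iteration counts are arbitrary.

```python
def lagrangian_gradients(x, lam):
    """Partial derivatives of L(x, lam) = -x**2 + lam * (x - 1)."""
    return -2.0 * x + lam, x - 1.0

def gradient_ascent_descent(steps=2000, lr=0.1):
    """Ascent on x, descent on lam, keeping lam non-negative by projection."""
    x, lam = 0.0, 0.0
    for _ in range(steps):
        grad_x, grad_lam = lagrangian_gradients(x, lam)
        x, lam = x + lr * grad_x, max(0.0, lam - lr * grad_lam)
    return x, lam

def gradient_norm_descent(steps=2000, lr=0.05):
    """Descent on h(x, lam) = (dL/dx)**2 + (dL/dlam)**2."""
    x, lam = 0.0, 0.0
    for _ in range(steps):
        grad_x, grad_lam = lagrangian_gradients(x, lam)
        # Chain rule: d(grad_x)/dx = -2, d(grad_lam)/dx = 1, d(grad_x)/dlam = 1.
        dh_dx = 2.0 * grad_x * (-2.0) + 2.0 * grad_lam
        dh_dlam = 2.0 * grad_x
        x, lam = x - lr * dh_dx, lam - lr * dh_dlam
    return x, lam

if __name__ == "__main__":
    print("ascent/descent     :", gradient_ascent_descent())
    print("gradient-norm descent:", gradient_norm_descent())
```

On this convex toy both procedures settle at the same point. Whether they behave as well with stochastic gradients and neural network policies is a separate question.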

References

[1] https://czxttkl.com/2017/05/28/policy-gradient/

[2] https://czxttkl.com/2017/03/30/importance-sampling/

[3] On a Connection between Importance Sampling and the Likelihood Ratio Policy Gradient

[5] https://arxiv.org/pdf/1810.02019.pdf

[6] http://proceedings.mlr.press/v32/silver14.pdf

[7] Deep Learning with Logged Bandit Feedback

[8] https://stackoverflow.com/a/12284903

[9] https://people.duke.edu/~hpgavin/cee201/LagrangeMultipliers.pdf

[10] Reward Constrained Policy Optimization

[11] https://en.wikipedia.org/wiki/Lagrange_multiplier#Example_4:_Numerical_optimization