Here, I am listing some classic proofs regarding the convergence of Q-learning and SARSA in finite MDPs (by definition, in finite Markov Decision Process the sets of states, actions and rewards are finite [1]).

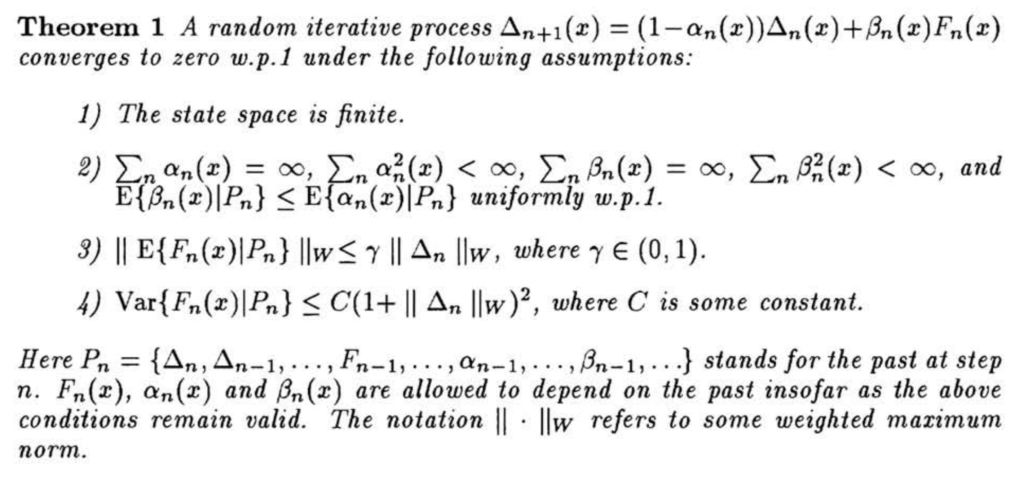

The very first Q-learning convergence proof comes from [4]. The proof is based on a very useful theorem:

Note that this theorem is general to be applied on multi-dimensional space (![]() can be multi-dimensional).

can be multi-dimensional).

We can apply Theorem 1 to Q-learning whose update rule is as follows:![]()

Subtracting ![]() from both sides and letting

from both sides and letting ![]() , we have:

, we have:![Rendered by QuickLaTeX.com \triangle_t(s_t,a_t)\newline=(1-\alpha_t(s_t, a_t))\triangle_t(s_t, a_t) + \alpha_t(s,a)[r_t + \gamma\max_{b\in \mathcal{A}}Q_t(s_{t+1}, b) - Q^*(s_t, a_t)]\newline=(1-\alpha_t(s_t, a_t))\triangle_t(s_t, a_t) + \alpha_t(s_t, a_t)F_t(s_t,a_t)](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-a4bd0cd1322d0e6aad3dcc206774cae9_l3.png)

We can also let ![]() , the learning rate, to be zero for

, the learning rate, to be zero for ![]() . Till this point, we have modeled the Q-learning process exactly as the random iterative process stated in Theorem 1. Hence, as long as we can prove the 4 assumptions are held, we can prove Q-learning converges to the optimal Q-values (i.e.,

. Till this point, we have modeled the Q-learning process exactly as the random iterative process stated in Theorem 1. Hence, as long as we can prove the 4 assumptions are held, we can prove Q-learning converges to the optimal Q-values (i.e., ![]() converges to 0).

converges to 0).

Assumption 1: trivial to hold since we are focusing on finite MDPs

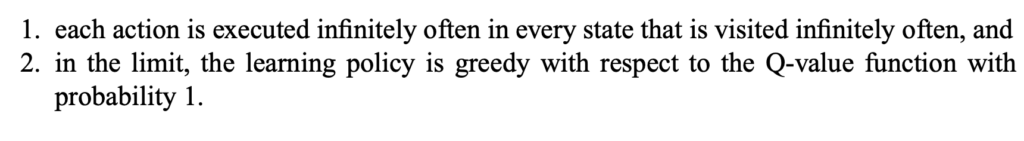

Assumption 2: ![]() based on our formulation. If we apply a GLIE learning policy (“greedy in the limit with infinite exploration”) [2], then we make assumption 2 hold:

based on our formulation. If we apply a GLIE learning policy (“greedy in the limit with infinite exploration”) [2], then we make assumption 2 hold:

Assumption 3: ![]() . There are some notations that may not look familiar to ordinary audience. First,

. There are some notations that may not look familiar to ordinary audience. First, ![]() means the weighted maximum norm [5]. Therefore,

means the weighted maximum norm [5]. Therefore, ![]() . Second,

. Second, ![]() just means

just means ![]() can be estimated using all the past interactions, i.e.,

can be estimated using all the past interactions, i.e., ![]() in

in ![]() can be estimated conditional on all the past information rather than just

can be estimated conditional on all the past information rather than just ![]() .

.

To prove assumption 3 holds, we first look at how we define the optimal Q-function:![]()

We can show that ![]() is a fix point of a contraction operator

is a fix point of a contraction operator ![]() , defined over a generic function

, defined over a generic function ![]() :

:![]()

Actually, ![]() , a

, a ![]() -contraction, because:

-contraction, because:![Rendered by QuickLaTeX.com \|\textbf{H}q_1 - \textbf{H}q_2\|_\infty \newline=\max\limits_{s,a} \big|\sum\limits_{s'\in \mathcal{S}} P_a(s,s')[r(s,a,s')+\gamma\max\limits_{a' \in \mathcal{A}}q_1(s',a') - r(s,a, s')-\gamma \max\limits_{a'\in\mathcal{A}}q_2(s',a')]\big|\newline=\max\limits_{s,a}\gamma \big|\sum\limits_{s'\in\mathcal{S}}P_a(s,s') [\max\limits_{a' \in \mathcal{A}}q_1(s',a')-\max\limits_{a' \in \mathcal{A}}q_2(s',a')]\big|\newline\text{Think of }\max |\textbf{p}*\textbf{x}| \leq \max|p_1\cdot\max|\textbf{x}|, p_2\cdot \max|\textbf{x}|, \cdots| \text{ if } \textbf{p}>0\newline\leq\max\limits_{s,a}\gamma \sum\limits_{s'\in \mathcal{S}}P_a(s,s')\big|\max\limits_{a' \in \mathcal{A}}q_1(s',a')-\max\limits_{a' \in \mathcal{A}}q_2(s',a')\big|\newline\text{norm property: }|\textbf{u}-\textbf{v}|_\infty \geq |\textbf{u}|_\infty-|\textbf{v}|_\infty\newline\leq \max\limits_{s,a}\gamma \sum\limits_{s'\in \mathcal{S}}P_a(s,s')\max\limits_{a' \in \mathcal{A}}\big|q_1(s',a')-q_2(s',a')\big| \newline \text{Enlarge the domain of max} \newline\leq \max\limits_{s,a}\gamma \sum\limits_{s'\in \mathcal{S}}P_a(s,s')\max\limits_{s'' \in \mathcal{S},a'' \in \mathcal{A}}\big|q_1(s'',a'')-q_2(s'',a'')\big|\newline=\max\limits_{s,a}\gamma \sum\limits_{s'\in \mathcal{S}}P_a(s,s')\|q_1-q_2\|_\infty\newline=\gamma \|q_1-q_2\|_\infty](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-3d90ea69364bba6dcb0961b4034592b0_l3.png)

Note in one of our previous note [6], we talked about the intuitive meaning of ![]() -contraction: consecutive application of

-contraction: consecutive application of ![]() makes the function

makes the function ![]() closer and closer to the fixed point

closer and closer to the fixed point ![]() at the rate of

at the rate of ![]() . Another intuitive understanding of

. Another intuitive understanding of ![]() (in terms of L2 norm) is that the distance between

(in terms of L2 norm) is that the distance between ![]() and

and ![]() is closer after applying

is closer after applying ![]() :

:

After proving ![]() is a

is a ![]() -contraction, we now prove assumption 3:

-contraction, we now prove assumption 3:![Rendered by QuickLaTeX.com \|E\{F_t(s,a) | P_n\}\|_w \newline=\sum\limits_{s'\in\mathcal{S}}P_a(s,s')[r(s,a,s')+\gamma\max\limits_{a'\in\mathcal{A}}Q_t(s', a')-Q^*(s,a)]\newline=\sum\limits_{s'\in\mathcal{S}}P_a(s,s')[r(s,a,s')+\gamma\max\limits_{a'\in\mathcal{A}}Q_t(s', a')]-Q^*(s,a)\newline=(\textbf{H}Q_t)(s,a)-Q^*(s,a)\newline\text{Using the fact that }Q^*=\textbf{H}Q^*\newline=(\textbf{H}Q_t)(s,a)-(\textbf{H}Q^*)(s,a)\newline \leq \|\textbf{H}Q_t-\textbf{H}Q^*\|_\infty\newline\leq \gamma \|Q_t-Q^*\|_\infty\newline=\gamma \|\triangle_t\|_\infty](https://czxttkl.com/wp-content/ql-cache/quicklatex.com-e17fe7f973a6af8acded5c30b86cc38f_l3.png)

It is less obvious to me how to prove assumption 4, even after reading [3] and proposition 5.5 in [8]. But the take away of assumption 4 is that the variance of ![]() should be bounded.

should be bounded.

At last, y0u can prove the convergence of SARSA [2] in a similar fashion. Hope I’ll have time to cover in the future.

I think the most important note to take away from this post is the pattern to prove convergence of a learning algorithm. Researchers often need to propose variants of Q-learning (such as soft Q-values in maximum entropy environment [6], or SlateQ for dealing with combinatorial actions [9]). They usually need to prove convergence at least in the case of finite MDPs. One can start from the optimal Q-function of their learning algorithm and prove it is a fixed point of a contraction operator. Then, look at the update rule and construct a random iterative process as stated in Theorem 1. Finally, prove the 4 assumptions hold.

Reference

[1] https://medium.com/harder-choices/finite-markov-decision-process-8a34f5e571f9

[2] Convergence Results for Single-Step On-Policy

Reinforcement-Learning Algorithms https://link.springer.com/content/pdf/10.1023/A:1007678930559.pdf

[3] Convergence of Q-learning: a simple proof http://users.isr.ist.utl.pt/~mtjspaan/readingGroup/ProofQlearning.pdf

[4] Convergence of Stochastic Iterative Dynamic Programming Algorithms https://papers.nips.cc/paper/764-convergence-of-stochastic-iterative-dynamic-programming-algorithms.pdf

[7] norm properties: https://en.wikipedia.org/wiki/Norm_(mathematics)#Properties

[8] Neuro Dynamic Programming https://www.dropbox.com/s/naenlfpy0f9vmvt/neuro-dynamic-programming-optimization-and-neural-.pdf?dl=0

[9] SlateQ: https://arxiv.org/abs/1905.12767