There are several online posts [1][2] that illustrate the idea of the Transformer, the model introduced in the paper “Attention Is All You Need” [4].

Based on [1] and [2], I am sharing a short tutorial for implementing a Transformer [3]. In this tutorial, the task is “copy-paste”, i.e., to let a Transformer learn to output the same sequence as the input sequence. The symbols used in the code are:

| Name | Meaning |
| --- | --- |
| src_vocab_size=12 | The vocabulary size of the input sequence. |
| tgt_vocab_size=12 | The vocabulary size of the output sequence. Since our task is to copy the input sequence, tgt_vocab_size = src_vocab_size. We reserve symbol 0 for padding and symbol 1 for the decoder starter symbol, and we assume possible real symbols are from 2 to 11. Therefore, the total vocabulary size is 12. |
| dim_model=512 | The dimension of the embedding, as well as the output of self-attention, and the input/output of the position-wise feed-forward model. |
| dim_feedforward=256 | The size of the hidden layer in the position-wise feed-forward layer. |
| d_k=64 | The dimension of each attention head. |
| num_heads=8 | The number of attention heads. For the sake of computational convenience, d_k * num_heads should be equal to dim_model. |
| seq_len=7 | The length of input sequences. |
| batch_size=30 | Batch size, the number of input sequences per batch. |
| num_stacked_layers=6 | The number of layers to be stacked in both Encoder and Decoder. |

Let’s first look at what the input/output data looks like, which is generated by data_gen. Each Batch instance contains several essential pieces of information to be used in training:

- src (batch_size x seq_len): the input sequence to the encoder

- src_mask (batch_size x 1 x seq_len): the mask of the input sequence, which controls which input symbols each symbol is allowed to attend to

- trg_y (batch_size x seq_len): the output sequence as the label, which is the same as src given that our task is simply “copy-paste”

- trg (batch_size x seq_len): the input sequence to the decoder. The first symbol of each sequence is symbol 1, which kicks off the decoder; the remaining symbols are trg_y shifted right by one position, so the last symbol of trg is the second-to-last symbol of trg_y.

- trg_mask (batch_size x seq_len x seq_len): the mask of the output sequence, so that at each decoding step, a symbol being decoded can attend to other already decoded symbols.

trg_mask[i, j, k], a binary value, indicates whether in the i-th output sequence the j-th symbol can attend to the k-th symbol. Since a symbol can attend to itself and to all its preceding symbols, trg_mask[i, j, k] = 1 for all k <= j.
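
To make the fields above concrete, here is a minimal sketch of how one batch for the copy task could be built. It uses NumPy rather than the PyTorch code in [3], and `make_batch` and `subsequent_mask` are illustrative names chosen for this example, not necessarily how data_gen is written.

```python
import numpy as np

PAD, START = 0, 1  # symbol 0 is padding, symbol 1 starts the decoder


def subsequent_mask(size):
    """Lower-triangular mask: position j may attend to positions k <= j."""
    return np.tril(np.ones((1, size, size), dtype=bool))


def make_batch(batch_size=30, seq_len=7, vocab_size=12, seed=0):
    rng = np.random.default_rng(seed)
    # Real symbols are drawn from 2..vocab_size-1 (the upper bound is exclusive).
    src = rng.integers(2, vocab_size, size=(batch_size, seq_len))
    trg_y = src.copy()  # copy task: the label equals the input
    # Decoder input: the start symbol followed by the label shifted right by one.
    start = np.full((batch_size, 1), START)
    trg = np.concatenate([start, trg_y[:, :-1]], axis=1)
    src_mask = (src != PAD)[:, None, :]  # (batch_size, 1, seq_len)
    # Hide both padding and future positions from the decoder.
    trg_mask = (trg != PAD)[:, None, :] & subsequent_mask(seq_len)
    return src, src_mask, trg, trg_y, trg_mask


src, src_mask, trg, trg_y, trg_mask = make_batch()
print(src.shape, src_mask.shape, trg_mask.shape)  # (30, 7) (30, 1, 7) (30, 7, 7)
```

Because the sequences here contain no padding, src_mask is all ones and trg_mask reduces to the lower-triangular pattern.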

Now, let’s look at the EncoderLayer class. There are num_stacked_layers=6 encoder layers in one Encoder. The bottom layer takes the symbol embeddings as input, while each of the upper layers takes the previous layer’s output. Both the symbol embeddings and each layer’s output have the same dimension `batch_size x seq_len x dim_model`, so in theory you can stack as many layers as needed because they all have compatible input/output. The embedding step converts input symbols into embeddings and adds a positional encoding, which helps the model learn positional information.
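
The embedding-plus-position step can be sketched as follows. This assumes the sinusoidal positional encoding described in [4] and uses a random table as a stand-in for the learned embedding; it is a NumPy illustration with made-up names, not the code in [3].

```python
import numpy as np


def positional_encoding(seq_len, dim_model):
    """Sinusoidal encoding: sin on even dimensions, cos on odd ones."""
    pos = np.arange(seq_len)[:, None]          # (seq_len, 1)
    i = np.arange(0, dim_model, 2)[None, :]    # even dimension indices
    angle = pos / np.power(10000.0, i / dim_model)
    pe = np.zeros((seq_len, dim_model))
    pe[:, 0::2] = np.sin(angle)
    pe[:, 1::2] = np.cos(angle)
    return pe


batch_size, seq_len, dim_model, vocab_size = 30, 7, 512, 12
rng = np.random.default_rng(0)
embedding = rng.normal(size=(vocab_size, dim_model))  # stand-in for a learned table
src = rng.integers(2, vocab_size, size=(batch_size, seq_len))

# Look up embeddings, scale by sqrt(dim_model) as in [4], then add positions.
x = embedding[src] * np.sqrt(dim_model) + positional_encoding(seq_len, dim_model)
print(x.shape)  # (30, 7, 512)
```

The positional encoding has shape `seq_len x dim_model` and is broadcast over the batch, so every sequence receives the same position signal.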

One EncoderLayer does the following things:

- It has three matrices stored in `MultiHeadedAttention.linears[:3]`, which we call the query transformation matrix, key transformation matrix, and value transformation matrix. They transform the input from the previous layer into three matrices that the self-attention module will use. Each of the output matrices, which we call query, key, and value, has the shape `batch_size x num_heads x seq_len x d_k`.

- The transformed matrices are used as query, key, and value in self-attention (the `attention` function). The attention is calculated based on Eqn. 1 in [4]:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

The attention function also needs a mask as input. The mask tells it, for each symbol, which other symbols it can attend to (because of padding or other reasons). In our case, the input sequences all have equal length and we allow every symbol to attend to every other symbol, so the mask is always filled with ones.

- The attention outputs of the `num_heads` heads are concatenated and passed through a linear projection layer, which results in an output with shape `batch_size x seq_len x dim_model`.

- The output is finally processed with a residual connection and layer normalization.
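
The steps above can be sketched in NumPy as follows. This is an illustration under simplifying assumptions rather than the code in [3]: the four weight matrices are random stand-ins for the learned linear layers, biases and dropout are omitted, and `split_heads` is a helper name introduced only for this example.

```python
import numpy as np


def attention(query, key, value, mask=None):
    """Scaled dot-product attention (Eqn. 1 in [4])."""
    d_k = query.shape[-1]
    scores = query @ np.swapaxes(key, -2, -1) / np.sqrt(d_k)
    if mask is not None:
        scores = np.where(mask, scores, -1e9)  # blocked positions get ~0 weight
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ value, weights


def split_heads(x, num_heads):
    """(batch, seq_len, dim_model) -> (batch, num_heads, seq_len, d_k)."""
    batch, seq_len, dim_model = x.shape
    d_k = dim_model // num_heads
    return x.reshape(batch, seq_len, num_heads, d_k).transpose(0, 2, 1, 3)


batch_size, seq_len, dim_model, num_heads = 30, 7, 512, 8
rng = np.random.default_rng(0)
x = rng.normal(size=(batch_size, seq_len, dim_model))
# Random stand-ins for the query, key, value, and output projections.
w_q, w_k, w_v, w_o = (
    rng.normal(size=(dim_model, dim_model)) / np.sqrt(dim_model) for _ in range(4)
)

q, k, v = (split_heads(x @ w, num_heads) for w in (w_q, w_k, w_v))
# All-ones mask with an extra axis so it broadcasts over the heads.
src_mask = np.ones((batch_size, 1, 1, seq_len), dtype=bool)
heads, weights = attention(q, k, v, src_mask)
# Concatenate the heads and apply the output projection.
concat = heads.transpose(0, 2, 1, 3).reshape(batch_size, seq_len, dim_model)
out = concat @ w_o
print(q.shape, out.shape)  # (30, 8, 7, 64) (30, 7, 512)
```

Each row of `weights` sums to 1, so every output position is a weighted average of the value vectors it is allowed to see.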

So far, the encoder part can be illustrated in the following diagram [1] (suppose our input sequence has length 2, containing the symbols 2 and 3):

One DecoderLayer does the following things:

- It first does self-attention on the target sequence (`batch.trg`, not the `trg_y` sequence), which generates attention of shape `batch_size x seq_len x dim_model`. The first symbol of a target sequence is 1, a symbol that tells the decoder to start decoding.

- The target sequence then performs attention on the encoder, i.e., it uses the target sequence’s self-attention output as the query and the final encoder layer’s output as the key and value.

- It passes the encoder-decoder attention’s output through a feed-forward layer, with layer normalization and a residual connection. The output of one DecoderLayer has the shape `batch_size x seq_len x dim_model`. Again, this shape allows you to stack as many layers as you want.
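
The three decoder steps can be sketched as below. This is a simplified NumPy illustration, not the code in [3]: it uses a single attention head with no learned query/key/value projections, random stand-in weights for the feed-forward layer, and applies layer normalization after each residual connection as described in [4].

```python
import numpy as np


def attention(query, key, value, mask=None):
    scores = query @ np.swapaxes(key, -2, -1) / np.sqrt(query.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -1e9)
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ value, weights


def layer_norm(x, eps=1e-6):
    """Normalize each position's vector (learned gain and bias omitted)."""
    mean = x.mean(axis=-1, keepdims=True)
    std = x.std(axis=-1, keepdims=True)
    return (x - mean) / (std + eps)


batch_size, seq_len, dim_model, dim_feedforward = 30, 7, 512, 256
rng = np.random.default_rng(0)
trg_emb = rng.normal(size=(batch_size, seq_len, dim_model))  # embedded batch.trg
memory = rng.normal(size=(batch_size, seq_len, dim_model))   # final encoder output
trg_mask = np.tril(np.ones((1, seq_len, seq_len), dtype=bool))
src_mask = np.ones((batch_size, 1, seq_len), dtype=bool)

# 1. Masked self-attention over the target sequence.
self_out, self_w = attention(trg_emb, trg_emb, trg_emb, trg_mask)
x = layer_norm(trg_emb + self_out)
# 2. Encoder-decoder attention: query from the decoder, key/value from the encoder.
cross_out, _ = attention(x, memory, memory, src_mask)
x = layer_norm(x + cross_out)
# 3. Position-wise feed-forward layer with a ReLU hidden layer.
w1 = rng.normal(size=(dim_model, dim_feedforward)) / np.sqrt(dim_model)
w2 = rng.normal(size=(dim_feedforward, dim_model)) / np.sqrt(dim_feedforward)
x = layer_norm(x + np.maximum(x @ w1, 0.0) @ w2)
print(x.shape)  # (30, 7, 512)
```

In `self_w`, the first target position places all of its weight on itself, which is the effect of trg_mask hiding the symbols that have not been decoded yet.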

The decoder part is shown in the following diagram:

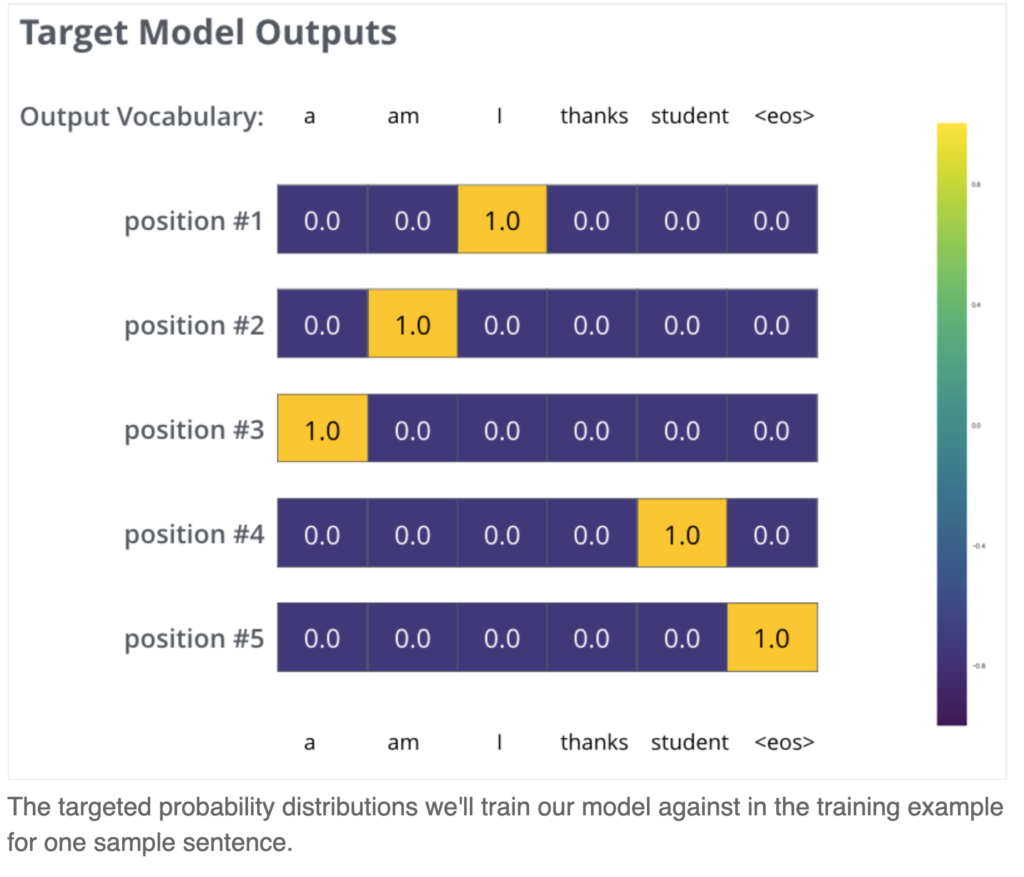

The last part is label fitting. The last DecoderLayer has an output of shape `batch_size x seq_len x dim_model`, i.e., each target symbol’s attention. We pass it through a linear projection and then a softmax, so that each target symbol is associated with a probability distribution over the next symbol to decode. We fit the probability distribution against batch.trg_y by minimizing the KL divergence. Here is one example of label fitting:
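
In code, the projection, softmax, and KL divergence can be sketched roughly as follows. This is a NumPy illustration with random stand-ins for the decoder output and the projection weights, and with hypothetical helper names; it is not the code in [3]. With one-hot targets, as used here, the KL divergence equals the usual cross-entropy.

```python
import numpy as np


def log_softmax(logits):
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def kl_divergence(target_dist, log_pred):
    """Sum of KL(target || pred) over all positions; 0 * log(0) is treated as 0."""
    safe_target = np.where(target_dist > 0, target_dist, 1.0)
    return float(np.sum(target_dist * (np.log(safe_target) - log_pred)))


batch_size, seq_len, dim_model, tgt_vocab_size = 30, 7, 512, 12
rng = np.random.default_rng(0)
decoder_out = rng.normal(size=(batch_size, seq_len, dim_model))
# Random stand-in for the learned linear projection onto the vocabulary.
w_gen = rng.normal(size=(dim_model, tgt_vocab_size)) / np.sqrt(dim_model)
trg_y = rng.integers(2, tgt_vocab_size, size=(batch_size, seq_len))

log_probs = log_softmax(decoder_out @ w_gen)  # (batch_size, seq_len, tgt_vocab_size)
one_hot = np.eye(tgt_vocab_size)[trg_y]       # target distribution without smoothing
loss = kl_divergence(one_hot, log_probs)
print(log_probs.shape)  # (30, 7, 12)
```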

In practice, we also apply label smoothing, so that the target distribution to fit has a small amount of probability mass even on the non-target symbols. For example, the target distribution of position #1 (refer to the diagram) is no longer (0, 0, 1, 0, 0); rather, it becomes (0.1, 0.1, 0.6, 0.1, 0.1) if each non-target symbol is given a probability mass of 0.1. The intuition is that even our labels may not be 100% accurate, and we should not let the model become overly confident in the training data. In other words, label smoothing helps prevent overfitting and, hopefully, improves generalization to unseen data.
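
The smoothed target in the example above can be reproduced with a few lines of NumPy. `smooth_one_hot` and its `off_mass` parameter are names made up for this sketch, and it follows the numbers in the text (0.1 on every non-target symbol), which is not necessarily the exact smoothing scheme used in [3].

```python
import numpy as np


def smooth_one_hot(targets, vocab_size, off_mass=0.1):
    """Give each non-target symbol `off_mass`; the target gets the remainder."""
    targets = np.asarray(targets)
    dist = np.full(targets.shape + (vocab_size,), off_mass)
    on_mass = 1.0 - off_mass * (vocab_size - 1)
    np.put_along_axis(dist, targets[..., None], on_mass, axis=-1)
    return dist


print(smooth_one_hot([2], vocab_size=5))  # [[0.1 0.1 0.6 0.1 0.1]]
```

The smoothed distribution replaces the one-hot target when computing the KL divergence, so the loss can no longer be driven to zero by putting all probability on a single symbol.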

[1] http://jalammar.github.io/illustrated-transformer/

[2] http://nlp.seas.harvard.edu/2018/04/03/attention.html

[3] https://github.com/czxttkl/Tutorials/blob/master/experiments/transformer/try_transformer.py

[4] https://arxiv.org/abs/1706.03762