In this post, I show how to compile Cython modules on Windows, a process that often produces confusing error messages.

(Cython references: http://cython.org/, http://docs.cython.org/index.html, https://github.com/cython/cython)

1. You should already have a proper directory structure that holds your “.pyx” file, and `setup.py` needs to be configured correctly. Here is an example `setup.py`:

    try:
        from setuptools import setup
    except ImportError:
        from distutils.core import setup

    from Cython.Build import cythonize
    import numpy

    config = {
        'description': 'SimpleCNN',
        'author': 'Zhengxing Chen',
        'author_email': 'czxttkl@gmail.com',
        'install_requires': ['nose', 'numpy', 'scipy', 'cython'],
        'packages': ['simplecnn'],
        'name': "SimpleCNN",
        'ext_modules': cythonize("simplecnn/pool.pyx"),  # change path and name accordingly
        'include_dirs': [numpy.get_include()]  # my cython module requires numpy
    }

    setup(**config)

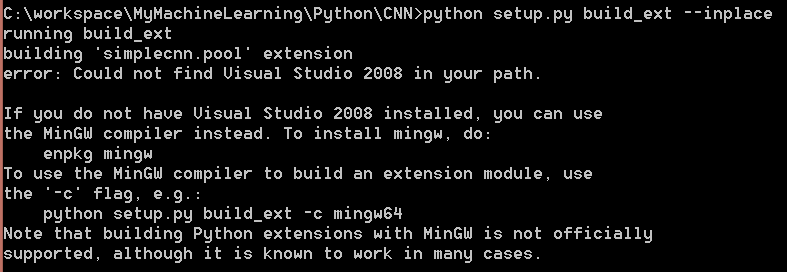

2. Following the official Cython guide (http://docs.cython.org/src/quickstart/build.html#building-a-cython-module-using-distutils), I tried:

    python setup.py build_ext --inplace

But the build failed because Visual Studio 2008 could not be found on my path, and the suggestion was to compile with mingw64 instead. Luckily, I already had mingw64 installed on my Windows machine.

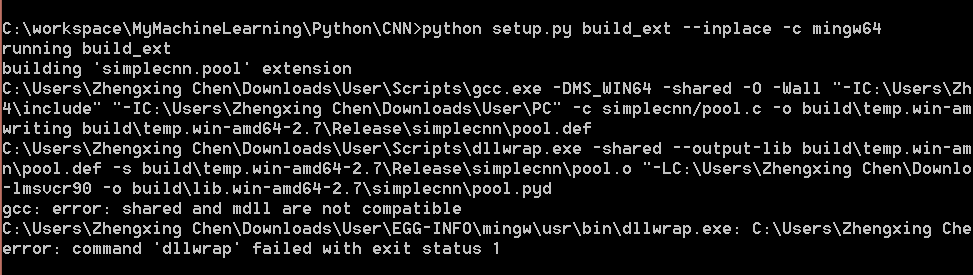
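
Before picking a compiler, it can help to confirm which compiler executables are actually reachable from the command line. The following is a minimal sketch, not part of my original workflow. It assumes Python 3.3 or later (for `shutil.which`), and `find_tools` is a hypothetical helper name.

```python
import shutil


def find_tools(names):
    """Map each executable name to its full path, or None if it is not on PATH."""
    return {name: shutil.which(name) for name in names}


if __name__ == "__main__":
    # "cl" is the MSVC compiler driver; "gcc" is what a MinGW install provides.
    for name, path in find_tools(["cl", "gcc"]).items():
        print(name, "->", path or "not found on PATH")
```

If `gcc` shows up but `cl` does not, that matches the situation described here: the Visual Studio compiler is missing, but MinGW is available.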

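However, another error appeared when I ran the build with MinGW selected (distutils calls this compiler `mingw32`, even when the toolchain is mingw64):

    python setup.py build_ext --inplace -c mingw32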

    gcc: error: shared and mdll are not compatible
    error: command 'dllwrap' failed with exit status 1

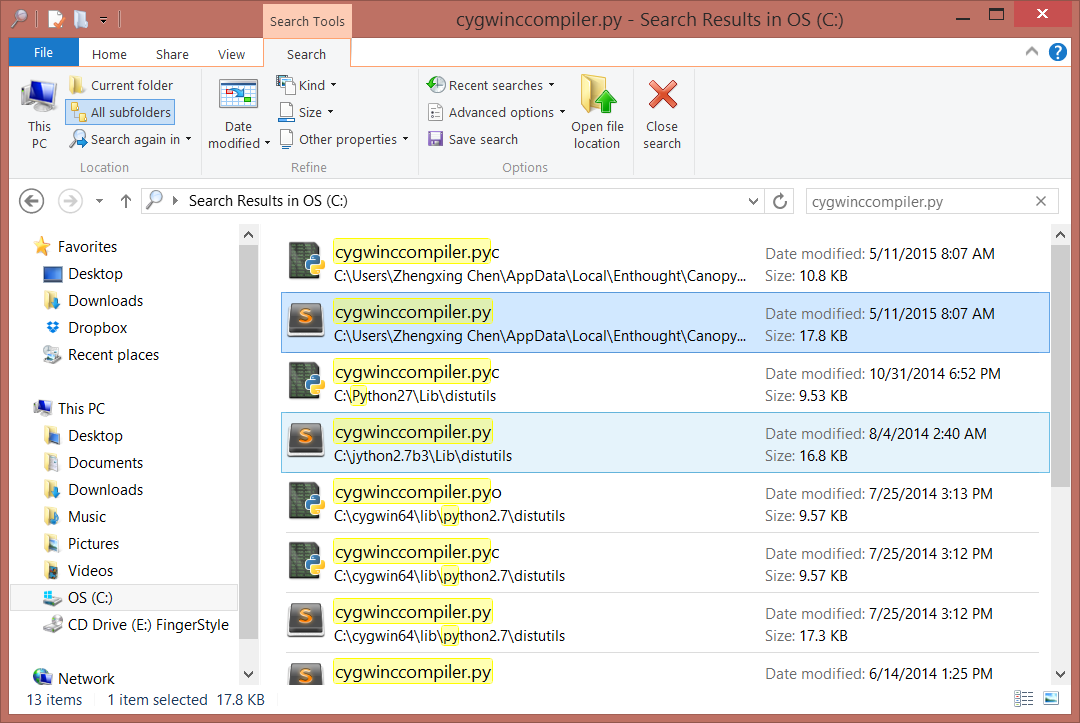

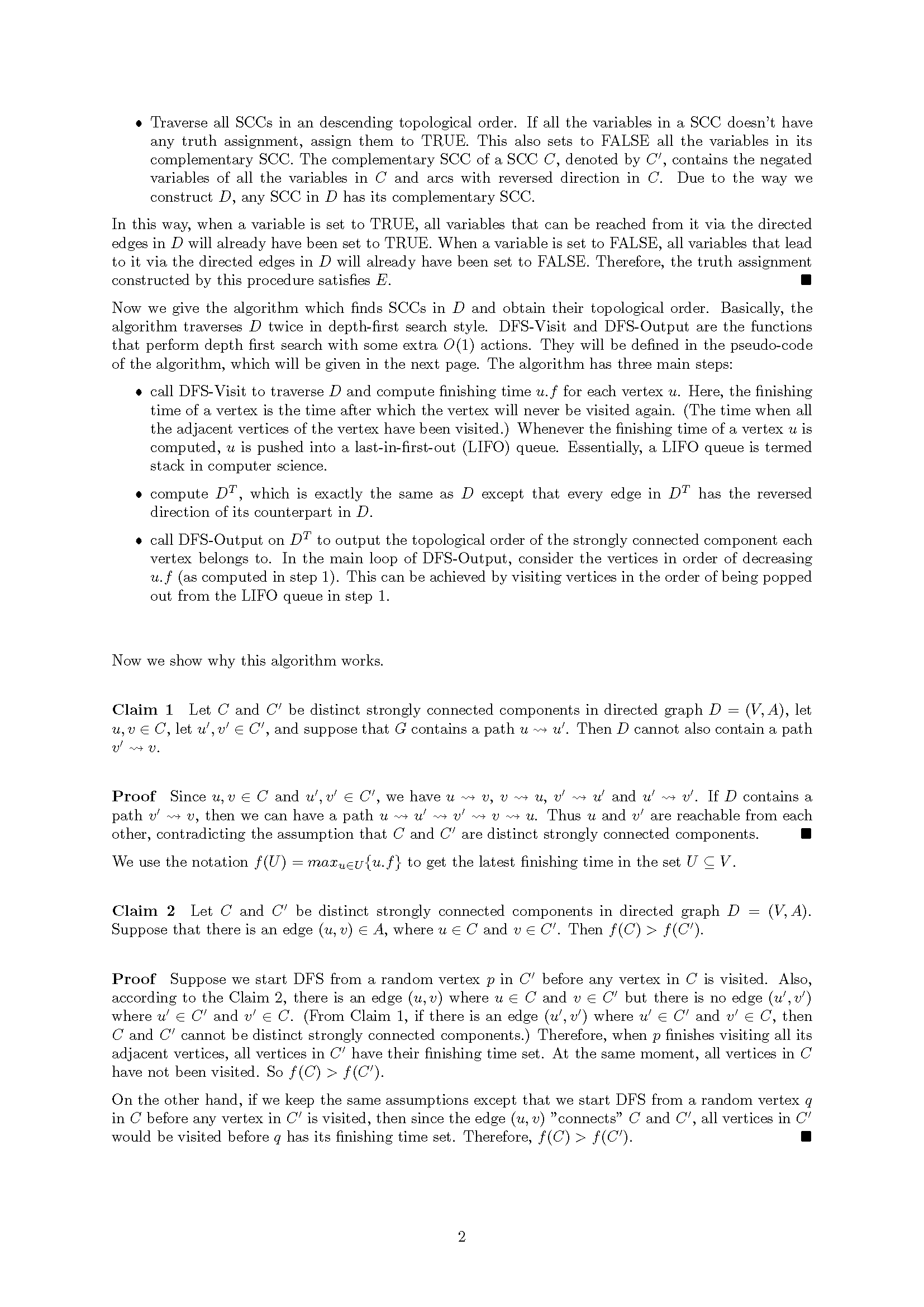

I don’t know exactly why gcc reports that “shared and mdll are not compatible”. After some experiments, though, I found that passing gcc the parameter “-mdll” rather than “-shared” avoids the error and lets the Cython module build. To do this, we need to modify `cygwinccompiler.py` in the `distutils` package. You can locate `cygwinccompiler.py` using Windows search:

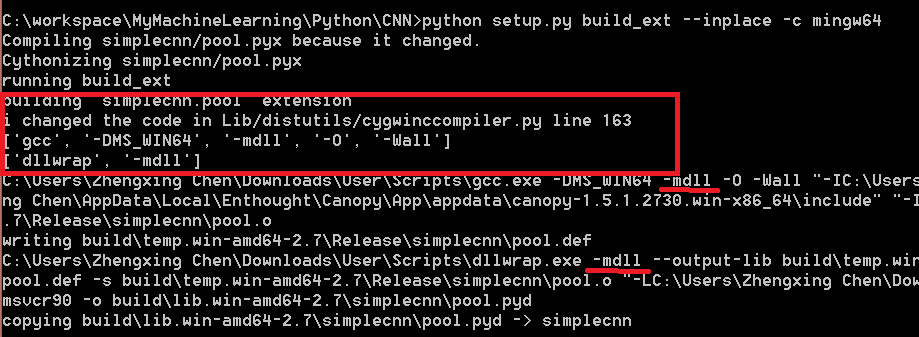

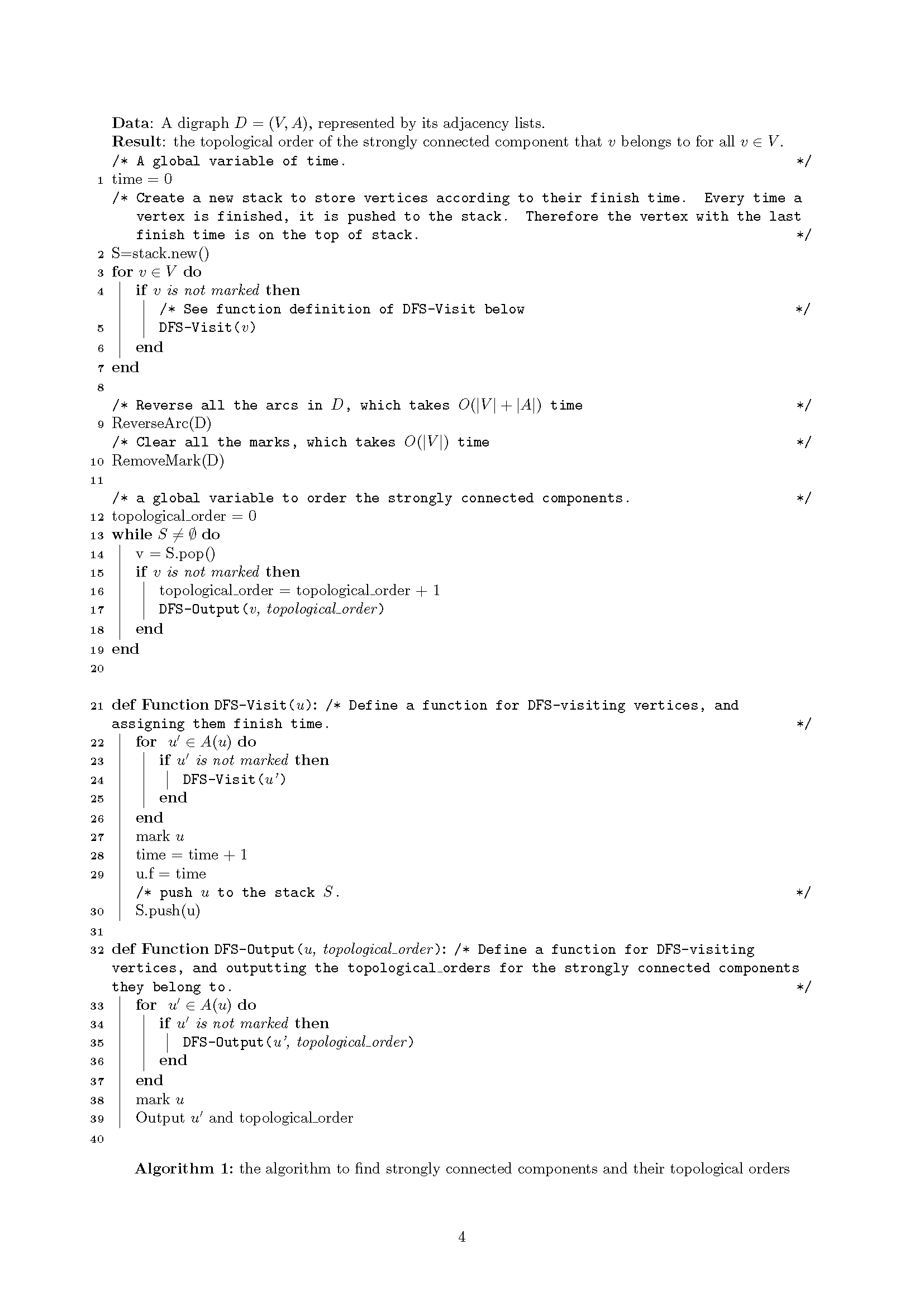

The file contains a `_compile` function that needs to be changed. The lines I added sit in the `else` branch, between `try:` and the `self.spawn(...)` call:

    def _compile(self, obj, src, ext, cc_args, extra_postargs, pp_opts):
        if ext == '.rc' or ext == '.res':
            # gcc needs '.res' and '.rc' compiled to object files !!!
            try:
                self.spawn(["windres", "-i", src, "-o", obj])
            except DistutilsExecError, msg:
                raise CompileError, msg
        else: # for other files use the C-compiler
            try:
                print "i changed the code in Lib/distutils/cygwinccompiler.py line 163"
                self.compiler_so[2] = '-mdll'
                self.linker_so[1] = '-mdll'
                print self.compiler_so
                print self.linker_so
                self.spawn(self.compiler_so + cc_args + [src, '-o', obj] +
                           extra_postargs)
            except DistutilsExecError, msg:
                raise CompileError, msg
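
The fixed indices `[2]` and `[1]` in the patch depend on how the command lists happen to be laid out on my machine. A slightly more defensive variant would replace the flag by value instead of by position. The sketch below assumes the lists contain a literal `-shared` entry, which I have not checked for every distutils version. `swap_flag` is a hypothetical helper, not part of distutils.

```python
def swap_flag(argv, old="-shared", new="-mdll"):
    """Return a copy of argv with every `old` entry replaced by `new`."""
    return [new if arg == old else arg for arg in argv]


# Illustrative command list only; the real contents of compiler_so and
# linker_so depend on the Python and distutils version in use.
example = ["gcc", "-shared", "-O", "-Wall"]
print(swap_flag(example))
```

Inside `_compile`, this could be applied as `self.compiler_so = swap_flag(self.compiler_so)`, and likewise for `self.linker_so`. A list without `-shared` is returned unchanged.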

After that, remember to delete the `cygwinccompiler.pyc` file so that Python recompiles `cygwinccompiler.py` and your modification takes effect.
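
Deleting the compiled file can also be scripted. This is a minimal sketch that assumes the Python 2 layout, where the `.pyc` file sits next to its `.py` source. `remove_stale_bytecode` is a hypothetical helper, and the path in the usage comment is only an example of where the file might live.

```python
import os


def remove_stale_bytecode(py_path):
    """Delete the '.pyc' file that sits next to py_path, if there is one.

    Returns True if a file was removed, False otherwise.
    """
    pyc_path = py_path + "c"  # foo.py -> foo.pyc (Python 2 layout)
    if os.path.isfile(pyc_path):
        os.remove(pyc_path)
        return True
    return False


# Example call (assumed install location, adjust to your own):
# remove_stale_bytecode(r"C:\Python27\Lib\distutils\cygwinccompiler.py")
```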

Finally, I retried compiling the Cython code, and it has worked ever since. A `.pyd` file was generated in the same folder as the `.pyx` file.
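
A quick way to confirm the result is to list the `.pyd` files in the package folder. This is a minimal sketch that assumes the build was run with `--inplace` and that the package directory is `simplecnn`, as in the `setup.py` above. `find_built_extensions` is a hypothetical helper.

```python
import glob
import os


def find_built_extensions(package_dir):
    """Return the sorted list of '.pyd' files directly inside package_dir."""
    return sorted(glob.glob(os.path.join(package_dir, "*.pyd")))


if __name__ == "__main__":
    # "simplecnn" is the package directory used in the setup.py above.
    built = find_built_extensions("simplecnn")
    print(built if built else "no .pyd files found; the build may have failed")
```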