The best way to convert a tex file to docx is to: use Mircosoft Office Word 2013 or later to open the pdf file generated by .tex file. Word will convert pdf to docx with pretty format.

Add permanent key bindings for Jupyter Notebook

This post shows how to add customized permanent key bindings for jupyter notebook.

1. check the location of your jupyter config folder using the command:

sudo ~/.local/bin/jupyter --config-dir

I am running Ubuntu. The config folder, by default is, `/home/your_user_name/.jupyter`

2. Create a folder `custom` in the config folder.

3. Create a file `custom.js` in the `custom` folder. (So the path for `custom.js` is `/home/your_user_name/.jupyter/custom/custom.js`) Add your key binding function in `custom.js`. Here is an example adding a key bind combo for restarting and re-running all cells.

$([Jupyter.events]).on("app_initialized.NotebookApp", function () {

Jupyter.keyboard_manager.command_shortcuts.add_shortcut('0,1', {

help: 'restart and run all',

help_index: '0,1',

handler: function (event) {

Jupyter.notebook.kernel.restart();

setTimeout(function(){ Jupyter.notebook.execute_all_cells(); }, 1000);

return false;

}}

);

});

4. Now restart the Jupyter and try press 0 followed by 1 in the command mode. All cells should be re-run after an one second delay.

Reference:

https://www.webucator.com/blog/2015/11/show-line-numbers-by-default-in-ipython-notebook/

http://stackoverflow.com/questions/32573958/how-to-bind-a-command-to-restart-and-run-all

Adaptive Regularization of Weight Vectors — Mathematical derivation

In this post I am showing my understanding about the paper Adaptive Regularization of Weight Vectors: http://papers.nips.cc/paper/3848-adaptive-regularization-of-weight-vectors.pdf

The paper aims to address the negatives of a previous algorithm called confidence weighted (CW) learning by introducing the algorithm Adaptive Regularization Of Weights (AGOW). CW and AGOW are both online learning algorithms, meaning updates happen after observing each datum. The background under investigation is linear binary classifier, i.e., the algorithms try to learn weights $latex \boldsymbol{w}$ such that ideally given a datum $latex \boldsymbol{x}_t$ and its real label $latex y_t$, $latex sign(\boldsymbol{w} \cdot \boldsymbol{x}_t) = y_t$.

First, let’s look at the characteristics of CW. At each round $latex t$ where a new datum $latex (\boldsymbol{x}, y_t)$ is observed, CW aims to solve the following optimization problem:

$latex (\boldsymbol{\mu}_t, \Sigma_t) = \min\limits_{\boldsymbol{\mu}, \Sigma} D_{KL}(\mathcal{N}(\boldsymbol{\mu}, \Sigma) || \mathcal{N}(\boldsymbol{\mu}_{t-1}, \Sigma_{t-1})) \\ \qquad \qquad \text{ s.t. } Pr_{\boldsymbol{w} \sim \mathcal{N}(\boldsymbol{\mu}, \Sigma)}[y_t (\boldsymbol{w} \cdot \boldsymbol{x}_t) \geq 0] \geq \eta $

Interpreted in plain English, the optimization problem of CW says: I should make sure the current example can be predicted correctly with $latex \eta$ confidence by updating the underlying distribution of weights $latex \boldsymbol{w}$. However, the update should be as little as possible to preserve previous weights as much as possible.

The paper analyzes the positives and the negatives of CW: forcing the probability of predicting each example correctly to be at least $latex \eta$ (usually $latex > \frac{1}{2}$) can lead to rapid learning but suffer over-fitting problem if labels are very noisy. Also, the constraint, $latex Pr_{\boldsymbol{w} \sim \mathcal{N}(\boldsymbol{\mu},\Sigma)}[y_t (\boldsymbol{w} \cdot \boldsymbol{x}_t) \geq 0] \geq \eta$, is specific to linear binary classification problems, leaving people unknown how to extend to alternative settings.

The paper proposes AGOW with the following unconstrained optimization problem:

$latex C(\boldsymbol{\mu}, \Sigma) = D_{KL}(\mathcal{N}(\mu, \Sigma) || \mathcal{N}(\mu_{t-1}, \Sigma_{t-1}) ) + \lambda_1 \ell_{h^2}(y_t, \boldsymbol{\mu} \cdot \boldsymbol{x}_t) + \lambda_2 \boldsymbol{x}_t^T \Sigma \boldsymbol{x}_t \\ \text{ where } \ell_{h^2}(y_t, \boldsymbol{\mu} \cdot \boldsymbol{x}_t) = (\max \{ 0, 1 – y_t (\boldsymbol{\mu} \cdot \boldsymbol{x}_t) \} )^2 \text{ is a squared hinge loss function. }$

The optimization problem of AGOW aims to balance the following desires:

- each update should not be too radical. (the first KL divergence term)

- the current datum should be predicted with low loss. (the second term). The property of the hinge loss indicates that if the current datum can be predicted correctly with sufficient margin (i.e., $latex y_t(\boldsymbol{\mu}\cdot \boldsymbol{x}_t) > 1$) before updating the parameters, then there is no need to update $latex \boldsymbol{\mu}$.

- confidence in the parameters should generally grow. (the third term). Note that covariance matrix is always positive semi-definite as you can google it.

Now I will show how to derive the update rule for $latex \boldsymbol{\mu}$. Since $latex C(\boldsymbol{\mu}, \Sigma)$ is a convex function w.r.t. $latex \boldsymbol{\mu}$ and it is differentiable when $latex 1 – y_t(\boldsymbol{\mu}_t \cdot \boldsymbol{x}_t) \geq 0$, we can update $latex \boldsymbol{\mu}$ by setting the derivative of $latex C(\boldsymbol{\mu}, \Sigma)$ w.r.t to $latex \boldsymbol{\mu}$ to zero:

$latex \frac{\partial C(\boldsymbol{\mu}, \Sigma)}{\partial\boldsymbol{\mu}} = \frac{\partial}{\partial\boldsymbol{\mu}} \frac{1}{2}(\boldsymbol{\mu}_{t-1} -\boldsymbol{\mu})^T \Sigma_{t-1}^{-1}(\boldsymbol{\mu}_{t-1} -\boldsymbol{\mu}) + \frac{\partial}{\partial \boldsymbol{\mu}} \frac{1}{2r} \ell_{h^2}(y_t, \boldsymbol{\mu} \cdot \boldsymbol{x}_t) = 0$

Rearranging the equality above we can get formula (6) in the paper:

$latex \boldsymbol{\mu}_t = \boldsymbol{\mu}_{t-1} + \frac{y_t}{r}(1-y_t (\boldsymbol{x}_t^T \boldsymbol{\mu}_t)) \Sigma_{t-1} \boldsymbol{x}_t$

If we multiply both sides by $latex \boldsymbol{x}_t^T$, we get:

$latex \boldsymbol{x}_t^T \boldsymbol{\mu}_t = \boldsymbol{x}_t^T \boldsymbol{\mu}_{t-1} + \frac{y_t}{r}(1-y_t (\boldsymbol{x}_t^T \boldsymbol{\mu}_t)) \boldsymbol{x}_t^T \Sigma_{t-1} \boldsymbol{x}_t$

Moving everything containing $latex \boldsymbol{x}_t^T \boldsymbol{\mu}_t$ to the left we get:

$latex (1+ \frac{1}{r}\boldsymbol{x}_t^T \Sigma_{t-1} \boldsymbol{x}_t) \boldsymbol{x}_t^T \boldsymbol{\mu}_t = \frac{y_t}{r}\boldsymbol{x}_t^T \Sigma_{t-1} \boldsymbol{x}_t + \boldsymbol{x}_t^T \boldsymbol{\mu}_{t-1}$

Therefore,

$latex \boldsymbol{x}_t^T \boldsymbol{\mu}_t = \frac{y_t \boldsymbol{x}_t^T \Sigma_{t-1} \boldsymbol{x}_t + r \boldsymbol{x}_t^T \boldsymbol{\mu}_{t-1}}{r+\boldsymbol{x}_t^T \Sigma_{t-1} \boldsymbol{x}_t}$

Plugging the formula above back to where $latex \boldsymbol{x}_t^T \boldsymbol{\mu}_t$ appears in formula (6), we have:

$latex \boldsymbol{\mu}_t = \boldsymbol{\mu}_{t-1} + \frac{1 – y_t \boldsymbol{x}_t^T \boldsymbol{\mu}_{t-1}}{r+\boldsymbol{x}_t^T \Sigma_{t-1} \boldsymbol{x}_t} \Sigma_{t-1} y_t \boldsymbol{x}_t$

Recall the passive way AGOW adopts to update $latex \boldsymbol{\mu}_t$:

- if $latex 1 – y_t(\boldsymbol{\mu}_t ) < 0$, which means the margin to predict this datum is already large enough, there is no need to update $latex \boldsymbol{\mu}_t$, i.e. $latex \boldsymbol{\mu}_t = \boldsymbol{\mu}_{t-1}$.

- if $latex 1 – y_t(\boldsymbol{\mu}_t ) \geq 0$ then we need to update $latex \boldsymbol{\mu}$ according to the equality above.

To combine these two update rules, we obtain the formula (7) in the paper:

$latex \boldsymbol{\mu}_t = \boldsymbol{\mu}_{t-1} + \frac{\max(0, 1 – y_t \boldsymbol{x}_t^T \boldsymbol{\mu}_{t-1})}{r+\boldsymbol{x}_t^T \Sigma_{t-1} \boldsymbol{x}_t} \Sigma_{t-1} y_t \boldsymbol{x}_t$

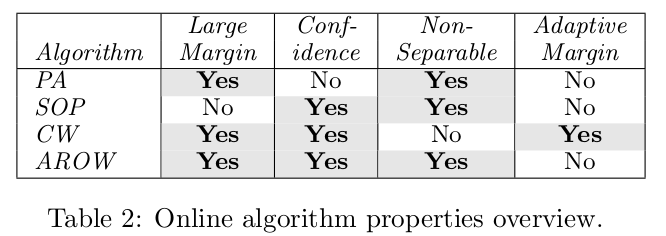

I skip the derivation for the update rule of $latex \Sigma$. The most important conclusion of the paper can be found in Table 2 where it summarizes pros and cons of state-of-the-art online learning algorithm.

Math Derivation for Bayesian Probabilistic Matrix Factorization Model

In this paper I am trying to derive the mathematical formulas that appear in the paper Bayesian Probabilistic Matrix Factorization using Markov Chain Monte Carlo. We will use exactly the same notations as in the paper. The part that I am interested in is the Inference part (Section 3.3).

Sample $latex U_i$

In Gibbs sampling, to sample one parameter, you need to determine its conditional distribution on other parameters. To sample $latex U_i$, we need to determine the distribution $latex p(U_i|R, V, \Theta_U, \Theta_V, \alpha)$. Since $latex U_i$ is independent to $latex \Theta_V$,$latex p(U_i|R, V, \Theta_U, \Theta_V, \alpha) = p(U_i | R, V, \Theta_U, \alpha)$.

According to the Bayesian rule, $latex p(U_i | R, V, \Theta_U, \alpha) \cdot p(R | V, \Theta_U, \alpha) = p(R | U_i, V, \Theta_U, \alpha) \cdot p(U_i | V, \Theta_U, \alpha) $. When we sample from $latex p(U_i | R, V, \Theta_U, \alpha)$, $latex R, V, \Theta_U$ and $latex \alpha$ are fixed. Therefore $latex p(R | V, \Theta_U, \alpha)$ can be seen as a constant. Also, in $latex p(U_i|V, \Theta_U, \alpha)$, $latex U_i$ and $latex V$ are independent, so $latex p(U_i|V, \Theta_U, \alpha)=p(U_i|\Theta_U, \alpha)$ .

Therefore, $latex p(U_i | R, V, \Theta_U, \alpha) \sim p(R | U_i, V, \Theta_U, \alpha) \cdot p(U_i |\Theta_U, \alpha)$. Before we go into further details, note that $latex p(R|U_i, V, \Theta_U, \alpha) = \prod\limits_{j=1}^M [\mathcal{N}(R_{ij}|U_i^TV_j, \alpha^{-1}) ]^{I_{ij}}$, a multiplication of $latex M$ normal distributions, and $latex p(U_i | \Theta_U, \alpha) = \mathcal{N}(U_i | \mu_U, \Lambda_U^{-1})$, another normal distribution, therefore $latex p(U_i|R, V, \Theta_U, \alpha)$ should also be an normal distribution because multiplication of multiple normal distributions results in another normal distribution with a scale factor (here the scale factor is $latex p(R|V, \Theta_U, \alpha)$).

To make things easier, let me introduce the canonical form of univariate and multivariate normal distributions. $latex \mathcal{N}(x | \mu, \sigma^2) = exp[\xi + \eta x – \frac{1}{2} \lambda^2 x^2]$ where $latex \lambda=\frac{1}{\sigma}$, $latex \eta=\lambda^2 \mu$ and $latex \xi = -\frac{1}{2}(\log 2\pi – \log \lambda^2 + \eta^2 \sigma^2 )$. $latex \mathcal{N}(\boldsymbol{x} | \boldsymbol{\mu}, V) = exp[\xi + \boldsymbol{\eta}^T \boldsymbol{x} – \frac{1}{2}\boldsymbol{x}^T \Lambda \boldsymbol{x}]$, where $latex \Lambda=V^{-1}$, $latex \boldsymbol{\eta} = V^{-1}\boldsymbol{\mu}$ and $latex \xi=-\frac{1}{2}(d\log2\pi-log|\Lambda|+\boldsymbol{\eta}^T \Lambda^{-1} \boldsymbol{\eta})$. (You can find some patterns of how the univariate case generalizes to the multivariate case as I arrange in this way.)

Now we proceed to expand $latex p(R | U_i, V, \Theta_U, \alpha)$ and $latex p(U_i | \Theta_U, \alpha)$ leveraging such canonical form.

$latex p(R|U_i, V, \Theta_U, \alpha) \\= \prod\limits_{j=1}^M [\mathcal{N}(R_{ij}|U_i^TV_j, \alpha^{-1}) ]^{I_{ij}} \\= \prod\limits_{j=1}^{M} exp[-\frac{1}{2}I_{ij}(\log 2\pi – \log \alpha + \alpha U_i^T V_j V_j^T U_i) + \alpha I_{ij} R_{ij}V_j^T U_i – \frac{1}{2} \alpha I_{ij} R_{ij}^2] \\=\prod\limits_{j=1}^M exp[-\frac{1}{2} \alpha I_{ij} U_i^T V_j V_j^T U_i +\alpha I_{ij} R_{ij}V_j^T U_i + C] \qquad\qquad (C \text{ is everything not relevant to } U_i) \\=exp[-\frac{1}{2} U_i^T (\alpha \sum\limits_{j=1}^M I_{ij} V_j V_j^T) U_i + \alpha\sum\limits_{j=1}^M I_{ij}R_{ij}V_j^T U_i + C]$

$latex p(U_i | \Theta_U, \alpha) \\=\mathcal{N}(U_i | \mu_U, \Lambda_U^{-1}) \\= exp[-\frac{1}{2} U_i^T \Lambda_U U_i + \Lambda_U \mu_U U_i + C]$

$latex p(U_i | R, V, \Theta_U, \alpha) \\ \sim p(R | U_i, V, \Theta_U, \alpha) \cdot p(U_i |\Theta_U, \alpha) \\= exp[-\frac{1}{2} U_i^T ( \Lambda_U + \alpha \sum\limits_{j=1}^M I_{ij} V_j V_j^T ) U_i + (\alpha\sum\limits_{j=1}^M I_{ij}R_{ij}V_j + \Lambda_U \mu_U) U_i + C]$

Compare the exp formula with the canonical form of a multivariate normal distribution you can get the mean and the variance. This is how formula (12) and (13) in the paper derive.

Sample $latex \mu_U$ and $latex \Lambda_U$

According to the Bayesian rule, $latex p(\Theta_U | U, \Theta_0) \cdot p(U | \Theta_0 ) = p(U|\Theta_U, \Theta_0) \cdot p(\Theta_U | \Theta_0)$.

Again, $latex p(U|\Theta_0)$ can be seen as a constant that can be ignored. Therefore,

$latex p(\Theta_U | U , \Theta_0) \\ \sim p(U|\Theta_U, \Theta_0) \cdot p(\Theta_U | \Theta_0) \\=p(U|\Theta_U, \Theta_0) \cdot p(\mu_U | \Lambda_U, \mu_0, \beta_0) \cdot p(\Lambda|W_0, v_0) \\=\prod\limits_{i=1}^N \mathcal{N}(U_i | \mu_U, \Lambda_U^{-1}) \cdot \mathcal{N}(\mu_U|\mu_0 , (\beta_0\Lambda_U)^{-1}) \mathcal{W}(\Lambda_U | W_0, v_0) \qquad\qquad \text{(Acrd. to Formula (5) and (7) in the paper)}$

Compare to formula 216~226 in Reference [5] and formula 1~3 in Reference [6] book page 178, you will get all derivations in formula (14) in the paper. However, it seems like the formulas for $latex S$ are different between the paper and the references. In the paper, $latex S=\frac{1}{N}\sum\limits_{i=1}^{N}U_i U_i^T$. In the reference, $latex S=\frac{1}{N}\sum\limits_{i=1}^{N}(U_i – N\bar{U}) (U_i – N\bar{U})^T$. This is the only place that confuses me and I have no answer for this before I go through all the proof in the reference.

Reference

[1] https://en.wikipedia.org/wiki/Multivariate_normal_distribution

[2] https://en.wikipedia.org/wiki/Normal_distribution

[3] http://www.tina-vision.net/docs/memos/2003-003.pdf

[4] http://blog.csdn.net/shenxiaolu1984/article/details/50405659

[5] https://www.cs.ubc.ca/~murphyk/Papers/bayesGauss.pdf

Estimate best K for K-Means in parallel

Gap statistic is often used to determine the best number of clusters. Please see a local version implementation for gap statistic here: https://github.com/echen/gap-statistic.

It is often desired to parallelize such tedious job to boost the speed. I implement a parallelized version basd on the source code:

library(plyr)

library(ggplot2)

# Calculate log(sum_i(within-cluster_i sum of squares around cluster_i mean)).

dispersion = function(data, num_clusters) {

# R's k-means algorithm doesn't work when there is only one cluster.

if (num_clusters == 1) {

cluster_mean = aaply(data, 2, mean)

distances_from_mean = aaply((data - cluster_mean)^2, 1, sum)

log(sum(distances_from_mean))

} else {

# Run the k-means algorithm `nstart` times. Each run uses at most `iter.max` iterations.

k = kmeans(data, centers = num_clusters, nstart = 5, iter.max = 50)

# Take the sum, over each cluster, of the within-cluster sum of squares around the cluster mean. Then take the log. This is `W_k` in TWH's notation.

log(sum(k$withinss))

}

}

# For an appropriate reference distribution (in this case, uniform points in the same range as `data`), simulate the mean and standard deviation of the dispersion.

reference_dispersion = function(data, num_clusters, num_reference_bootstraps) {

dispersions = maply(1:num_reference_bootstraps, function(i) dispersion(generate_uniform_points(data), num_clusters))

mean_dispersion = mean(dispersions)

stddev_dispersion = sd(dispersions) / sqrt(1 + 1 / num_reference_bootstraps) # the extra factor accounts for simulation error

c(mean_dispersion, stddev_dispersion)

}

# Given a matrix `data`, where rows are observations and columns are individual dimensions, compute and plot the gap statistic (according to a uniform reference distribution).

gap_statistic = function(data, min_num_clusters = 1, max_num_clusters = 10, num_reference_bootstraps = 10) {

# register parallelism backend

library(doMC)

registerDoMC(4)

num_clusters = min_num_clusters:max_num_clusters

actual_dispersions = maply(num_clusters, function(n) dispersion(data, n), .parallel = TRUE,

.paropts = list(.export=c("dispersion"),

.packages=c("plyr")))

ref_dispersions = maply(num_clusters, function(n) reference_dispersion(data, n, num_reference_bootstraps),

.parallel = TRUE,

.paropts = list(.export=c("dispersion", "reference_dispersion"),

.packages=c("plyr")))

mean_ref_dispersions = ref_dispersions[ , 1]

stddev_ref_dispersions = ref_dispersions[ , 2]

gaps = mean_ref_dispersions - actual_dispersions

print(plot_gap_statistic(gaps, stddev_ref_dispersions, num_clusters))

print(paste("The estimated number of clusters is ", num_clusters[which.max(gaps)], ".", sep = ""))

list(gaps = gaps, gap_stddevs = stddev_ref_dispersions)

}

# Plot the gaps along with error bars.

plot_gap_statistic = function(gaps, stddevs, num_clusters) {

qplot(num_clusters, gaps, xlab = "# clusters", ylab = "gap", geom = "line", main = "Estimating the number of clusters via the gap statistic") + geom_errorbar(aes(num_clusters, ymin = gaps - stddevs, ymax = gaps + stddevs), size = 0.3, width = 0.2, colour = "darkblue")

}

# Generate uniform points within the range of `data`.

generate_uniform_points = function(data) {

# Find the min/max values in each dimension, so that we can generate uniform numbers in these ranges.

mins = aaply(data, 2, min)

maxs = apply(data, 2, max)

num_datapoints = nrow(data)

# For each dimension, generate `num_datapoints` points uniformly in the min/max range.

uniform_pts = maply(1:length(mins), function(dim) runif(num_datapoints, min = mins[dim], max = maxs[dim]))

uniform_pts = t(uniform_pts)

}

It uses `doMC` as parallelism backend. You can change how many cores it uses in line 32.

How to make multi-lined cells in table in Latex?

Use package `makecell`.

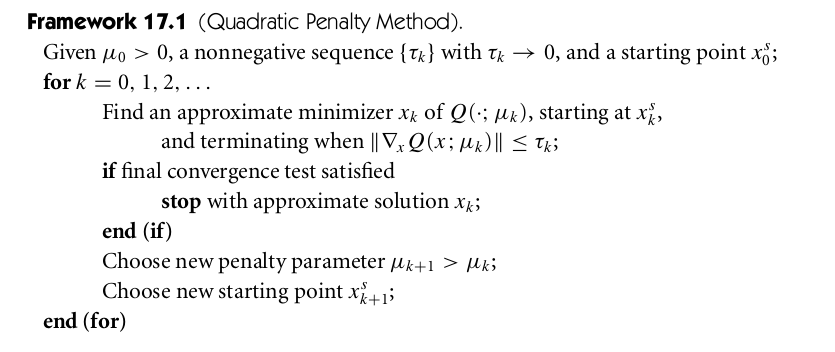

How to make match prediction using TrueSkill?

TrueSkill [1] is a widely accepted algorithm for matchmaking, in which player skills are initialized with a random variable following Gaussian distribution and then get updated through team-based match outcomes. Eventually, with sufficient amount of matches, player skill random variables will converge to some means with very small variance.

There are multiple open sourced libraries implementing the algorithm. For example, one in Python [2] and another in C# [3]. However in these open sourced libraries I don’t see how to predict new matches given learned player skill mean and variance.

Today I checked again the paper and other materials to assure that to predict a new match where draw is not allowed, whichever team has higher sum of means should be predicted to win. Why? Let’s use the example from the original TrueSkill paper.

In the factor graph above, we want to compare $latex t1$ vs $latex t2$ and $latex t2$ vs $latex t3$. Let’s take the comparison between t1 and t2 for example. We are essentially comparing $latex p1$ vs $latex p2+p3$. $latex s_1 \sim \mathcal{N}(\mu_1, \sigma_1^2)$ and $latex p_1 \sim \mathcal{N}(s_1, \beta^2)$. To get the distribution of $latex p_1$, we have:

$latex \int_{-\infty}^{\infty} \frac{1}{\sigma_1 \sqrt{2\pi}} e^{-\frac{(s_1 – \mu_1)}{2 \sigma_1^2}} \frac{1}{\beta \sqrt{2\pi}} e^{-\frac{(p_1 – s_1)}{2 \beta^2}} ds= \frac{1}{\sqrt{\sigma_1^2 + \beta^2} \sqrt{2\pi}} e^{- \frac{(p_1 – \mu_1)^2}{2(\sigma_1^2+\beta^2)}} &s=2$

Therefore $latex p_1$ is another random variable following normal distribution. $latex p_2$ and $latex p_3$ can be derived to be random variables in a similar fashion. Since we know that add/substract multiple normal random variables will result in a random variable with the mean equal to add/substract of the means of participating variables, we conclude that the predicted outcome between two teams relies on whether the sum of means of one team is greater than that of the other team. In our example, $latex d_1 \sim \mathcal{N}(\mu_1 – \mu_2 – \mu_3, \sigma’)$ for some $latex \sigma’$ not important to know for prediction.

if you want to know the winning probability of a player over another, checkout [5] and [6]

[1] http://research.microsoft.com/en-us/projects/trueskill/

[2] http://trueskill.org/

[3] http://www.moserware.com/2010/03/computing-your-skill.html

[4] http://www.tina-vision.net/docs/memos/2003-003.pdf

[5] http://stackoverflow.com/questions/28031698/with-the-trueskill-algorithm-given-two-players-ratings-how-can-i-calculate-th

[6] https://github.com/sublee/trueskill/issues/1#issuecomment-10491635

Optimization Overview

In this post, I am summarizing all I know for optimization. It is a hard, primary problem once you define your model with some kind of objective function integrating a bunch of parameters and you start to wonder how you could learn the values of those parameters to minimize (or maximize) the objective function.

Unconstrained Optimization

You wish to learn parameters to minimize the objective function and those parameters don’t have any constraints. If this is a convex problem, then there must be a global minimum and you can find it by finding all points whose first-order derivatives are zero. If the objective function is not convex, don’t panic. Gradient Descent (with lots of its variants such as momentum gradient descent, stochastic gradient descent, AdaGra) definitely help you to reach local minimum. People find that when the number of variables is large you will reach a not too bad local minimum. (missing reference here). Another family of approach is called quasi-Newton method which is my favorite. They have comparably fast speed as Newton Method does but its computation is way more economic. I implemented one unconstrained LBFGS algorithm in Python and some of its details are revealed here: https://czxttkl.com/?p=1257

Constrained Optimization

When it comes to constrained optimization, there exist tons of approaches which can be applied in different conditions. Here is a summary of techniques you can equip: (from https://ipvs.informatik.uni-stuttgart.de/mlr/marc/teaching/13-Optimization/03-constrainedOpt.pdf):

First of all, let’s get familiar with the foundations of constrained optimization.

Suppose we are faced a constrained optimization problem:

![]()

s.t. ![]() and

and ![]()

At a feasible point ![]() , the inequality constraint

, the inequality constraint ![]() is said to be active if

is said to be active if ![]() and inactive if the strict inequality

and inactive if the strict inequality ![]() is satisfied.

is satisfied.

The active set ![]() at any feasible

at any feasible ![]() consists of the equality constraint indices from

consists of the equality constraint indices from ![]() together with the indices of the inequality constraints

together with the indices of the inequality constraints ![]() for which

for which ![]() , i.e.,

, i.e., ![]()

LICQ: Given the point ![]() and the active set

and the active set ![]() , we say that the linear independence constraint qualification (LICQ) holds if the set of the active constraint gradient

, we say that the linear independence constraint qualification (LICQ) holds if the set of the active constraint gradient ![]() is linearly independent.

is linearly independent.

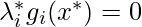

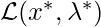

KTT (Karush-Kuhn-Tucker) conditions

KTT conditions are necessary conditions for a optimum solution in nonlinear programming. (Some of the constraints or the objective function is non-linear.) In other words, if a solution ![]() is a local minimum of a function

is a local minimum of a function ![]() which we aims to minimize under certain equality constraints

which we aims to minimize under certain equality constraints ![]() and inequality constraints

and inequality constraints ![]() , then

, then ![]() ,

, ![]() ,

, ![]() and

and ![]() must satisfy KTT conditions. The reverse, i.e., if a solution

must satisfy KTT conditions. The reverse, i.e., if a solution ![]() satisfies KTT conditions then it is a local minimum, is not always true.

satisfies KTT conditions then it is a local minimum, is not always true.

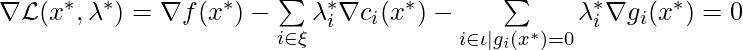

More formally to state KTT conditions:

Assume ![]() is a local solution which achieves local minimum of

is a local solution which achieves local minimum of ![]() . If

. If ![]() ,

, ![]() and

and ![]() are continuously differentiable, and LICQ holds at

are continuously differentiable, and LICQ holds at ![]() , then there is a Lagrange multiplier vector

, then there is a Lagrange multiplier vector ![]() , with components

, with components ![]() , such that following conditions are satisfied at

, such that following conditions are satisfied at ![]() :

:

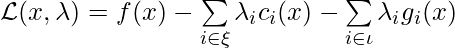

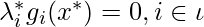

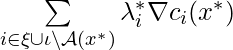

- Define:

In ![]() , we ignore to subtract

, we ignore to subtract  because KKT conditions indicate that

because KKT conditions indicate that![]() for

for ![]() , i.e., for those inactive inequality constraints

, i.e., for those inactive inequality constraints ![]() .

.

Note that, we require LICQ holds at ![]() so that

so that ![]() is unique. LICQ is called constraint qualification. There are other constraint qualifications that must hold to guarantee KKT conditions hold if

is unique. LICQ is called constraint qualification. There are other constraint qualifications that must hold to guarantee KKT conditions hold if ![]() is a local minimum. (See https://www.math.washington.edu/~burke/crs/516/notes/cq_lec.pdf) For example, if

is a local minimum. (See https://www.math.washington.edu/~burke/crs/516/notes/cq_lec.pdf) For example, if ![]() is a local minimum and Abadie constraint qualification (ACQ) holds at

is a local minimum and Abadie constraint qualification (ACQ) holds at ![]() , then KTT conditions still hold. Here ACQ is a weaker constraint qualification than LICQ because LICQ => ACQ.

, then KTT conditions still hold. Here ACQ is a weaker constraint qualification than LICQ because LICQ => ACQ.

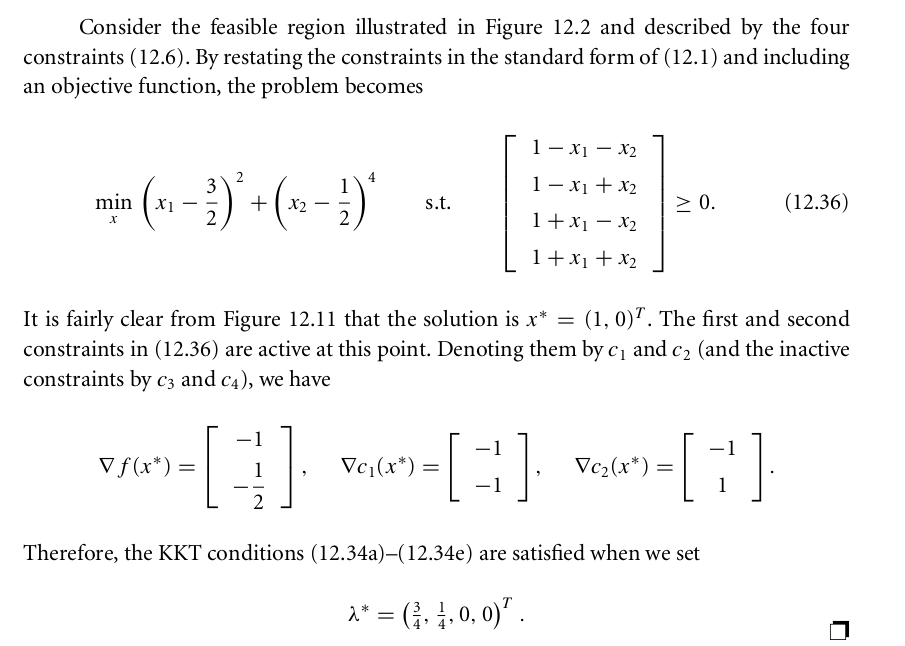

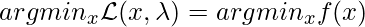

Here is my rough understanding of KKT conditions: We want to let ![]() subject to the same constraints.

subject to the same constraints. ![]() consists of

consists of ![]() and a bunch of

and a bunch of ![]() and

and ![]() . To make sure

. To make sure ![]() and

and ![]() have the same local solution

have the same local solution ![]() , you must do the following checks:

, you must do the following checks:

- For a local minimum

of

of  , if

, if  , then

, then  is not guaranteed unless

is not guaranteed unless  . Put it in other words, if you want

. Put it in other words, if you want  to have the same optimum as

to have the same optimum as  , then

, then  , the term added to

, the term added to besides

besides  , should not influence

, should not influence  and

and  to reach their local minimum at

to reach their local minimum at  at the same time.

at the same time. - For equality constraints and active inequality constraints,

and

and  are zeros which can be safely added to

are zeros which can be safely added to  without affecting

without affecting  being the local optimum for both

being the local optimum for both  and

and  .

.

Next, you should note that for the local minimum ![]() ,

, . This means, at

. This means, at ![]() , the force to minimize

, the force to minimize ![]() is pulled by the force to ensure

is pulled by the force to ensure ![]() to stay in feasible areas. This can be illustrated in the following example:

to stay in feasible areas. This can be illustrated in the following example:

As you can see, at ![]() , the force to minimize

, the force to minimize ![]() is the direction

is the direction ![]() (marked as purple arrow) . The force to pull

(marked as purple arrow) . The force to pull ![]() to stay the feasible region (the square in the middle) is

to stay the feasible region (the square in the middle) is ![]() and

and ![]() (marked as red arrows).

(marked as red arrows). ![]() makes sure

makes sure ![]() ,

, ![]() and

and ![]() are linearly dependent at this point so that

are linearly dependent at this point so that ![]() can’t be dragged any further by any force.

can’t be dragged any further by any force.

Although knowing a feasible point ![]() and a

and a ![]() satisfying KTT conditions is generally not sufficient to conclude

satisfying KTT conditions is generally not sufficient to conclude ![]() is the solution for the constrained optimization problem, under certain conditions

is the solution for the constrained optimization problem, under certain conditions ![]() indeed is the solution. For example, if you know additional information like Second Order Sufficient Conditions, then KTT conditions become sufficient to say

indeed is the solution. For example, if you know additional information like Second Order Sufficient Conditions, then KTT conditions become sufficient to say ![]() is a local solution. For another example, if Slater’s condition holds for the constrained problem, then

is a local solution. For another example, if Slater’s condition holds for the constrained problem, then ![]() . (Ref: http://math.stackexchange.com/questions/379543/kkt-and-slaters-condition, https://www.cs.cmu.edu/~ggordon/10725-F12/slides/16-kkt.pdf p11). In the famous SVM model, the objective function and its constraints also satisfies Slater’s condition such that KTT conditions are sufficient and necessary. See https://czxttkl.com/?p=3114 for how we do optimization for SVM using KTT.

. (Ref: http://math.stackexchange.com/questions/379543/kkt-and-slaters-condition, https://www.cs.cmu.edu/~ggordon/10725-F12/slides/16-kkt.pdf p11). In the famous SVM model, the objective function and its constraints also satisfies Slater’s condition such that KTT conditions are sufficient and necessary. See https://czxttkl.com/?p=3114 for how we do optimization for SVM using KTT.

Actually, Slater’s condition is originally used to prove that the strong duality holds for the constrained problem. Let’s illustrate it by introducing Duality Theory.

Duality Theory

An optimization problem can usually be constructed as a primal problem, for which there exists a corresponding dual problem. The dual problem sometimes has magics such that the computation of the dual problem is easier, or the solution of the dual problem can hint bounds of the primal problem.

Let’s say we face the same optimization problem as follows.

![]()

s.t. ![]() and

and ![]()

This problem can be converted into a primal problem incorporating all the constraints ![]() and

and ![]() :

:

![]() subject to

subject to ![]()

Why does the primal problem look like this? This is because the minimum of ![]() is the same as the minimum of the original constrained optimization problem. For those

is the same as the minimum of the original constrained optimization problem. For those ![]() s.t.

s.t. ![]() ,

, ![]() therefore any

therefore any ![]() violating the constraints is definitely not the solution to the primal problem. For those

violating the constraints is definitely not the solution to the primal problem. For those ![]() satisfying the constraints,

satisfying the constraints, ![]() . Therefore, it is equivalent to

. Therefore, it is equivalent to ![]() as to do the original constrained problem.

as to do the original constrained problem.

Now we construct the dual problem of the primal problem. The dual problem is:

![]() subject to

subject to ![]() . (Here

. (Here ![]() is infimum function denoting the lower bound of

is infimum function denoting the lower bound of ![]() . For example,

. For example, ![]() . We don’t use

. We don’t use ![]() to denote the lower bound of

to denote the lower bound of ![]() because

because ![]() can be infinitely close to but never reach the lower bound for any

can be infinitely close to but never reach the lower bound for any ![]() .)

.)

Now we have Weak Duality which goes like this:

![]() for any

for any ![]() and any feasible

and any feasible ![]()

This suggests that the optimum of the dual problem has some non-negative duality gap smaller than the optimum of the primal problem. If we can close the duality gap between the dual and the primal, we can solve the primal problem by solving the dual problem which sometimes is much easier. The duality gap is zero when Strong Duality holds for the optimization problem.

Ref: http://jackvalmadre.tumblr.com/post/15798388985

Here for the duality theory for linear programming specifically: https://www.math.washington.edu/~burke/crs/407/notes/section4.pdf

Lagrange Multiplier

Lagrange Multiplier is used for finding minimum/maximum of a function under equality constraints. Lagrange Multiplier utilizes an important fact that when a function achieves a minimum/maximum under certain equality constraints, the gradient of the function and the gradients of the constraints have the same direction. What Lagrange Multiplier essentially does is to add constraints, each multiplied by a Lagrange Multiplier, to the objective function to form an “auxiliary objective function”. By taking the derivative of the auxiliary objective function and set it to zero, we can find all stationary points of the auxiliary objective function. Among those stationary points, there must be at least one that achieves the minimum/maximum. A good tutorial of Lagrange Multiplier can be found here: http://www.slimy.com/~steuard/teaching/tutorials/Lagrange.html

Lagrange Multiplier method can be modified to solve constrained problems with inequality constraints. The multiplier and optimal solution can be found by using KKT conditions: http://www.csc.kth.se/utbildning/kth/kurser/DD3364/Lectures/KKT.pdf. One example of using Lagrange Multiplier to solve problems with inequality constraints can be found here: http://users.wpi.edu/~pwdavis/Courses/MA1024B10/1024_Lagrange_multipliers.pdf.

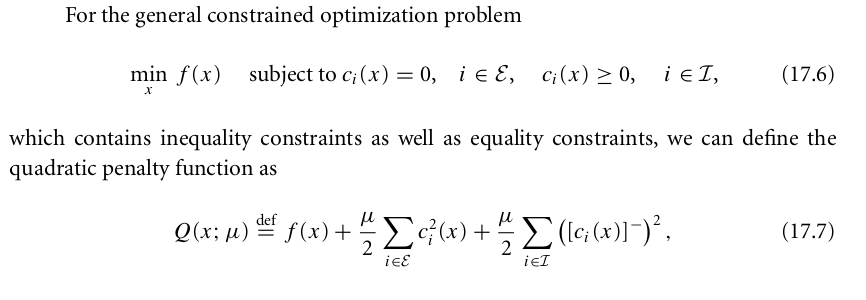

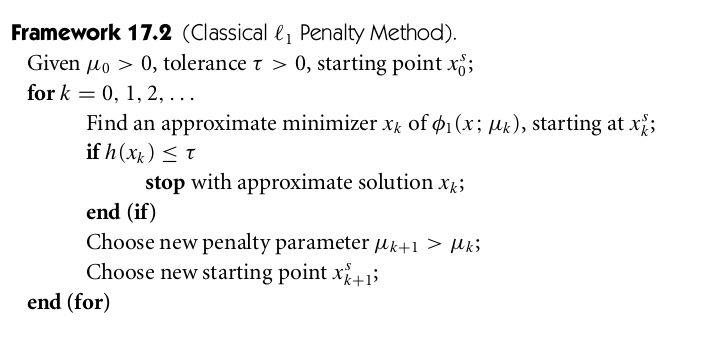

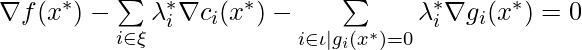

A family of constrained problem solvers converts constrained problems into unconstrained problems. In the family of such solvers, one kind of algorithms are called inexact method: the solution to the transformed unconstrained problem is close but not exactly the same to that to the original constrained problem. The other kind of algorithm is called exact method in which the solution does not change after the transformation. In the following introduced algorithms, Quadratic Penalty Method belongs to inexact method. ![]() Penalty Method and Augmented Lagrangian Method belong to exact method.

Penalty Method and Augmented Lagrangian Method belong to exact method.

Quadratic Penalty Method

![]() Penalty Method

Penalty Method

When only equality constraints are present, ![]() is smooth. But when inequality constraints are also present,

is smooth. But when inequality constraints are also present, ![]() is not smooth any more. Moreover,

is not smooth any more. Moreover, ![]() sometimes goes to be very large in iterations, likely to cause the Hessian matrix of

sometimes goes to be very large in iterations, likely to cause the Hessian matrix of ![]() ,

, ![]() , become ill-conditioned.

, become ill-conditioned.

![]() Penalty Method

Penalty Method

![]() Penalty Method is exact but the non-smoothness of

Penalty Method is exact but the non-smoothness of ![]() makes it difficult to find minimizer of it. Refer to the textbook to some hack ways to solve it.

makes it difficult to find minimizer of it. Refer to the textbook to some hack ways to solve it.

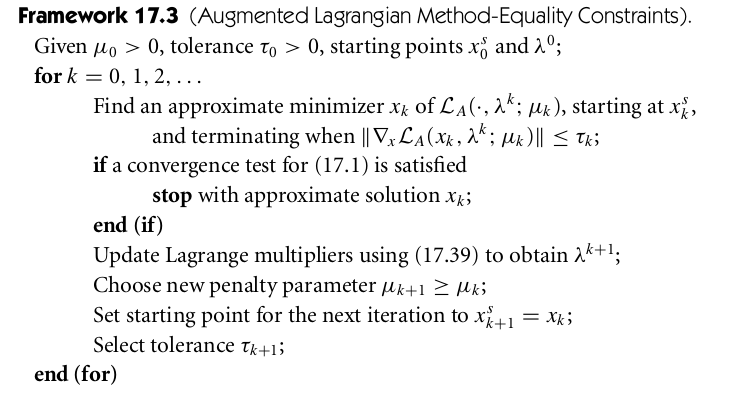

Augmented Lagrangian Method

It reduces the possibility of ill-conditioning of Quadratic Penalty Method and preserves the smoothness as opposed to ![]() Penalty Method.

Penalty Method.

https://en.wikipedia.org/wiki/Augmented_Lagrangian_method

Stochastic Gradient Descent

Stochastic Gradient Descent has been popular among large-scale machine learning. So far the most canonical text about stochastic gradient descent I’ve found is: Bottou, L. (2012). Stochastic gradient descent tricks. In Neural Networks: Tricks of the Trade (pp. 421-436). Springer Berlin Heidelberg.

The core idea is that “use stochastic gradient descent when training time is the bottleneck“. What does this mean? Roughly understanding, gradient descent should result in higher training accuracy than its stochastic counterpart because the former uses the full dataset to calculate a less noisy weight update. However in an iteration, gradient descent will take a long time to traverse all samples to calculate weight update. During that, the weight update of stochastic gradient descent has happened many times and the objective function it optimizes has been improved many times! Therefore, the paper points out that “SGD needs less time than the other algorithms to reach a predefined expected risk”. Please understand Table 2 in the paper carefully.

Projected Gradient Descent

This post highly summarizes the difference between ordinary gradient descent and projected gradient descent: http://math.stackexchange.com/questions/571068/what-is-the-difference-between-projected-gradient-descent-and-ordinary-gradient

This paper showcases how to use projected gradient descent for non-negative matrix factorization:

Lin, C. J. (2007). Projected gradient methods for nonnegative matrix factorization. Neural computation, 19(10), 2756-2779.

This paper also points out that if all the constraints are just non-negativity constraint, then we can set parameters ![]() , then we can just optimize the objective w.r.t

, then we can just optimize the objective w.r.t ![]() , where

, where ![]() .

.

However, I have not seen projected stochastic gradient descent been applied on any problem. I will keep searching why it is rarely used. My guess is that stochastic-based updates are already very noisy and unstable. The projection performed after each stochastic update will make parameter updates even more unstable such that the optimization cannot find the correct path towards the optimal parameterization.

Quadratic Programming

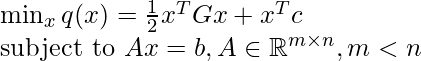

Quadratic Programming is an optimization problem with a quadratic objective function and linear constraints. If constraints only consist of equalities, it looks like the following:

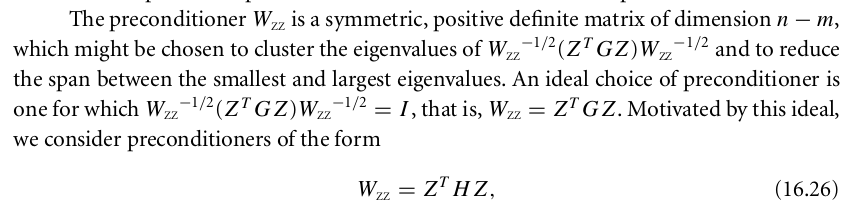

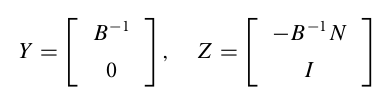

Now we introduce an iterative way to solve the quadratic programming with equality constraints, which is covered in Chapter 16.3 of Numerical Optimization by Nocedal & Wright.

First, the optimal solution ![]() can be represented as

can be represented as ![]() , where

, where ![]() ,

, ![]() ,

, ![]() ,

, ![]() and

and ![]() . Note that in

. Note that in ![]() ,

, ![]() ,

, ![]() and

and ![]() are some constants determined by elimination algorithms (introduced soon).

are some constants determined by elimination algorithms (introduced soon). ![]() is the free variables that

is the free variables that ![]() . Why can

. Why can ![]() be represented by

be represented by ![]() ? This is because

? This is because ![]() has rank

has rank ![]() (

(![]() ) therefore we can use

) therefore we can use ![]() variables in

variables in ![]() to represent

to represent ![]() variables in

variables in ![]() . Since

. Since ![]() ,

, ![]() indicates that

indicates that ![]() . Therefore,

. Therefore, ![]() is a solution satisfying

is a solution satisfying ![]() . Therefore,

. Therefore, ![]() can be seen as a sum of a particular solution to the constraint

can be seen as a sum of a particular solution to the constraint ![]() (the first term) plus a transformation

(the first term) plus a transformation ![]() applied on

applied on ![]() . Since

. Since ![]() holds, any choice of

holds, any choice of ![]() will still satisfy the constraint

will still satisfy the constraint ![]() . The only difference of choices of

. The only difference of choices of ![]() remains to be whether

remains to be whether ![]() minimizes the objective function.

minimizes the objective function.

To determine ![]() ,

, ![]() and

and ![]() , we use methods called simple elimination or general elimination:

, we use methods called simple elimination or general elimination:

1. simple elimination says that: find a permutation matrix ![]() such that

such that ![]() . Here the columns of

. Here the columns of ![]() are a subset of columns of

are a subset of columns of ![]() that are linearly independent.

that are linearly independent. ![]() . Now that if we set:

. Now that if we set:

, ![]() can be represented as

can be represented as ![]() .

.

2. general elimination uses QR-factorization on ![]() (

(![]() is a permutation matrix to obtain

is a permutation matrix to obtain ![]() ,

, ![]() and

and ![]() :

:

(I am unclear of when to use simple elimination or general elimination as the book compares the two algorithms vaguely to me. )

Now let’s go back solving the quadratic programming problem once obtaining ![]() . Plugging it to

. Plugging it to ![]() with all constants removed, we obtain

with all constants removed, we obtain ![]() . The textbook gives an iterative method to find desired

. The textbook gives an iterative method to find desired ![]() using Conjugate Gradient method, with the assumption that

using Conjugate Gradient method, with the assumption that ![]() is positive definite:

is positive definite:

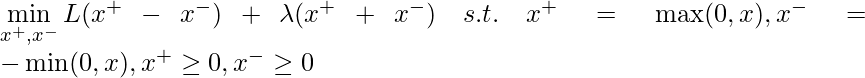

![]() regularization

regularization

Please note that ![]() regularization refers to the problem of the form:

regularization refers to the problem of the form: ![]() . Such forms of problems often have solutions

. Such forms of problems often have solutions ![]() with great sparsity. That is why

with great sparsity. That is why ![]() regularization is often used to achieve feature selection. This kind of problem is different to another kind of problem (also introduced in this post): use

regularization is often used to achieve feature selection. This kind of problem is different to another kind of problem (also introduced in this post): use ![]() as nonsmooth exact penalty function to solve constrained problems.

as nonsmooth exact penalty function to solve constrained problems.

This paper gives a full overview of methods to solve ![]() regularization problems. I am here giving a simple approach:

regularization problems. I am here giving a simple approach:

When given a ![]() regularization problem

regularization problem ![]() , we can write an equivalent constrained optimization problem:

, we can write an equivalent constrained optimization problem:

To explain why we can write such equivalent constrained optimization problem, let’s first check that ![]() and

and ![]() . Therefore

. Therefore ![]() and

and ![]() . Depending on the strength of

. Depending on the strength of ![]() ,

, ![]() and

and ![]() will be constrained to not fluctuate too radically, resulting the constrained

will be constrained to not fluctuate too radically, resulting the constrained ![]() norm of original

norm of original ![]() . Eventually, the

. Eventually, the ![]() regularization problem becomes a box constraint optimization problem (albeit the box is single side bounded, because you only require

regularization problem becomes a box constraint optimization problem (albeit the box is single side bounded, because you only require ![]() and

and ![]() to be non-negative.)

to be non-negative.)

There is an online implementation of such method using L-BFGS-B (box constraint of L-BFGS): https://gist.github.com/vene/fab06038a00309569cdd

Coordinate Descent

https://www.cs.cmu.edu/~ggordon/10725-F12/slides/25-coord-desc.pdf

Some Topics to Explore

Non convex global optimization: http://mathoverflow.net/questions/32533/is-all-non-convex-optimization-heuristic

Leetcode 202: Happy Number

https://leetcode.com/problems/happy-number/

Write an algorithm to determine if a number is “happy”.

A happy number is a number defined by the following process: Starting with any positive integer, replace the number by the sum of the squares of its digits, and repeat the process until the number equals 1 (where it will stay), or it loops endlessly in a cycle which does not include 1. Those numbers for which this process ends in 1 are happy numbers.

Example: 19 is a happy number

- 12 + 92 = 82

- 82 + 22 = 68

- 62 + 82 = 100

- 12 + 02 + 02 = 1

Code

class Solution(object):

def isHappy(self, n):

"""

:type n: int

:rtype: bool

"""

if n <= 0:

return False

nums = set()

while True:

n = sum([int(d)**2 for d in str(n)])

if n == 1:

return True

if n in nums:

return False

nums.add(n)

Idea

A number if happy if it has 1 in its happy loop. If a number is unhappy, it will endless loop, meaning at some point the square sum of digits will have repeated values. In my code, I am using a set to record the square sum in the loop. Whenever we see repeated square sum, we return unhappy. Of course, this method requires O(N) space, where N is the size of digits of the given number.

Another method is to use the idea of Floyd Cycle Detection algorithm: create a fast and a slow pointer. If fast pointer has the same value as the slow pointer, there must be a cycle. If the point they meet has the value, the number is happy.

int digitSquareSum(int n) {

int sum = 0, tmp;

while (n) {

tmp = n % 10;

sum += tmp * tmp;

n /= 10;

}

return sum;

}

bool isHappy(int n) {

int slow, fast;

slow = fast = n;

do {

slow = digitSquareSum(slow);

fast = digitSquareSum(fast);

fast = digitSquareSum(fast);

} while(slow != fast);

if (slow == 1) return 1;

else return 0;

}

Refs: https://leetcode.com/discuss/33055/my-solution-in-c-o-1-space-and-no-magic-math-property-involved

Leetcode 143: Reorder List

https://leetcode.com/problems/reorder-list/

Given a singly linked list L: L0→L1→…→Ln-1→Ln,

reorder it to: L0→Ln→L1→Ln-1→L2→Ln-2→…

You must do this in-place without altering the nodes’ values.

For example,

Given {1,2,3,4}, reorder it to {1,4,2,3}.

Code

# Definition for singly-linked list.

# class ListNode(object):

# def __init__(self, x):

# self.val = x

# self.next = None

class Solution(object):

def reorderList(self, head):

"""

:type head: ListNode

:rtype: void Do not return anything, modify head in-place instead.

"""

if not head or not head.next:

return

# find the middle point, where p1 will finally land.

p1 = p2 = head # p1: fast pointer, p2: slow pointer

while p2.next and p2.next.next:

p2 = p2.next.next

p1 = p1.next

p3 = p1.next

head2 = None

p1.next = None

# reverse the latter part

while p3:

next = p3.next

p3.next = head2

head2 = p3

p3 = next

# the first part has at least one more element than the second part

while head:

next1 = head.next

head.next = head2

if head2:

next2 = head2.next

head2.next = next1

head = next1

head2 = next2

Idea

There are three steps:

- use a slow pointer and a fast pointer to find the middle point of the linked list. The middle point will split the linked list into two parts.

- Reverse the second part of the linked list.

- Merge the two parts.