Recently, an exploding reproducibility research paper [1] shows that more than half of past published papers in the main psychological journals can’t be replicated to generate significance findings.

This makes me dig a little further on how to conduct statistical tests. Let me show you my little experiments in `R` first.

# remove(list=ls()) set.seed(19910715) n1 = 1000000 n2 = 1000000 mean1 = 0.01 mean2 = 0 grp1 <- rnorm(n1, mean=mean1, sd=1) grp2 <- rnorm(n2, mean=mean2, sd=1) t_test <- t.test(grp1, grp2) > t_test Welch Two Sample t-test data: grp1 and grp2 t = 5.8024, df = 2e+06, p-value = 6.538e-09 alternative hypothesis: true difference in means is not equal to 0 95 percent confidence interval: 0.005435609 0.010980841 sample estimates: mean of x mean of y 0.009316992 0.001108767

What I did above is to generate two groups of samples following normal distribution. They are supposed to be only 0.01 different in regard of mean. Then I did a two sides t-test to test whether the means of the two samples are different. The p-value, 6.54e-09, shows drastic significance.

True, they are indeed different since they come from two normal distributions with two close but different means. However, in the imperfect real world, almost any pair of groups of samples will have at least tiny difference in their means. Hence, you are very easy to get a significance result. That is why reporting p-value is not enough by itself.

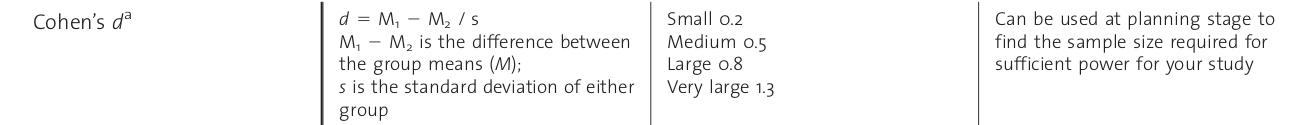

According to the paper [2], you should always report effect size together with p-value. You can also use `pes` in `compute.es` package to calculate Cohen’s d, one type of effect size (or called standardized mean difference):

library(compute.es) pes_ <- pes(p=t_test$p.value, n.1=n1, n.2=n2, dig = 10, tail="two") > pes_ Mean Differences ES: d [ 95 %CI] = 0.008205837 [ 0.005434016 , 0.01097766 ] # This is Cohen's d var(d) = 2e-06 p-value(d) = 6.5e-09 U3(d) = 50.32736 % CLES(d) = 50.23148 % Cliff's Delta = 0.004629622 g [ 95 %CI] = 0.008205834 [ 0.005434014 , 0.01097765 ] var(g) = 2e-06 p-value(g) = 6.5e-09 U3(g) = 50.32736 % CLES(g) = 50.23148 % Correlation ES: r [ 95 %CI] = 0.004102884 [ 0.002716995 , 0.005488758 ] var(r) = 5e-07 p-value(r) = 6.5e-09 z [ 95 %CI] = 0.004102907 [ 0.002717001 , 0.005488813 ] var(z) = 5e-07 p-value(z) = 6.5e-09 Odds Ratio ES: OR [ 95 %CI] = 1.014995 [ 1.009905 , 1.020111 ] p-value(OR) = 6.5e-09 Log OR [ 95 %CI] = 0.01488374 [ 0.009856215 , 0.01991127 ] var(lOR) = 6.5798e-06 p-value(Log OR) = 6.5e-09 Other: NNT = 433.793 Total N = 2e+06

The result turns out to be a very poor effect size (only 0.0082), as you can compare with the table of Cohen’s d effect size:

The power of the test will be determined once you specify sample size, significance level and effect size ([3], [5]). So I use an R package `pwr` to calculate the power. I use conventional 0.05 significance level.

# library(pwr) pwr.t.test(n=n1, d=pes_$d, sig.level = 0.05, type="two.sample", alternative="two.sided") Two-sample t test power calculation n = 1e+06 d = 0.008205837 sig.level = 0.05 power = 0.9999391 # What it returns alternative = two.sided NOTE: n is number in *each* group

The power is 0.9999391, which indicates that you are not gonna to miss any true difference almost for sure. Although our effect size is poor, we have large `n` (sample size). That is why we have such high power.

Some observations can be found:

1. Keeping other parameters the same but decreasing `sig.level` will cause the power to decrease. Intuitively, if you want to pay a high price to avoid Type I Error, you inevitably introduce some additional probability of neglecting true facts.

2. Keeping other parameters the same but decreasing `n` will cause the power to decrease. Intuitively, this says that if you are given infinite data, you will definitely find any true facts.

3. Keeping other parameters the same but decreasing `d` will cause the power to decrease. Intuitively, a very large effect size will definitely help you identify true facts.

The `pwr` package can also help you determine the effect size you want, given `n`, `sig.level` and `power`:

pwr.t.test(n=n1, sig.level=0.05, power=0.9999391, type="two.sample", alternative="two.sided") Two-sample t test power calculation n = 1e+06 d = 0.008220784 # What it returns sig.level = 0.05 power = 0.9999391 alternative = two.sided NOTE: n is number in *each* group

You can also use pwr.t.test to determine sample sizes. For example, you want significance level to be 0.05, power to be 0.8 and small effect size (0.2), then you will need 393 samples in each group at least.

> pwr.t.test(d=0.2, sig.level=0.05, power=0.8, type="two.sample", alternative="two.sided") Two-sample t test power calculation n = 393.4057 d = 0.2 sig.level = 0.05 power = 0.8 alternative = two.sided NOTE: n is number in *each* group

The `pwr` package has a function to give you what values different scales of effect size should be:

> cohen.ES(test="t", size=c("small", "medium", "large"))

Conventional effect size from Cohen (1982)

test = t

size = small

effect.size = 0.2

References

[1] Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716.

[2] Sullivan, G. M., & Feinn, R. (2012). Using effect size-or why the P value is not enough. Journal of graduate medical education, 4(3), 279-282.

[3] http://www.statmethods.net/stats/power.html

[4] http://www.ats.ucla.edu/stat/r/dae/t_test_power3.htm

[5] http://www.quora.com/Why-dont-people-care-much-about-power-1-Type-II-error-of-a-hypothesis-test